Post-upgrade Tasks

Post-upgrade Tasks for Ranger Usersync

For HDP-2.6 and higher, Ranger Usersync supports incremental sync for LDAP sync. For users upgrading with LDAP sync, the ranger.usersync.ldap.deltasync property (found in Ranger User Info with the label "Incremental Sync") will be set to false. This property is set to true in new installs of Ranger in HDP-2.6 and higher.
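To confirm which value the property has after the upgrade, one option is to read it from the Usersync configuration file on the Usersync host. The following is a minimal sketch, assuming a Hadoop-style XML configuration with one tag per line or name and value on the same line; the helper name xml_property_value and the file path in the usage comment are assumptions, not something this guide specifies.

```shell
#!/bin/sh
# Print the <value> of a named property from a Hadoop-style XML
# configuration read on stdin. Hypothetical helper for illustration.
xml_property_value() {
    awk -v name="$1" '
        /<name>/ {
            n = $0
            gsub(/.*<name>|<\/name>.*/, "", n)   # keep only the text inside <name>
            found = (n == name)
        }
        found && /<value>/ {
            v = $0
            gsub(/.*<value>|<\/value>.*/, "", v) # keep only the text inside <value>
            print v
            exit
        }
    '
}

# Usage (the path is an assumption; adjust it to your installation):
#   xml_property_value ranger.usersync.ldap.deltasync \
#       < /etc/ranger/usersync/conf/ranger-ugsync-site.xml
```

If the command prints false on an upgraded cluster, that matches the behavior described above.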

Post-upgrade Tasks for Ranger with Kerberos

If you have not already done so, you should migrate your audit logs from DB to Solr.

After successfully upgrading, you must regenerate keytabs. Select the Only regenerate keytabs for missing hosts and components check box, confirm the keytab regeneration, then restart all required services.

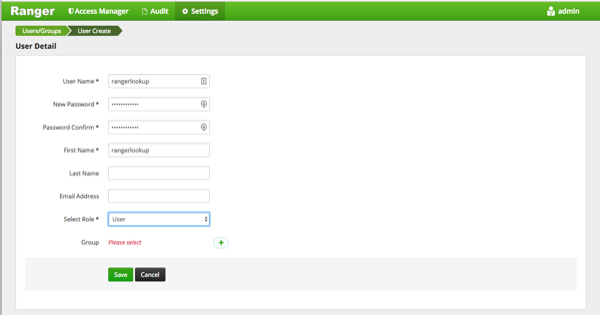

Log in to the Ranger Admin UI and select Settings > Users/Groups. Select the Users tab, then click Add New User. On the User Detail page, enter the short name of the principal set in ranger.lookup.kerberos.principal (if that user does not already exist) in the User Name box. For example, if the lookup principal is rangerlookup/_HOST@${realm}, enter rangerlookup as the user name. Enter the other settings shown below, then click Save to create the user. Add the new rangerlookup user in Ranger policies and services. You can use Test Connection on the Services pages to check the connection.
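The short name in the example above is the part of the principal before the first "/" or "@". A minimal sketch of that derivation follows; the function name principal_short_name is hypothetical, and the sketch assumes the default mapping (clusters with custom auth_to_local rules may map principals differently).

```shell
#!/bin/sh
# Derive the short name of a Kerberos principal: everything before the
# first "/" (instance separator) or "@" (realm separator).
principal_short_name() {
    printf '%s\n' "${1%%[/@]*}"
}

principal_short_name 'rangerlookup/_HOST@EXAMPLE.COM'   # prints: rangerlookup
```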

Additional Steps for Ranger HA with Kerberos

If Ranger HA (High Availability) is set up along with Kerberos, perform the following additional steps:

Use SSH to connect to the KDC server host. Use the kadmin.local command to access the Kerberos CLI, then check the list of principals for each domain where Ranger Admin and the load-balancer are installed.

kadmin.local
kadmin.local: list_principals

For example, if Ranger Admin is installed on <host1> and <host2>, and the load-balancer is installed on <host3>, the list returned should include the following entries:

HTTP/<host3>@EXAMPLE.COM
HTTP/<host2>@EXAMPLE.COM
HTTP/<host1>@EXAMPLE.COM

If the HTTP principal for any of these hosts is not listed, use the following command to add the principal:

kadmin.local: addprinc -randkey HTTP/<host3>@EXAMPLE.COM

Note: This step must be performed each time the Spnego keytab is regenerated.
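Because this check has to be repeated after every keytab regeneration, it may help to script the comparison. The sketch below reads a principal listing on stdin and prints an addprinc command for each host whose HTTP principal is missing. The function name missing_http_principals and the host names in the usage comment are hypothetical; the function only prints commands and does not change the KDC.

```shell
#!/bin/sh
# Print an addprinc command for every host whose HTTP principal does not
# appear in the principal listing read on stdin.
# Arguments: REALM host1 [host2 ...]
missing_http_principals() {
    realm=$1
    shift
    listing=$(cat)
    for host in "$@"; do
        principal="HTTP/${host}@${realm}"
        # -x: match the whole line, -F: treat the principal as a fixed string
        if ! printf '%s\n' "$listing" | grep -qxF "$principal"; then
            printf 'addprinc -randkey %s\n' "$principal"
        fi
    done
}

# Usage on the KDC host (host names are placeholders):
#   kadmin.local -q "list_principals" \
#       | missing_http_principals EXAMPLE.COM host1 host2 host3
```

Each printed line can then be entered at the kadmin.local prompt.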

Use the following kadmin.local commands to add the HTTP principal of each of the Ranger Admin and load-balancer nodes to the Spnego keytab file:

kadmin.local: ktadd -norandkey -kt /etc/security/keytabs/spnego.service.keytab HTTP/<host3>@EXAMPLE.COM
kadmin.local: ktadd -norandkey -kt /etc/security/keytabs/spnego.service.keytab HTTP/<host2>@EXAMPLE.COM
kadmin.local: ktadd -norandkey -kt /etc/security/keytabs/spnego.service.keytab HTTP/<host1>@EXAMPLE.COM
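With more than a few hosts, the ktadd lines can be generated rather than typed. This is a small sketch under that assumption: the function name ktadd_commands is hypothetical, the ktadd options are copied unchanged from the commands above, and the function only prints the commands for review.

```shell
#!/bin/sh
# Print one ktadd command per host, using the same options as the
# commands shown above. Nothing is executed against the KDC.
# Arguments: KEYTAB REALM host1 [host2 ...]
ktadd_commands() {
    keytab=$1
    realm=$2
    shift 2
    for host in "$@"; do
        printf 'ktadd -norandkey -kt %s HTTP/%s@%s\n' "$keytab" "$host" "$realm"
    done
}

# Usage (host names are placeholders):
#   ktadd_commands /etc/security/keytabs/spnego.service.keytab EXAMPLE.COM host1 host2 host3
```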

Use the exit command to exit kadmin.local. Run the following command to check the Spnego keytab file:

klist -kt /etc/security/keytabs/spnego.service.keytab

The output should include the principals of all of the nodes on which Ranger Admin and the load-balancer are installed. For example:

Keytab name: FILE:/etc/security/keytabs/spnego.service.keytab KVNO Timestamp Principal ---- ----------------- -------------------------------------------------------- 1 07/22/16 06:27:31 HTTP/ <host3>@EXAMPLE.COM 1 07/22/16 06:27:31 HTTP/ <host3>@EXAMPLE.COM 1 07/22/16 06:27:31 HTTP/ <host3>@EXAMPLE.COM 1 07/22/16 06:27:31 HTTP/ <host3>@EXAMPLE.COM 1 07/22/16 06:27:31 HTTP/ <host3>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:23 HTTP/ <host2>@EXAMPLE.COM 1 07/22/16 08:37:35 HTTP/ <host1>@EXAMPLE.COM 1 07/22/16 08:37:36 HTTP/ <host1>@EXAMPLE.COM 1 07/22/16 08:37:36 HTTP/ <host1>@EXAMPLE.COM 1 07/22/16 08:37:36 HTTP/ <host1>@EXAMPLE.COM 1 07/22/16 08:37:36 HTTP/ <host1>@EXAMPLE.COM 1 07/22/16 08:37:36 HTTP/ <host1>@EXAMPLE.COMUse

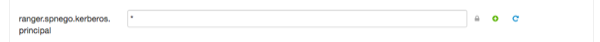

scpto copy the Spnego keytab file to every node in the cluster on which Ranger Admin and the load-balancer are installed. Verify that the/etc/security/keytabs/spnego.service.keytabfile is present on all Ranger Admin and load-balancer hosts.On the Ambari dashboard, select Ranger > Configs > Advanced, then select Advanced ranger-admin-site. Set the value of the ranger.spnego.kerberos.principal property to *.
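The klist check described above can also be automated, which is useful when the same keytab has to be verified on several hosts after copying. The sketch below reads klist -kt output on stdin and reports any expected HTTP principal that is absent. The function name keytab_missing_principals and the host names are hypothetical, and the parsing assumes the principal is the last field on each line, as in the sample output above.

```shell
#!/bin/sh
# Report expected HTTP principals that are absent from `klist -kt` output
# read on stdin. Returns 0 if all are present, 1 otherwise.
# Arguments: REALM host1 [host2 ...]
keytab_missing_principals() {
    realm=$1
    shift
    # The principal is the last whitespace-separated field on each entry line.
    listed=$(awk '{ print $NF }' | sort -u)
    rc=0
    for host in "$@"; do
        if ! printf '%s\n' "$listed" | grep -qxF "HTTP/${host}@${realm}"; then
            printf 'missing: HTTP/%s@%s\n' "$host" "$realm"
            rc=1
        fi
    done
    return $rc
}

# Usage (host names are placeholders):
#   klist -kt /etc/security/keytabs/spnego.service.keytab \
#       | keytab_missing_principals EXAMPLE.COM host1 host2 host3
```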

Click Save to save the configuration, then restart Ranger Admin only if you have completed all applicable post-upgrade tasks.

Ranger KMS

When upgrading Ranger KMS in an SSL environment to HDP-2.6, the properties listed in Step 2 under "Configuring the Ranger KMS Server" on the Enabling SSL for Ranger KMS page will be moved to the "Advanced ranger-kms-site" section.

Druid Buffer Size Configuration

Problem:

After upgrading HDP to 2.6.3, the Druid Broker may fail with the following Out Of Memory Error:

Error in custom provider, java.lang.OutOfMemoryError

at io.druid.guice.DruidProcessingModule.getMergeBufferPool(DruidProcessingModule.java:124) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.guice.DruidProcessingModule)

at io.druid.guice.DruidProcessingModule.getMergeBufferPool(DruidProcessingModule.java:124) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.guice.DruidProcessingModule)

while locating io.druid.collections.BlockingPool<java.nio.ByteBuffer> annotated with @io.druid.guice.annotations.Merging()

for the 4th parameter of io.druid.query.groupby.strategy.GroupByStrategyV2.<init>(GroupByStrategyV2.java:97)

while locating io.druid.query.groupby.strategy.GroupByStrategyV2

for the 3rd parameter of io.druid.query.groupby.strategy.GroupByStrategySelector.<init>(GroupByStrategySelector.java:43)

while locating io.druid.query.groupby.strategy.GroupByStrategySelector

for the 1st parameter of io.druid.query.groupby.GroupByQueryQueryToolChest.<init>(GroupByQueryQueryToolChest.java:104)

at io.druid.guice.QueryToolChestModule.configure(QueryToolChestModule.java:95) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.guice.QueryRunnerFactoryModule)

while locating io.druid.query.groupby.GroupByQueryQueryToolChest

while locating io.druid.query.QueryToolChest annotated with @com.google.inject.multibindings.Element(setName=,uniqueId=64, type=MAPBINDER, keyType=java.lang.Class<? extends io.druid.query.Query>)

at io.druid.guice.DruidBinders.queryToolChestBinder(DruidBinders.java:45) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.guice.QueryRunnerFactoryModule -> com.google.inject.multibindings.MapBinder$RealMapBinder)

while locating java.util.Map<java.lang.Class<? extends io.druid.query.Query>, io.druid.query.QueryToolChest>

for the 1st parameter of io.druid.query.MapQueryToolChestWarehouse.<init>(MapQueryToolChestWarehouse.java:36)

while locating io.druid.query.MapQueryToolChestWarehouse

while locating io.druid.query.QueryToolChestWarehouse

for the 1st parameter of io.druid.client.BrokerServerView.<init>(BrokerServerView.java:91)

at io.druid.cli.CliBroker$1.configure(CliBroker.java:95) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.cli.CliBroker$1)

while locating io.druid.client.BrokerServerView

at io.druid.cli.CliBroker$1.configure(CliBroker.java:96) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.cli.CliBroker$1)

while locating io.druid.client.TimelineServerView

for the 6th parameter of io.druid.server.BrokerQueryResource.<init>(BrokerQueryResource.java:64)

at io.druid.cli.CliBroker$1.configure(CliBroker.java:111) (via modules: com.google.inject.util.Modules$OverrideModule -> com.google.inject.util.Modules$OverrideModule -> io.druid.cli.CliBroker$1)

while locating io.druid.server.BrokerQueryResource

Caused by: java.lang.OutOfMemoryError

at sun.misc.Unsafe.allocateMemory(Native Method)

at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:127)

at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)

at io.druid.offheap.OffheapBufferGenerator.get(OffheapBufferGenerator.java:53)

at io.druid.offheap.OffheapBufferGenerator.get(OffheapBufferGenerator.java:29)

at io.druid.collections.DefaultBlockingPool.<init>(DefaultBlockingPool.java:58)

at io.druid.guice.DruidProcessingModule.getMergeBufferPool(DruidProcessingModule.java:127)

at io.druid.guice.DruidProcessingModule$$FastClassByGuice$$8e266e5c.invoke(<generated>)

at com.google.inject.internal.ProviderMethod$FastClassProviderMethod.doProvision(ProviderMethod.java:264)

at com.google.inject.internal.ProviderMethod$Factory.provision(ProviderMethod.java:401)

at com.google.inject.internal.ProviderMethod$Factory.get(ProviderMethod.java:376)

at com.google.inject.internal.ProviderToInternalFactoryAdapter$1.call(ProviderToInternalFactoryAdapter.java:46)

Solution:

To work around this issue, manually reduce the processing buffer size for both the Druid Historical and the Druid Broker to 134217728 bytes (128 MiB):

druid.processing.buffer.sizeBytes=134217728
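To see why a smaller buffer helps, it can be useful to estimate the direct memory that Druid allocates at startup. Druid sizes this as the buffer size multiplied by the number of merge buffers plus the number of processing threads plus one, and the total must fit within the JVM's -XX:MaxDirectMemorySize. The sketch below does that arithmetic; the function name is hypothetical, and the thread and merge buffer counts in the example are assumed values, not settings from this guide.

```shell
#!/bin/sh
# Estimate the direct memory (in bytes) needed for Druid processing buffers:
#   sizeBytes * (numMergeBuffers + numThreads + 1)
# Arguments: SIZE_BYTES NUM_THREADS NUM_MERGE_BUFFERS
druid_direct_memory_bytes() {
    size_bytes=$1
    num_threads=$2
    num_merge_buffers=$3
    echo $(( size_bytes * (num_merge_buffers + num_threads + 1) ))
}

# Example with assumed values of 7 processing threads and 2 merge buffers:
druid_direct_memory_bytes 134217728 7 2   # prints: 1342177280
```

In this example the Broker would need about 1.25 GiB of direct memory, so the configured maximum direct memory should be at least that large.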

More Information