Creating a NiFi Flow to Stream Events to HCP

You can use NiFi to create a flow to capture events from the new data source and push them into HCP.

| Important |
|---|
| The following task is an example that uses the Squid data source. Before you create a NiFi flow to stream Squid events to HCP, you must install Squid and create parsers for the data source. |

Drag the first icon on the toolbar (the processor icon) to your workspace.

Select the TailFile type of processor and click Add.

Right-click the processor icon and select Configure to display the Configure Processor dialog box.

In the Settings tab, change the name to

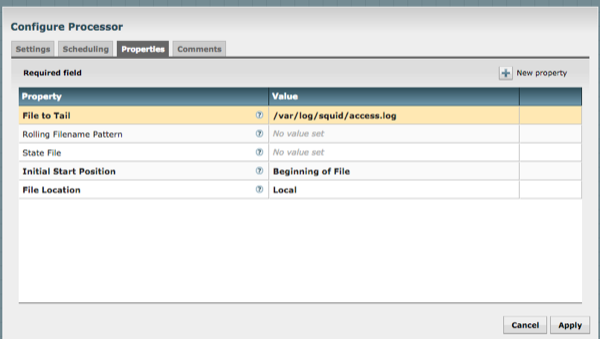

Ingest $DATASOURCE Events.In the Properties tab, configure the following:

Repeat the first step: drag the processor icon from the toolbar to your workspace.

Select the PutKafka type of processor and click Add.

Right-click the processor and select Configure.

In the Settings tab, change the name to Stream to Metron, and then select the failure and success relationship check boxes.

In the Properties tab, set the following three properties:

- Known Brokers: $KAFKA_HOST:6667
- Topic Name: $DATAPROCESSOR
- Client Name: nifi-$DATAPROCESSOR

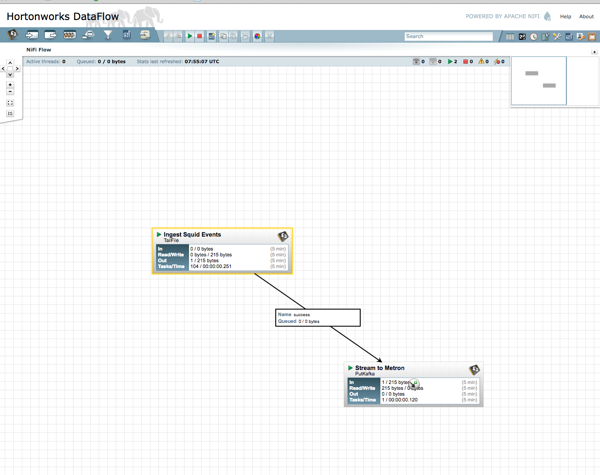
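The three PutKafka values follow a fixed pattern built from two placeholders, $KAFKA_HOST and $DATAPROCESSOR. As an illustration only, the hypothetical helper below fills them in for a given Kafka host and data processor name. It is not part of NiFi or HCP; the host name in the usage line is made up.

```python
# Hypothetical helper (not part of NiFi or HCP): fills in the PutKafka
# property values from the guide's $KAFKA_HOST and $DATAPROCESSOR placeholders.

KAFKA_BROKER_PORT = 6667  # broker port used in the guide


def put_kafka_properties(kafka_host: str, data_processor: str) -> dict[str, str]:
    """Return the three PutKafka properties for one data source."""
    if not kafka_host or not data_processor:
        raise ValueError("kafka_host and data_processor must be non-empty")
    return {
        "Known Brokers": f"{kafka_host}:{KAFKA_BROKER_PORT}",
        "Topic Name": data_processor,
        "Client Name": f"nifi-{data_processor}",
    }


if __name__ == "__main__":
    # Example values only; substitute your own Kafka host and processor name.
    for name, value in put_kafka_properties("kafka01.example.com", "squid").items():
        print(f"{name}: {value}")
```

The topic name doubles as the parser name, so keeping both derived from one value avoids a mismatch between the NiFi flow and the parser topology.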

Create a connection by dragging the arrow from the Ingest $DATASOURCE Events processor to the Stream to Metron processor.
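With the connection in place, the flow reads lines appended to the data source's log file and publishes each one to the Kafka topic. The stdlib-only sketch below approximates that behavior to make it concrete. `tail_and_publish` and its `publish` callback are hypothetical stand-ins (the callback takes the place of a real Kafka producer), and unlike NiFi's TailFile processor, the sketch keeps its offset only in memory and handles file rotation crudely.

```python
import os
from typing import Callable


def tail_and_publish(path: str, offset: int, topic: str,
                     publish: Callable[[str, bytes], None]) -> int:
    """Publish each complete line appended to `path` since `offset`.

    Returns the new offset so the caller can resume on the next poll.
    A trailing partial line (no newline yet) is left for the next call.
    """
    if os.path.getsize(path) < offset:
        offset = 0  # file shrank (truncated or rotated): start over (simplified)
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    last_newline = data.rfind(b"\n")
    if last_newline == -1:
        return offset  # no complete line yet
    for line in data[: last_newline + 1].splitlines():
        if line:
            publish(topic, line)  # stand-in for sending one Kafka message
    return offset + last_newline + 1
```

A caller would poll in a loop, passing the returned offset back in each time; in the real flow, NiFi does this polling and tracks the position for you.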

Press and hold the Shift key and drag a box around both processors to select the entire flow, and then click the Start button (green arrow).

All of the processor icons should turn into green arrows, indicating that the processors are running.

Generate some data using the client for the new data source.
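For the Squid example, you generate events by sending web requests through the Squid proxy, which records each request in its access log. The sketch below assumes Squid listens on its default port, 3128; `squid_opener` and `generate_squid_events` are hypothetical helper names, and the proxy host is a placeholder.

```python
import urllib.error
import urllib.request

SQUID_DEFAULT_PORT = 3128  # Squid's default http_port; adjust if yours differs


def squid_opener(proxy_host: str, port: int = SQUID_DEFAULT_PORT) -> urllib.request.OpenerDirector:
    """Build an opener that routes HTTP and HTTPS requests through Squid."""
    proxy_url = f"http://{proxy_host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def generate_squid_events(opener: urllib.request.OpenerDirector,
                          urls: list[str], timeout: float = 10.0) -> list[int]:
    """Request each URL through the proxy and return the HTTP status codes."""
    statuses = []
    for url in urls:
        try:
            with opener.open(url, timeout=timeout) as resp:
                statuses.append(resp.status)
        except urllib.error.HTTPError as err:
            # An HTTP error status still means the request reached the proxy,
            # so record it rather than abort.
            statuses.append(err.code)
    return statuses
```

For example, `generate_squid_events(squid_opener("squid-host"), ["http://www.example.com/"])` sends one request through the proxy; repeat it a few times to produce a steady trickle of events.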

The processor statistics should show data being pushed into Metron.

In the Storm UI, the parser topology should show tuples coming in.

After about five minutes, a new Elasticsearch index named $DATAPROCESSOR_index* should appear in the Elastic Admin UI.
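If you prefer to check from a script instead of the Admin UI, Elasticsearch's cat indices API can return the index list as JSON. The sketch below assumes your Elasticsearch version supports `_cat/indices?format=json` (each row carrying an `index` field) and that the base URL you pass is reachable; `fetch_cat_indices` and `matching_indices` are hypothetical helper names.

```python
import fnmatch
import json
import urllib.request


def fetch_cat_indices(es_url: str, timeout: float = 10.0) -> str:
    """Fetch the index list from Elasticsearch's cat indices API as JSON text."""
    url = f"{es_url.rstrip('/')}/_cat/indices?format=json"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def matching_indices(cat_indices_json: str, pattern: str) -> list[str]:
    """Return sorted index names that match a shell-style pattern such as 'squid_index*'."""
    rows = json.loads(cat_indices_json)
    return sorted(row["index"] for row in rows
                  if fnmatch.fnmatchcase(row["index"], pattern))
```

For the Squid example, `matching_indices(fetch_cat_indices(es_url), "squid_index*")` should return a non-empty list once indexing has started.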

For more information about creating a NiFi data flow, see the NiFi documentation.