Manually Enabling Kerberos

If you installed HDP manually, then you need to enable Kerberos manually.

To manually enable Kerberos, complete the following steps:

Note: These are manual instructions for Kerberizing Metron Storm topologies from Kafka to Kafka. This does not cover the Ambari MPack, sensor connections, or MAAS.

Stop all topologies. You will restart them once Kerberos has been enabled.

    for topology in bro snort enrichment indexing; do storm kill $topology; done

Set up Kerberos:

Note: If you copy and paste this full set of commands, the kdb5_util command will not run as expected. Run the commands individually to ensure they all execute. Be sure to set 'node1' to the correct host for your KDC.

    yum -y install krb5-server krb5-libs krb5-workstation
    sed -i 's/kerberos.example.com/node1/g' /etc/krb5.conf
    cp /etc/krb5.conf /var/lib/ambari-server/resources/scripts
    # This step takes a moment. It creates the kerberos database.
    kdb5_util create -s
    /etc/rc.d/init.d/krb5kdc start
    /etc/rc.d/init.d/kadmin start
    chkconfig krb5kdc on
    chkconfig kadmin on
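Because these commands are run one at a time, it can be useful to confirm that the sed substitution took effect before creating the Kerberos database. The following is a minimal sketch, not part of the Metron tooling; the function name `krb5_conf_points_at` is hypothetical, and it only checks for the placeholder host and the expected KDC host as plain strings.

```shell
# Hypothetical helper: succeeds only if the given krb5.conf no longer
# contains the placeholder host and does mention the expected KDC host.
krb5_conf_points_at() {
    conf_file="$1"
    kdc_host="$2"
    if grep -q 'kerberos\.example\.com' "$conf_file"; then
        echo "placeholder host still present in $conf_file" >&2
        return 1
    fi
    # -F treats the host name as a fixed string, not a regular expression.
    grep -q -F -- "$kdc_host" "$conf_file"
}
```

For example, `krb5_conf_points_at /etc/krb5.conf node1 && echo "krb5.conf looks updated"` could be run right after the sed step.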

Set up the admin and metron user principals.

Note: The OS or metron user name must be the same as the Kerberos user name.

You'll kinit as the metron user when running topologies. Make sure to remember the passwords.

    kadmin.local -q "addprinc admin/admin"
    kadmin.local -q "addprinc metron"

Create the metron user HDFS home directory:

    sudo -u hdfs hdfs dfs -mkdir /user/metron && \
    sudo -u hdfs hdfs dfs -chown metron:hdfs /user/metron && \
    sudo -u hdfs hdfs dfs -chmod 770 /user/metron

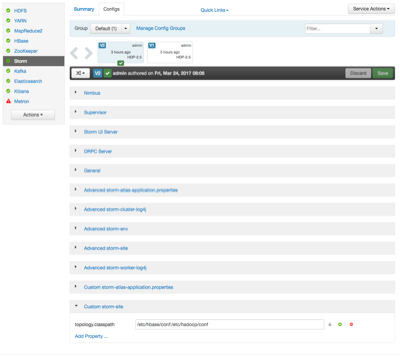

In Ambari, set up Storm to run with Kerberos and run worker jobs as the submitting user:

Add the following properties to custom storm-site:

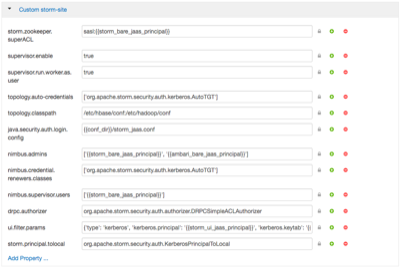

    topology.auto-credentials=['org.apache.storm.security.auth.kerberos.AutoTGT']
    nimbus.credential.renewers.classes=['org.apache.storm.security.auth.kerberos.AutoTGT']
    supervisor.run.worker.as.user=true

In the Storm config section in Ambari, choose Add Property under custom storm-site:

In the dialog window, choose the bulk property add mode toggle button and add the following values:

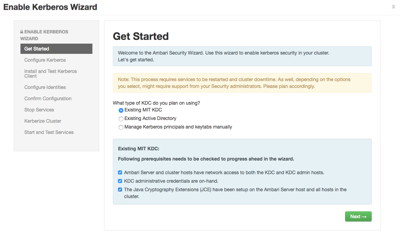

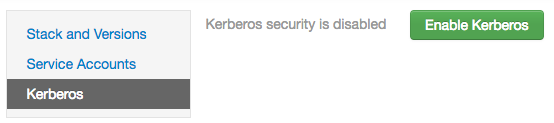

Kerberize the cluster via Ambari.

More detailed documentation can be found in Enabling Kerberos Security in the HDP Security guide.

For this exercise, choose Existing MIT KDC, which is the KDC you installed and set up in the previous steps.

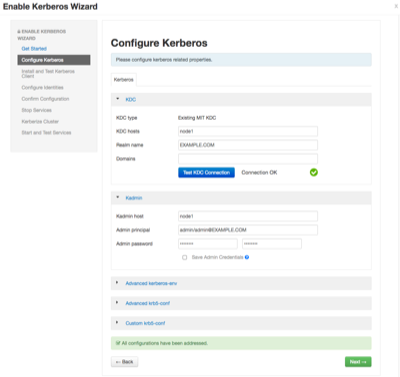

Set up Kerberos configuration. Realm is EXAMPLE.COM. The admin principal will end up as admin/admin@EXAMPLE.COM when testing the KDC. Use the password you entered during the step to add the admin principal.

Click through to Start and Test Services.

Let the cluster spin up, but don't worry about starting up Metron via Ambari. You will run the parsers manually against the rest of the Kerberized Hadoop cluster.

The wizard will fail at starting Metron, but that is okay.

Click continue.

When you’re finished, the custom storm-site should look similar to the following:

Set up Metron keytab:

    kadmin.local -q "ktadd -k metron.headless.keytab metron@EXAMPLE.COM" && \
    cp metron.headless.keytab /etc/security/keytabs && \
    chown metron:hadoop /etc/security/keytabs/metron.headless.keytab && \
    chmod 440 /etc/security/keytabs/metron.headless.keytab

Kinit with the metron user:

kinit -kt /etc/security/keytabs/metron.headless.keytab metron@EXAMPLE.COM
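Later steps depend on the metron user holding a valid ticket, so it may be worth checking the credential cache after kinit. The sketch below relies on `klist -s`, which produces no output and only sets its exit status; the function name `have_valid_ticket` is hypothetical.

```shell
# Hypothetical helper: report whether the current credential cache holds
# a readable, non-expired ticket. `klist -s` runs silently and only sets
# the exit status.
have_valid_ticket() {
    if ! command -v klist >/dev/null 2>&1; then
        echo "klist not found; is krb5-workstation installed?" >&2
        return 2
    fi
    klist -s
}
```

A typical use would be `have_valid_ticket || kinit -kt /etc/security/keytabs/metron.headless.keytab metron@EXAMPLE.COM`, which only requests a new ticket when none is usable.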

First create any additional Kafka topics you will need.

You need to create the topics before adding the required ACLs. The current full dev installation will deploy bro, snort, enrichments, and indexing only. For example:

    ${HDP_HOME}/kafka-broker/bin/kafka-topics.sh --zookeeper ${ZOOKEEPER}:2181 --create --topic yaf --partitions 1 --replication-factor 1

Set up Kafka ACLs for the topics:

    export KERB_USER=metron
    for topic in bro enrichments indexing snort; do
        ${HDP_HOME}/kafka-broker/bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=${ZOOKEEPER}:2181 --add --allow-principal User:${KERB_USER} --topic ${topic}
    done

Set up Kafka ACLs for the consumer groups:

    for group in bro_parser snort_parser yaf_parser enrichments indexing; do
        ${HDP_HOME}/kafka-broker/bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=${ZOOKEEPER}:2181 --add --allow-principal User:${KERB_USER} --group ${group}
    done

Add the metron user to the Kafka cluster ACL:

    /usr/hdp/current/kafka-broker/bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=${ZOOKEEPER}:2181 --add --allow-principal User:${KERB_USER} --cluster kafka-cluster

You also need to grant permissions to the HBase tables. Kinit as the hbase user and add ACLs for metron:

    kinit -kt /etc/security/keytabs/hbase.headless.keytab hbase-metron_cluster@EXAMPLE.COM
    echo "grant 'metron', 'RW', 'threatintel'" | hbase shell
    echo "grant 'metron', 'RW', 'enrichment'" | hbase shell
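Before moving on, the Kafka ACLs added earlier in this section can be reviewed with the `--list` option of kafka-acls.sh. The sketch below only prints one listing command per topic so that the commands can be inspected before they are run; the function name `print_acl_list_commands` is hypothetical, and it assumes `HDP_HOME` and `ZOOKEEPER` are set as in the preceding steps.

```shell
# Hypothetical helper: print (not run) one `kafka-acls.sh --list` command
# per topic given as an argument.
# Assumes HDP_HOME and ZOOKEEPER are set as in the steps above.
print_acl_list_commands() {
    for topic in "$@"; do
        printf '%s --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=%s:2181 --list --topic %s\n' \
            "${HDP_HOME}/kafka-broker/bin/kafka-acls.sh" "${ZOOKEEPER}" "${topic}"
    done
}
```

Running `print_acl_list_commands bro snort enrichments indexing` prints four commands; once they look right, the output can be piped to `sh` to execute them.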

Create a “.storm” directory in the metron user’s home directory and switch to that directory.

    su metron
    cd ~/
    mkdir .storm
    cd .storm

Create a custom client jaas file.

This should look identical to the Storm client jaas file located in /etc/storm/conf/client_jaas.conf except for the addition of a Client stanza. The Client stanza is used for ZooKeeper. All quotes and semicolons are necessary.

    [metron@node1 .storm]$ cat client_jaas.conf
    StormClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useTicketCache=true
        renewTicket=true
        serviceName="nimbus";
    };
    Client {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/etc/security/keytabs/metron.headless.keytab"
        storeKey=true
        useTicketCache=false
        serviceName="zookeeper"
        principal="metron@EXAMPLE.COM";
    };
    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/etc/security/keytabs/metron.headless.keytab"
        storeKey=true
        useTicketCache=false
        serviceName="kafka"
        principal="metron@EXAMPLE.COM";
    };

Create a storm.yaml with jaas file info. Set the array of nimbus hosts accordingly.

    [metron@node1 .storm]$ cat storm.yaml
    nimbus.seeds : ['node1']
    java.security.auth.login.config : '/home/metron/.storm/client_jaas.conf'
    storm.thrift.transport : 'org.apache.storm.security.auth.kerberos.KerberosSaslTransportPlugin'
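Since every quote and semicolon in the jaas file matters, a quick structural check can catch a missing stanza before a topology fails to authenticate. This is a rough sketch rather than a JAAS parser: the function name `check_jaas_stanzas` is hypothetical, and it assumes each stanza header and each closing `};` sits on its own line.

```shell
# Hypothetical helper: check that a jaas file declares the three stanzas
# used in this guide and has at least three closing "};" lines.
# This is a sanity check only; it does not validate the options inside.
check_jaas_stanzas() {
    jaas_file="$1"
    for stanza in StormClient Client KafkaClient; do
        # Match the stanza name at the start of a line, followed by "{".
        if ! grep -Eq "^[[:space:]]*${stanza}[[:space:]]*\{" "$jaas_file"; then
            echo "missing stanza: ${stanza}" >&2
            return 1
        fi
    done
    # Count lines that begin with "};" (grep -c prints 0 when none match).
    closers=$(grep -Ec '^[[:space:]]*\};' "$jaas_file" || true)
    [ "$closers" -ge 3 ]
}
```

For example, `check_jaas_stanzas /home/metron/.storm/client_jaas.conf && echo "jaas file has all three stanzas"`.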

Create an auxiliary storm configuration json file in the metron user’s home directory.

Note: The login config option in the file points to our custom client_jaas.conf.

    cd /home/metron
    [metron@node1 ~]$ cat storm-config.json
    {
      "topology.worker.childopts" : "-Djava.security.auth.login.config=/home/metron/.storm/client_jaas.conf"
    }

Set up enrichment and indexing:

Modify enrichment.properties: ${METRON_HOME}/config/enrichment.properties

    kafka.security.protocol=PLAINTEXTSASL
    topology.worker.childopts=-Djava.security.auth.login.config=/home/metron/.storm/client_jaas.conf

Modify elasticsearch.properties: ${METRON_HOME}/config/elasticsearch.properties

    kafka.security.protocol=PLAINTEXTSASL
    topology.worker.childopts=-Djava.security.auth.login.config=/home/metron/.storm/client_jaas.conf
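The same two properties are set in both files, so the edit can be scripted instead of made by hand. The following is one possible sketch, not a Metron utility: the function name `set_property` is hypothetical, it treats the file as simple `key=value` lines, and it moves the key to the end of the file if it already existed.

```shell
# Hypothetical helper: set key=value in a properties file. Any existing
# line that starts with "key=" is dropped and the new assignment is
# appended, so running it twice leaves exactly one line for the key.
set_property() {
    props_file="$1"
    key="$2"
    value="$3"
    tmp_file="${props_file}.tmp.$$"
    # Keep every line that does not start with "key=".
    awk -v prefix="${key}=" 'index($0, prefix) != 1' "$props_file" > "$tmp_file"
    printf '%s=%s\n' "$key" "$value" >> "$tmp_file"
    # Write back in place so the original file keeps its owner and mode.
    cat "$tmp_file" > "$props_file"
    rm -f "$tmp_file"
}
```

For example, `set_property ${METRON_HOME}/config/enrichment.properties kafka.security.protocol PLAINTEXTSASL` applies the first of the two settings shown above.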

Kinit with the metron user again:

kinit -kt /etc/security/keytabs/metron.headless.keytab metron@EXAMPLE.COM

Restart the parser topologies.

Be sure to pass in the new parameter, “-ksp” or “--kafka_security_protocol”. Run this from the metron home directory.

    for parser in bro snort; do ${METRON_HOME}/bin/start_parser_topology.sh -z ${ZOOKEEPER}:2181 -s ${parser} -ksp SASL_PLAINTEXT -e storm-config.json; done

Now restart the enrichment and indexing topologies:

    ${METRON_HOME}/bin/start_enrichment_topology.sh
    ${METRON_HOME}/bin/start_elasticsearch_topology.sh

Push some sample data to one of the parser topics.

For example, for yaf we took raw data from https://github.com/mmiklavc/incubator-metron/blob/994ba438a3104d2b7431bee79d2fce0257a96fec/metron-platform/metron-integration-test/src/main/sample/data/yaf/raw/YafExampleOutput

    cat sample-yaf.txt | ${HDP_HOME}/kafka-broker/bin/kafka-console-producer.sh --broker-list ${BROKERLIST}:6667 --security-protocol SASL_PLAINTEXT --topic yaf

Wait a few moments for data to flow through the system and then check for data in the Elasticsearch indexes. Replace yaf with whichever parser type you’ve chosen.

    curl -XGET "${ZOOKEEPER}:9200/yaf*/_search"
    curl -XGET "${ZOOKEEPER}:9200/yaf*/_count"

You should have data flowing from the parsers all the way through to the indexes.
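Because data can take a few moments to arrive, the count check can be polled rather than re-run by hand. The sketch below is an illustration only: the function name `wait_for_docs` and its attempt and delay defaults are hypothetical, and it assumes the `_count` response contains a `"count": <number>` field.

```shell
# Hypothetical helper: poll an Elasticsearch _count URL until it reports
# a non-zero count or the attempts run out.
# Arguments: URL, number of attempts (default 10), delay in seconds (default 5).
wait_for_docs() {
    count_url="$1"
    attempts="${2:-10}"
    delay="${3:-5}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # Extract the integer that follows "count": in the JSON response.
        count=$(curl -s -XGET "$count_url" |
            sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p')
        if [ -n "$count" ] && [ "$count" -gt 0 ]; then
            echo "$count"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

For example, `wait_for_docs "${ZOOKEEPER}:9200/yaf*/_count"` prints the document count once it is non-zero and returns a non-zero status if no documents appear within the allotted attempts.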