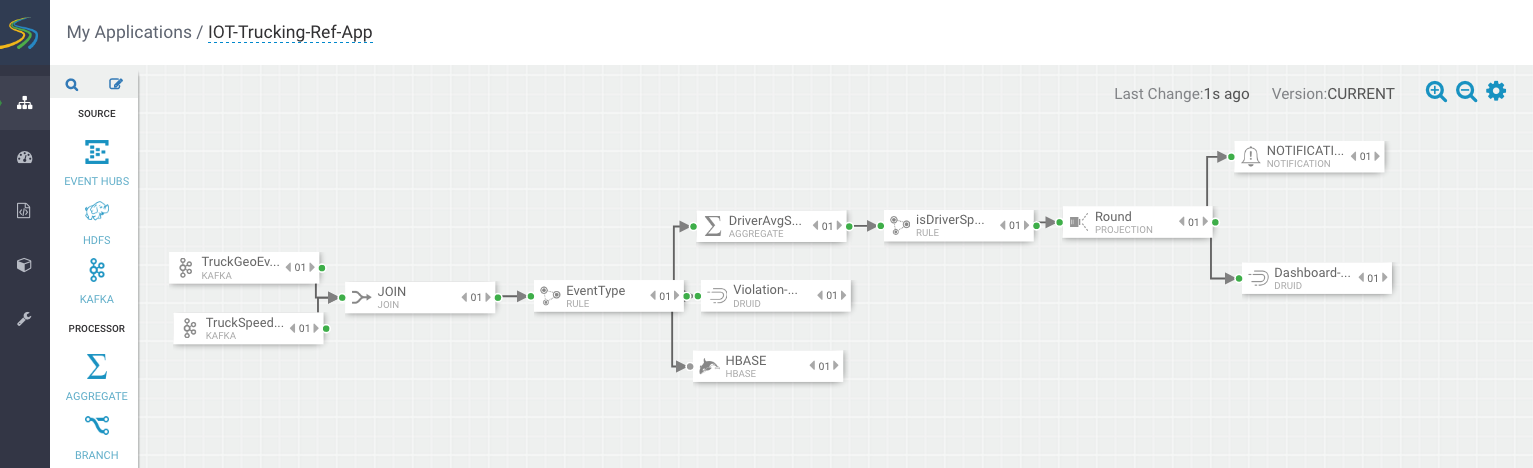

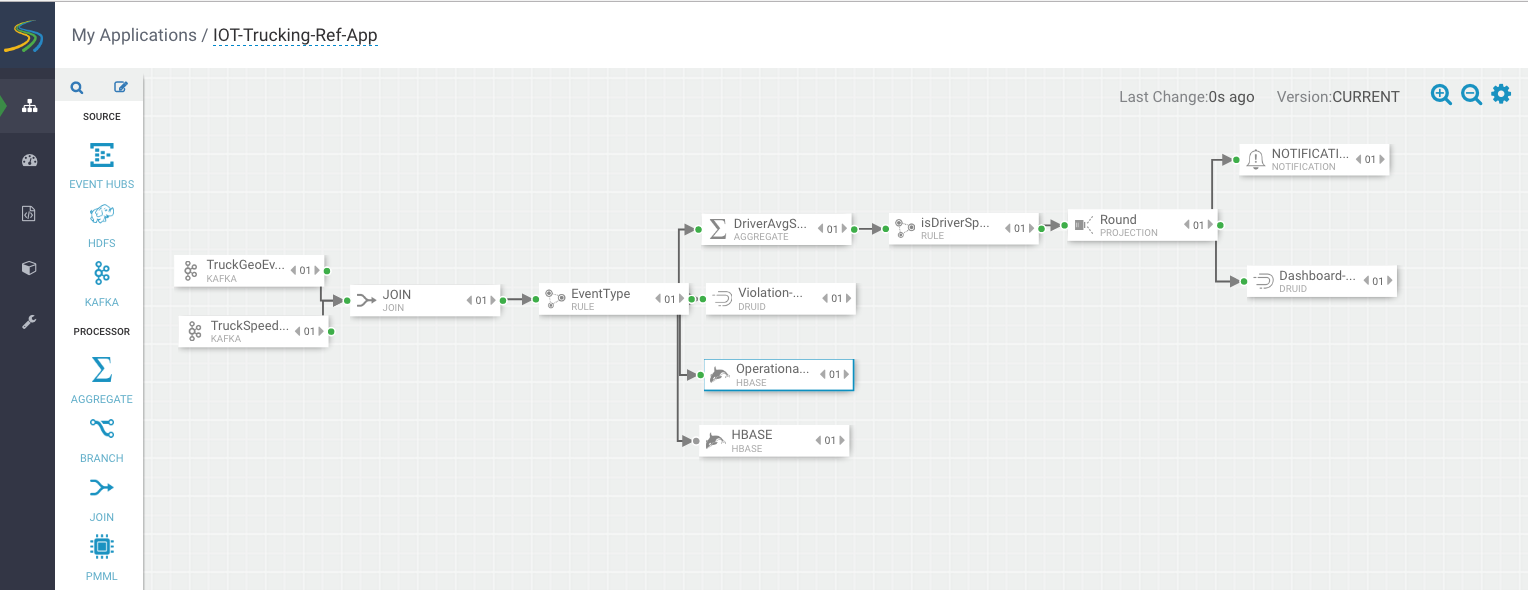

Streaming Violation Events into a Data Lake and Operational Data Store

About This Task

Another common requirement is to stream data into an operational data store such as HBase to power real-time web applications, and into a data lake backed by HDFS for long-term storage, batch ETL, and analytics.

Steps

You need to have the HBase service running. You can do this easily by adding the HDP HBase service via Ambari. Create a new HBase table by logging in to a node where the HBase client is installed, then execute the commands below:

cd /usr/hdp/current/hbase-client/bin

./hbase shell

create 'violation_events', {NAME => 'events', VERSIONS => 3}

Create the following directory in HDFS and grant all users access to it. Log in to a node where the HDFS client is installed and execute the commands below:

su hdfs

hadoop fs -mkdir /apps/trucking-app

hadoop fs -chmod 777 /apps/trucking-app
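Before wiring up the sinks, it can help to confirm that both prerequisites exist. The following is a minimal sketch, not part of the product: the function names are hypothetical, and it assumes the `hadoop` and `hbase` commands are on the PATH of the node where you run it. It also assumes that the HBase shell's non-interactive mode (`-n`) returns a non-zero exit status when `describe` is run against a missing table, which may vary by HBase version.

```shell
# Sketch only: helper names below are illustrative, not part of HDP or SAM.

# Returns 0 if the given HDFS path exists and is a directory.
hdfs_dir_exists() {
  hadoop fs -test -d "$1"
}

# Prints the schema of an HBase table using the non-interactive shell.
describe_hbase_table() {
  echo "describe '$1'" | hbase shell -n
}

# Reports whether the HDFS directory and the HBase table are present.
# Returns 0 only if both checks pass.
check_prereqs() {
  local dir="$1" table="$2" status=0
  if hdfs_dir_exists "$dir"; then
    echo "OK: HDFS directory $dir exists"
  else
    echo "MISSING: HDFS directory $dir"
    status=1
  fi
  if describe_hbase_table "$table" >/dev/null 2>&1; then
    echo "OK: HBase table $table exists"
  else
    echo "MISSING: HBase table $table"
    status=1
  fi
  return "$status"
}
```

For the names used in this task, you would call `check_prereqs /apps/trucking-app violation_events` after sourcing the functions.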

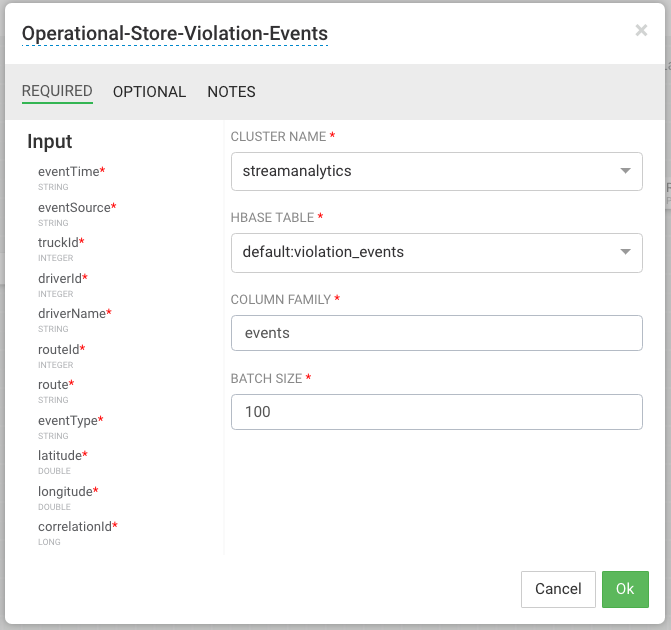

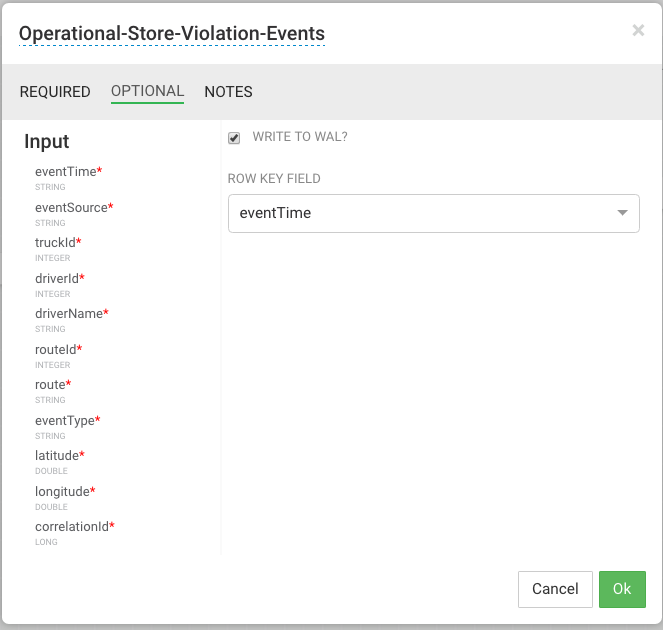

Drag the HBase sink to the canvas and connect it to the ViolationEvents Rule processor.

Configure the HBase sink as below.

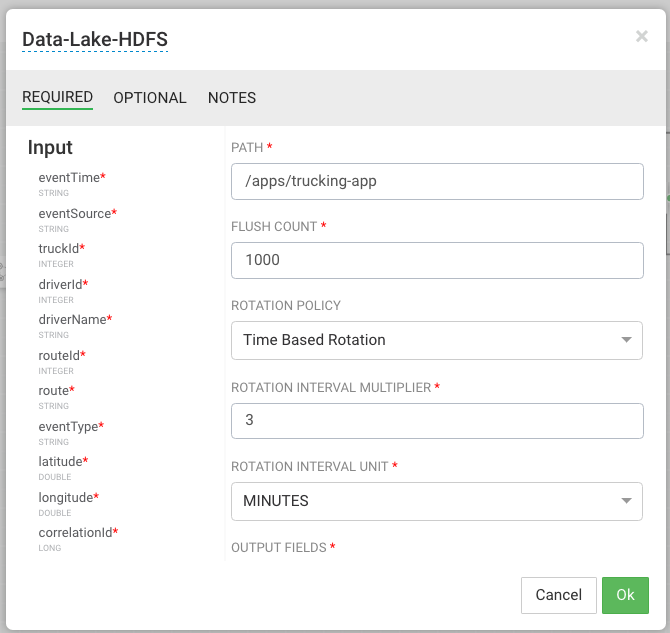

Drag the HDFS sink to the canvas and connect it to the ViolationEvents Rule processor.

Configure the HDFS sink as below. Make sure you have permission to write to the directory you have configured for the HDFS path.
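Once the application is deployed and events are flowing, you may want to check that both sinks are writing data. The sketch below is one possible way to do that from a client node. The function names are hypothetical, and it assumes the table name `violation_events` and the HDFS path `/apps/trucking-app` from the earlier steps; adjust them if your sink configuration differs.

```shell
# Sketch only: helper names below are illustrative, not part of HDP or SAM.

# Prints up to N rows (default 5) from an HBase table
# using the non-interactive shell.
peek_hbase_rows() {
  local table="$1" limit="${2:-5}"
  echo "scan '$table', {LIMIT => $limit}" | hbase shell -n
}

# Recursively lists the files under an HDFS path.
list_hdfs_files() {
  hadoop fs -ls -R "$1"
}
```

For example, `peek_hbase_rows violation_events` shows a few rows written by the HBase sink, and `list_hdfs_files /apps/trucking-app` shows the files written by the HDFS sink.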