In this section, we use NN1 to denote the original NameNode in the non-HA setup, and NN2 to denote the other NameNode that is to be added in the HA setup.

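![[Note]](../common/images/admon/note.png)

Note HA clusters reuse the nameservice ID to identify a single HDFS instance, which may consist of multiple HA NameNodes. A new abstraction called NameNode ID is added with HA: each NameNode in the cluster has a distinct NameNode ID. To support a single configuration file for all of the NameNodes, the relevant configuration parameters are suffixed with both the nameservice ID and the NameNode ID.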
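The suffixing scheme can be illustrated with a short sketch. It assumes a hypothetical nameservice ID `mycluster` and NameNode IDs `nn1` and `nn2`; the two base property names are standard HDFS keys, but the IDs are placeholders for whatever your cluster uses.

```shell
# Sketch: build HA-suffixed property names of the form
#   <base property>.<nameservice ID>.<NameNode ID>
ha_key() {
  printf '%s.%s.%s\n' "$1" "$2" "$3"
}

# Hypothetical IDs, for illustration only.
nameservice=mycluster
for nn in nn1 nn2; do
  ha_key dfs.namenode.rpc-address "$nameservice" "$nn"
  ha_key dfs.namenode.http-address "$nameservice" "$nn"
done
```

Each printed name can then be looked up on a configured host with `hdfs getconf -confKey <name>`.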

Start the JournalNode daemons on the set of machines where the JournalNodes (JNs) are deployed. On each machine, execute the following command:

/usr/lib/hadoop/sbin/hadoop-daemon.sh start journalnode

where NN1 is the original NameNode machine in your non-HA cluster.

Wait for the daemon to start on each of the JN machines.
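One way to wait is to poll each JournalNode's RPC port until it accepts connections. The following is a minimal sketch, assuming bash (it relies on the `/dev/tcp` pseudo-device), the default JournalNode RPC port 8485, and hypothetical host names; adjust the port if `dfs.journalnode.rpc-address` is set differently in your cluster.

```shell
#!/usr/bin/env bash
# Sketch: poll a TCP port until it accepts connections or a timeout expires.
# Arguments: host, port, timeout in seconds (default 60).
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} waited=0
  # Each attempt opens a connection in a subshell, which closes it on exit.
  until (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Example with hypothetical host names:
# for jn in jn1.example.com jn2.example.com jn3.example.com; do
#   wait_for_port "$jn" 8485 60 || echo "JournalNode on $jn did not come up" >&2
# done
```

An open port only shows that the daemon is listening; the JournalNode log remains the place to confirm a clean start.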

Initialize JournalNodes.

At the NN1 host machine, execute the following command:

su -l hdfs -c "hdfs namenode -initializeSharedEdits [-force | -nonInteractive]"

This command formats all the JournalNodes. By default it runs interactively: the command prompts for “Y/N” input to confirm the format. You can skip the prompt by using the -force or -nonInteractive option.

It also copies all the edits data after the most recent checkpoint from the edits directories of the local NameNode (NN1) to the JournalNodes.

At the host with the journal node (if it is separated from the primary host), execute the following command:

su -l hdfs -c "hdfs namenode -initializeSharedEdits -force"

On every ZooKeeper host, start the ZooKeeper service. Then, from one of the NameNode hosts, initialize the HA state in ZooKeeper:

hdfs zkfc -formatZK -force

At the standby NameNode host, execute the following command:

hdfs namenode -bootstrapStandby -force

Start NN1. At the NN1 host machine, execute the following command:

/usr/lib/hadoop/sbin/hadoop-daemon.sh start namenode

Ensure that NN1 is running correctly.

Initialize NN2.

Format NN2 and copy the latest checkpoint (FSImage) from NN1 to NN2 by executing the following command:

su -l hdfs -c "hdfs namenode -bootstrapStandby [-force | -nonInteractive]"

This command connects to NN1 to get the namespace metadata and the checkpointed fsimage. It also ensures that NN2 receives sufficient edit logs from the JournalNodes (corresponding to the fsimage). The command fails if the JournalNodes are not correctly initialized and cannot provide the required edit logs.

Start NN2. Execute the following command on the NN2 host machine:

/usr/lib/hadoop/sbin/hadoop-daemon.sh start namenode

Ensure that NN2 is running correctly.

Start DataNodes. Execute the following command on all the DataNodes:

su -l hdfs -c "/usr/lib/hadoop/sbin/hadoop-daemon.sh --config /etc/hadoop/conf start datanode"
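Running the command on many DataNodes by hand is tedious, so a loop over a host list may help. The sketch below is one possible approach, not part of the documented procedure: it assumes SSH access to each DataNode as a user allowed to run `su`, and a host list supplied on standard input. The `start_datanodes` function and the `DRY_RUN` variable are hypothetical names introduced here. By default it only prints what it would run.

```shell
# The start command from the step above, kept in one place.
DN_START='su -l hdfs -c "/usr/lib/hadoop/sbin/hadoop-daemon.sh --config /etc/hadoop/conf start datanode"'

# Reads one host name per line on stdin.
# DRY_RUN=1 (the default) prints the plan; DRY_RUN=0 runs it over SSH.
start_datanodes() {
  local host
  while read -r host; do
    [ -n "$host" ] || continue   # skip blank lines
    if [ "${DRY_RUN:-1}" = 1 ]; then
      printf '[dry run] %s: %s\n' "$host" "$DN_START"
    else
      # -n keeps ssh from consuming the rest of the host list on stdin.
      ssh -n "$host" "$DN_START"
    fi
  done
}

# Example with a hypothetical host file:
# start_datanodes < datanode-hosts.txt
```

Reviewing the dry-run output before setting `DRY_RUN=0` is a cheap way to catch a wrong host list.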

Validate the HA configuration.

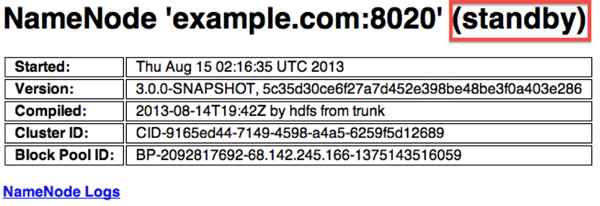

Go to the NameNodes' web pages separately by browsing to their configured HTTP addresses.

Under the configured address label, you should see the HA state of the NameNode. The NameNode can be in either the "standby" or the "active" state.

![[Note]](../common/images/admon/note.png)

Note The HA NameNode is initially in the Standby state after it is bootstrapped.

You can also use JMX (tag.HAState) to query the HA state of a NameNode. The following command can also be used to query the HA state of a NameNode:

hdfs haadmin -getServiceState <serviceId>
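To check both NameNodes in one pass, the query can be wrapped in a small loop. This sketch assumes hypothetical NameNode IDs `nn1` and `nn2`, passed as the argument to `-getServiceState`; substitute the IDs configured for your nameservice. The `ha_states` function is a name introduced here for illustration.

```shell
# Print "<NameNode ID> <state>" for each NameNode ID given as an argument.
ha_states() {
  local nn state
  for nn in "$@"; do
    # Report "unknown" if the query fails (for example, the NameNode is down).
    state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null) || state=unknown
    printf '%s %s\n' "$nn" "$state"
  done
}

# Usage (hypothetical IDs):
# ha_states nn1 nn2
```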

Transition one of the HA NameNodes to Active state.

Initially, both NN1 and NN2 are in Standby state. Therefore, you must transition one of the NameNodes to Active state. This transition can be performed using one of the following options:

Option I - Using CLI

Use the command line interface (CLI) to transition one of the NameNodes to Active state. Execute the following command on that NameNode host machine:

hdfs haadmin -failover --forcefence --forceactive <serviceId> <namenodeId>
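After the transition, it is worth confirming that exactly one NameNode reports the active state. The following is a minimal sketch of such a check. It reads lines of the form `<NameNode ID> <state>` on standard input; that input format and the function name `exactly_one_active` are assumptions made for this example.

```shell
# Succeeds only if exactly one input line has "active" as its second field.
exactly_one_active() {
  local active
  active=$(awk '$2 == "active" { n++ } END { print n + 0 }')
  [ "$active" -eq 1 ]
}

# Usage with hypothetical NameNode IDs nn1 and nn2:
# for nn in nn1 nn2; do
#   printf '%s %s\n' "$nn" "$(hdfs haadmin -getServiceState "$nn")"
# done | exactly_one_active && echo "HA state looks consistent"
```

A result of zero active NameNodes means the transition did not take effect; two would indicate a split-brain condition that needs immediate attention.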

For more information on the haadmin command, see the Appendix - Administrative Commands section in this document.

Option II - Deploying Automatic Failover

You can configure and deploy automatic failover using the instructions provided here.