Configuring access to Amazon S3

Amazon S3 is not supported as a default file system, but access to data in S3 is possible via the s3a connector. Use these steps to configure access from your cluster to Amazon S3.

These steps assume that you are using an HDP version that supports the s3a cloud storage connector (HDP 2.6.1 or newer).

Creating an IAM role for S3 access

To configure access from your cluster to Amazon S3, you must have an existing IAM role that determines which actions can be performed on which S3 buckets. If you already have an IAM role, skip to the next step. Otherwise, use the following instructions to create one.

Steps

-

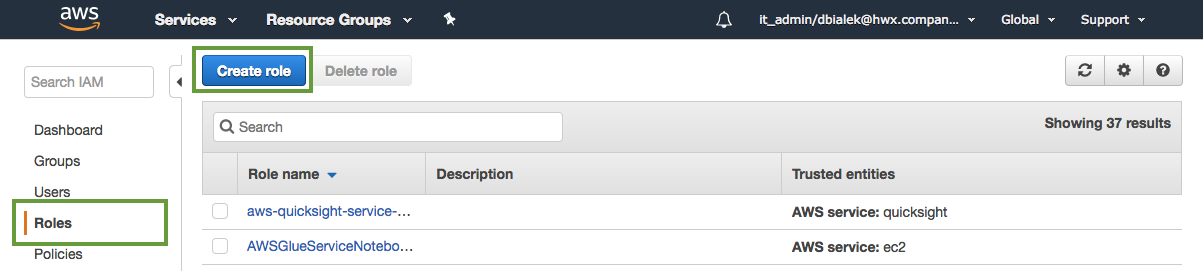

Navigate to the IAM console > Roles and click Create Role.

-

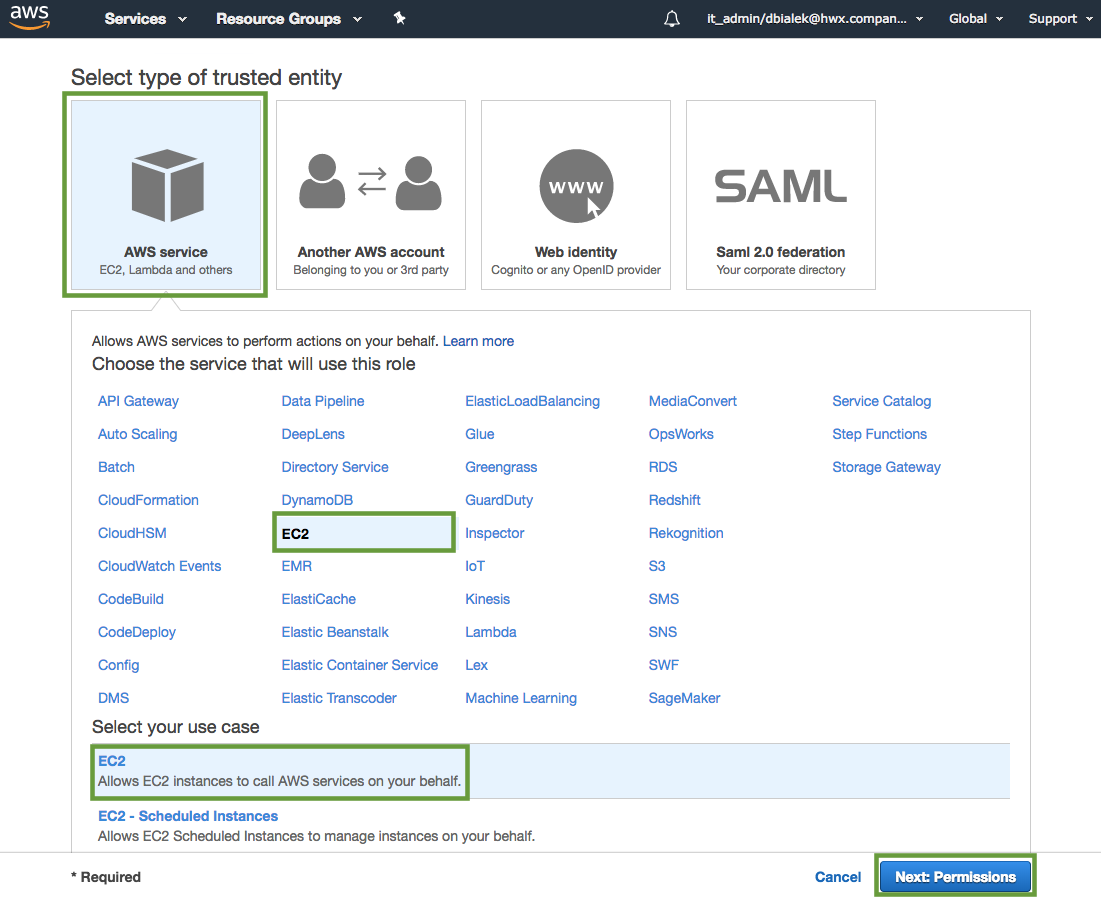

In the "Create Role" wizard, select AWS service role type and then select EC2 service and EC2 use case.

-

When done, click Next: Permissions to navigate to the next page in the wizard.

-

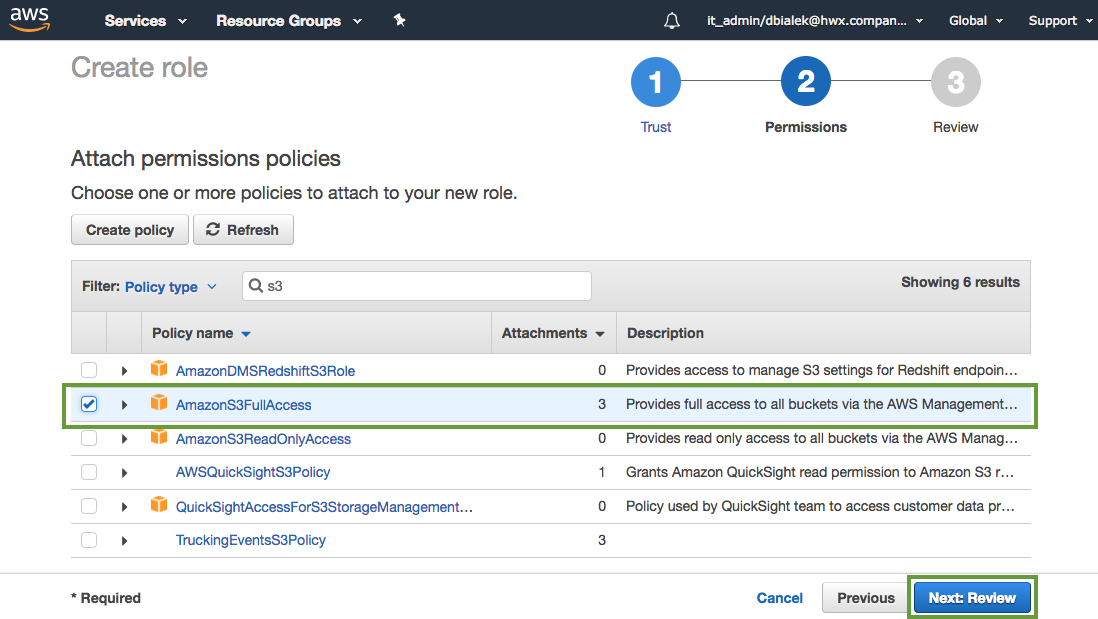

Select an existing S3 access policy or click Create policy to define a new policy. If you are just getting started, you can select a built-in policy called "AmazonS3FullAccess", which provides full access to S3 buckets that are part of your account:

-

When done attaching the policy, click Next: Review.

-

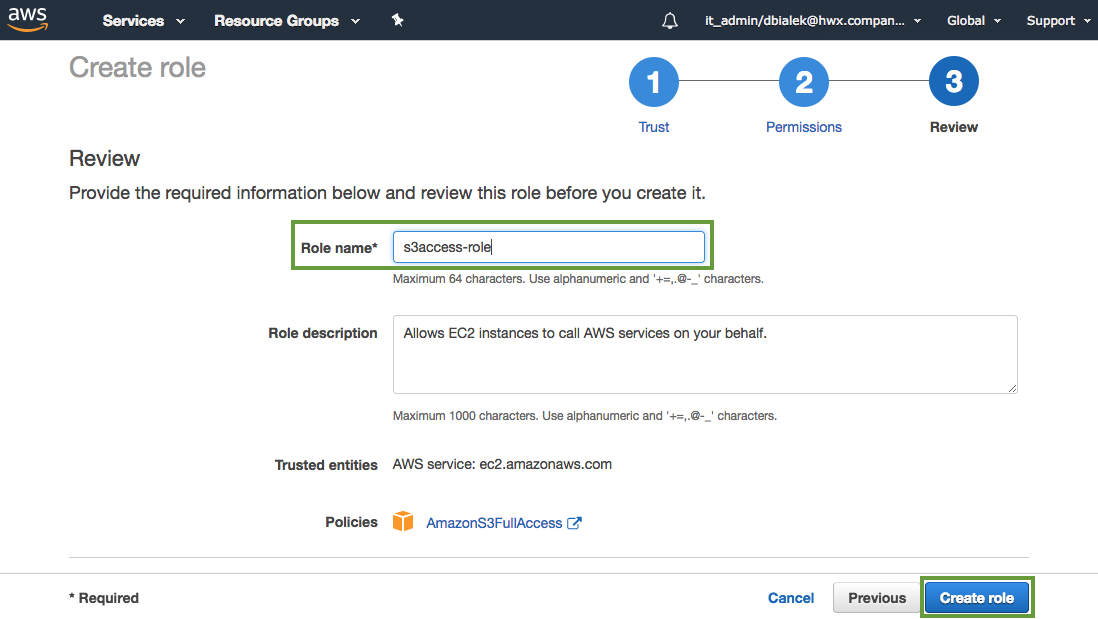

In the Roles name field, enter a name for the role that you are creating:

-

Click Create role to finish the role creation process.
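For readers who prefer scripting, the console steps above correspond roughly to the AWS CLI calls sketched below. This is an illustration rather than part of the documented procedure: the role name `my-s3-access-role` and the temporary trust-policy file are hypothetical placeholders, and the sketch assumes that the AWS CLI is installed and configured with permissions to manage IAM. Unlike the console, the CLI does not create an instance profile for the role automatically, so the sketch adds one explicitly.

```shell
# Sketch only: ROLE_NAME and the temporary file are hypothetical placeholders.
ROLE_NAME="my-s3-access-role"
TRUST_POLICY_FILE="$(mktemp)"

# Trust policy that lets EC2 instances assume the role
# (the "AWS service" > "EC2" choice in the console wizard).
cat > "$TRUST_POLICY_FILE" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

create_s3_role() {
  # Create the role with the EC2 trust policy.
  aws iam create-role \
    --role-name "$ROLE_NAME" \
    --assume-role-policy-document "file://$TRUST_POLICY_FILE" &&
  # Attach the built-in full-access policy mentioned in the steps above.
  aws iam attach-role-policy \
    --role-name "$ROLE_NAME" \
    --policy-arn "arn:aws:iam::aws:policy/AmazonS3FullAccess" &&
  # Create an instance profile with the same name and add the role to it.
  aws iam create-instance-profile \
    --instance-profile-name "$ROLE_NAME" &&
  aws iam add-role-to-instance-profile \
    --instance-profile-name "$ROLE_NAME" \
    --role-name "$ROLE_NAME"
}

# Nothing is created until the function is called explicitly:
# create_s3_role
```

"AmazonS3FullAccess" is convenient for getting started; a policy scoped to specific buckets is the more restrictive alternative described in the steps above.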

Configure access to S3

Amazon S3 is not supported as a default file system, but access to data in S3 from your cluster VMs can be automatically configured by attaching an instance profile allowing access to S3. You can optionally create or attach an existing instance profile during cluster creation on the Cloud Storage page.

To configure access to S3 with an instance profile, follow these steps.

Steps

- You or your AWS admin must create an IAM role with an S3 access policy which can be used by cluster instances to access one or more S3 buckets. Refer to Creating an IAM role for S3 access.

- On the Cloud Storage page in the advanced cluster wizard view, select Use existing instance profile.

- Select the existing IAM role created in step 1.

During the cluster creation process, Cloudbreak assigns the IAM role and its associated permissions to the EC2 instances that are part of the cluster so that applications running on these instances can use the role to access S3.
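Once the cluster is running, one way to check that a role was actually attached is to ask the EC2 instance metadata service from a cluster node. The sketch below is an assumption-laden illustration, not a Cloudbreak feature: `attached_iam_role` is a hypothetical helper name, and it assumes that `curl` is available on the node and that the instance metadata service is reachable at its standard address.

```shell
# Hypothetical helper: prints the name of the IAM role attached to this EC2 instance.
attached_iam_role() {
  # Request a short-lived session token (instance metadata service v2).
  token="$(curl -s -X PUT "http://169.254.169.254/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60")" || return 1
  # List the role whose temporary credentials are exposed to the instance.
  curl -s -H "X-aws-ec2-metadata-token: $token" \
    "http://169.254.169.254/latest/meta-data/iam/security-credentials/"
}

# Run on a cluster node:
# attached_iam_role
```

If the command prints nothing, no instance profile is attached to the instance.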

Testing access from HDP to S3

Amazon S3 is not supported in HDP as a default file system, but access to data in Amazon S3 is possible via the s3a connector.

To test access to S3 from HDP, SSH to a cluster node and run a few hadoop fs shell commands against your existing S3 bucket.

Amazon S3 access path syntax is:

s3a://bucket/dir/file

For example, to access a file called "mytestfile" in a directory called "mytestdir", which is stored in a bucket called "mytestbucket", the URL is:

s3a://mytestbucket/mytestdir/mytestfile
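The path syntax can be captured in a small shell helper, which is handy when a script has to build many such URLs. The function name `s3a_url` is a hypothetical choice for this sketch and is not part of Hadoop.

```shell
# Hypothetical helper: build an s3a:// URL from a bucket name and an object key.
s3a_url() {
  bucket="$1"
  key="${2:-}"
  # Drop one leading slash from the key so the URL does not contain "//".
  printf 's3a://%s/%s\n' "$bucket" "${key#/}"
}

s3a_url mytestbucket mytestdir/mytestfile
# prints: s3a://mytestbucket/mytestdir/mytestfile
```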

The following FileSystem shell commands demonstrate access to a bucket named "mytestbucket":

hadoop fs -ls s3a://mytestbucket/

hadoop fs -mkdir s3a://mytestbucket/testDir

hadoop fs -put testFile s3a://mytestbucket/testFile

hadoop fs -cat s3a://mytestbucket/testFile

test file content
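The commands above can also be wrapped in a repeatable smoke test. The following is a minimal sketch under stated assumptions: `s3a_smoke_test` is a hypothetical function name, the bucket name is supplied by the caller, and, unlike the commands above, the test file is written inside testDir so that a single cleanup command removes everything the test created.

```shell
# Hypothetical smoke test: list, create a directory, upload, read back, clean up.
s3a_smoke_test() {
  bucket="$1"
  tmpfile="$(mktemp)"
  echo "test file content" > "$tmpfile"

  hadoop fs -ls "s3a://$bucket/" &&
  hadoop fs -mkdir -p "s3a://$bucket/testDir" &&
  hadoop fs -put -f "$tmpfile" "s3a://$bucket/testDir/testFile" &&
  hadoop fs -cat "s3a://$bucket/testDir/testFile"
  status=$?

  # Remove the test directory and the local temporary file, even after a failure.
  hadoop fs -rm -r -skipTrash "s3a://$bucket/testDir"
  rm -f "$tmpfile"
  return "$status"
}

# Run on a cluster node against your own bucket:
# s3a_smoke_test mytestbucket
```

A non-zero return value means that one of the four access commands failed, which usually points to a missing instance profile or a policy that does not cover the bucket.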

For more information about configuring the S3 connector for HDP and working with data stored on S3, refer to the Cloud Data Access documentation.

Related links

Cloud Data Access (Hortonworks)

Configure S3 storage locations

After configuring access to S3 via instance profile, you can optionally use an S3 bucket as a base storage location; this storage location is mainly for the Hive Warehouse Directory (used for storing the table data for managed tables).

Prerequisites

- You must have an existing bucket. For instructions on how to create a bucket on S3, refer to AWS documentation.

- The instance profile that you configured under Configure access to S3 must allow access to the bucket.

Steps

- When creating a cluster, on the Cloud Storage page in the advanced cluster wizard view, select Use existing instance profile and select the instance profile to use, as described in Configure access to S3.

- Under Storage Locations, enable Configure Storage Locations by clicking the button.

- Provide your existing bucket name under Base Storage Location.

- Under Path for Hive Warehouse Directory property (hive.metastore.warehouse.dir), Cloudbreak automatically suggests a location within the bucket. For example, if the bucket that you specified is "my-test-bucket", then the suggested location will be "my-test-bucket/apps/hive/warehouse". You may optionally update this path.

  Cloudbreak automatically creates this directory structure in your bucket.
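The naming pattern in the example above can be reproduced with a short shell helper, for instance to predict the path before creating a cluster. This sketch only mirrors the documented example; `hive_warehouse_location` is a hypothetical name, and how Cloudbreak derives the suggestion internally is not described here.

```shell
# Hypothetical helper: mirror the suggested Hive warehouse location for a bucket.
hive_warehouse_location() {
  # Strip one trailing slash from the bucket name, if present.
  bucket="${1%/}"
  printf '%s/apps/hive/warehouse\n' "$bucket"
}

hive_warehouse_location my-test-bucket
# prints: my-test-bucket/apps/hive/warehouse
```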