Creating Highly Available Clusters With Cloudera Director

Limitations and Restrictions

- The procedure described in this section works with Cloudera Director 2.0 or higher and Cloudera Manager 5.5 or higher.

- Cloudera Director does not support migrating a cluster from a non-high availability setup to a high availability setup.

- Cloudera recommends sizing the master nodes large enough to support the desired final cluster size.

- Settings must comply with the configuration requirements described below and in the aws.ha.reference.conf file. Incorrect configurations can result in failures during initial bootstrap.

Editing the Configuration File to Launch a Highly Available Cluster

- Download the sample configuration file aws.ha.reference.conf from the Cloudera GitHub site. The cluster section of the file shows the role assignments and required configurations for the services where high availability is supported. The file includes comments that explain the configurations and requirements.

- Copy the sample file to your home directory before editing it. Rename the aws.ha.reference.conf file, for example, to ha.cluster.conf. The configuration file must use the .conf file extension. Open the configuration file with a text editor.

- Edit the file to supply your cloud provider credentials and other details about the cluster. A highly available cluster has additional requirements, as seen in the sample aws.ha.reference.conf file. These requirements include duplicating the master roles for highly available services.

The sample configuration file includes a set of instance groups for the services where high availability is supported. An instance group specifies the set of roles that are installed together on an instance in the cluster. The master roles in the sample aws.ha.reference.conf file are included in four instance groups, each containing particular roles. The names of the instance groups are arbitrary, but the names used in the sample file are hdfsmasters-1, hdfsmasters-2, masters-1, and masters-2. You can create multiple instances in the cluster by setting the value of the count field for the instance group. The sample file is configured for two hdfsmasters-1 instances, one hdfsmasters-2 instance, two masters-1 instances, and one masters-2 instance.
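To make the effect of the count field concrete, the following minimal Python sketch totals role instances across instance groups. The group names and counts are the ones described above. The role lists per group and the dictionary layout are assumptions made for illustration only; consult aws.ha.reference.conf for the actual assignments.

```python
from collections import defaultdict

# Instance group -> count and roles installed on every instance of the group.
# Names and counts follow the text above; the role lists are illustrative
# assumptions, not copied from aws.ha.reference.conf.
INSTANCE_GROUPS = {
    "hdfsmasters-1": {
        "count": 2,
        "roles": {"HDFS": ["NAMENODE", "FAILOVERCONTROLLER", "JOURNALNODE"]},
    },
    "hdfsmasters-2": {"count": 1, "roles": {"HDFS": ["JOURNALNODE"]}},
    "masters-1": {
        "count": 2,
        "roles": {"YARN": ["RESOURCEMANAGER"], "ZOOKEEPER": ["SERVER"]},
    },
    "masters-2": {
        "count": 1,
        "roles": {"YARN": ["JOBHISTORY"], "ZOOKEEPER": ["SERVER"]},
    },
}


def total_roles(groups):
    """Return {service: {role: number of role instances}} for all groups."""
    totals = defaultdict(lambda: defaultdict(int))
    for group in groups.values():
        for service, roles in group["roles"].items():
            for role in roles:
                # Every instance in the group runs one copy of each role.
                totals[service][role] += group["count"]
    return {service: dict(roles) for service, roles in totals.items()}


totals = total_roles(INSTANCE_GROUPS)
print(totals["HDFS"])
```

Under these assumed role lists, two hdfsmasters-1 instances plus one hdfsmasters-2 instance yield two NAMENODE, two FAILOVERCONTROLLER, and three JOURNALNODE role instances.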

- HDFS

- Two NAMENODE roles.

- Three JOURNALNODE roles.

- Two FAILOVERCONTROLLER roles, each colocated to run on the same host as one of the NAMENODE roles (that is, included in the same instance group).

- One HTTPFS role if the cluster contains a Hue service.

- The NAMENODE nameservice, autofailover, and quorum journal name must be configured for high availability exactly as shown in the sample aws.ha.reference.conf file.

- Set the HDFS service-level configuration for fencing as shown in the sample aws.ha.reference.conf file:

    configs {
        # HDFS fencing should be set to true for HA configurations
        HDFS {
            dfs_ha_fencing_methods: "shell(true)"
        }
    }

- Three role instances are required for the HDFS JOURNALNODE role. This ensures a quorum for determining which is the active node and which are standbys.

For more information, see HDFS High Availability in the Cloudera Administration documentation.

- YARN

- Two RESOURCEMANAGER roles.

- One JOBHISTORY role.

For more information, see YARN (MRv2) ResourceManager High Availability in the Cloudera Administration documentation.

- ZooKeeper

- Three SERVER roles (recommended). There must be an odd number of servers, and a single server does not provide high availability.

- Three role instances are required for the ZooKeeper SERVER role. This ensures a quorum for determining which is the active node and which are standbys.

- HBase

- Two MASTER roles.

- Two HBASETHRIFTSERVER roles (needed for Hue).

For more information, see HBase High Availability in the Cloudera Administration documentation.

- Hive

- Two HIVESERVER2 roles.

- Two HIVEMETASTORE roles.

For more information, see Hive Metastore High Availability in the Cloudera Administration documentation.

- Hue

- Two HUESERVER roles.

- One HTTPFS role for the HDFS service.

For more information, see Hue High Availability in the Cloudera Administration documentation.

- Oozie

- Two SERVER roles.

- Oozie plug-ins must be configured for high availability exactly as shown in the sample aws.ha.reference.conf file. In addition to the required Oozie plug-ins, other Oozie plug-ins can be enabled. All Oozie plug-ins must be configured for high availability.

- Oozie requires a load balancer for high availability. Cloudera Director does not create or manage the load balancer. The load balancer must be configured with the IP addresses of the Oozie servers after the cluster completes bootstrapping.

For more information, see Oozie High Availability in the Cloudera Administration documentation.
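The role counts listed above can be checked mechanically before you launch a cluster. The following Python sketch is one hypothetical way to do that. It is not part of Cloudera Director; the function names and the input layout are assumptions, and it treats every listed count as exact. It also shows the majority arithmetic behind the odd-number rule: a quorum of n members needs n // 2 + 1 votes, so two members tolerate no more failures than one.

```python
# Role counts taken from the service list above.
REQUIRED = {
    "HDFS": {"NAMENODE": 2, "JOURNALNODE": 3, "FAILOVERCONTROLLER": 2},
    "YARN": {"RESOURCEMANAGER": 2, "JOBHISTORY": 1},
    "HBASE": {"MASTER": 2, "HBASETHRIFTSERVER": 2},
    "HIVE": {"HIVESERVER2": 2, "HIVEMETASTORE": 2},
    "HUE": {"HUESERVER": 2},
    "OOZIE": {"SERVER": 2},
}


def tolerated_failures(n):
    """Members a quorum of n can lose while a majority is still reachable."""
    return n - (n // 2 + 1)


def check_layout(roles):
    """roles: {service: {role: instance count}}. Returns a list of problems."""
    problems = []
    for service, required in REQUIRED.items():
        if service not in roles:
            continue  # only check services the cluster actually runs
        for role, want in required.items():
            have = roles[service].get(role, 0)
            if have != want:
                problems.append(f"{service}.{role}: expected {want}, found {have}")
    # HTTPFS is required only when the cluster contains a Hue service.
    if "HUE" in roles and roles.get("HDFS", {}).get("HTTPFS", 0) != 1:
        problems.append("HDFS.HTTPFS: expected 1 because Hue is present")
    # ZooKeeper needs an odd number of servers that survives one failure.
    if "ZOOKEEPER" in roles:
        zk = roles["ZOOKEEPER"].get("SERVER", 0)
        if zk % 2 == 0 or tolerated_failures(zk) < 1:
            problems.append(f"ZOOKEEPER.SERVER: {zk} is not an odd number of at least 3")
    return problems


layout = {
    "HDFS": {"NAMENODE": 2, "JOURNALNODE": 3, "FAILOVERCONTROLLER": 2},
    "ZOOKEEPER": {"SERVER": 2},
}
print(check_layout(layout))
```

An empty list means the layout matches the counts in this section; it does not verify the nameservice, fencing, or Oozie plug-in settings, which must still match the sample file.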

- The following requirements apply to databases for your cluster:

- You can configure external databases for use by the services in your cluster and for Cloudera Director. If no databases are specified in the configuration file, an embedded PostgreSQL database is used.

- External databases can be set up by Cloudera Director, or you can configure preexisting external databases to be used. Databases set up by Cloudera Director are specified in the databaseTemplates block of the configuration file. Preexisting databases are specified in the databases block of the configuration file. External databases for the cluster must be either all preexisting databases or all databases set up by Cloudera Director; a combination of these is not supported.

- Hue, Oozie, and the Hive metastore each require a database.

- Databases for highly available Hue, Oozie, and Hive services must themselves be highly available. An Amazon RDS MySQL Multi-AZ deployment, whether preexisting or configured to be created by Cloudera Director, satisfies this requirement.
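The database rules above reduce to two checks: external databases must not mix the databaseTemplates and databases blocks, and Hue, Oozie, and Hive each need a database. The Python sketch below illustrates those checks under assumed inputs. The function name and the plain dictionaries standing in for the two configuration blocks are hypothetical, and the sketch does not verify that a database is itself highly available.

```python
# Services that the text above says each require a database.
NEEDS_DATABASE = ("HUE", "OOZIE", "HIVE")


def check_databases(services, templates, preexisting):
    """Check the database rules for a highly available cluster.

    services:    set of service names in the cluster
    templates:   {service: name} for databases Cloudera Director sets up
                 (stand-in for the databaseTemplates block)
    preexisting: {service: name} for databases that already exist
                 (stand-in for the databases block)
    """
    problems = []
    if templates and preexisting:
        problems.append(
            "external databases must be all preexisting or all set up by "
            "Cloudera Director, not a combination"
        )
    for service in NEEDS_DATABASE:
        if service not in services:
            continue
        if service not in templates and service not in preexisting:
            problems.append(f"{service}: no external database configured")
    return problems


print(check_databases({"HIVE", "HUE"}, {"HIVE": "hive-template"}, {"HUE": "hue-db"}))
```

The example call mixes a template with a preexisting database, so it reports the unsupported combination.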

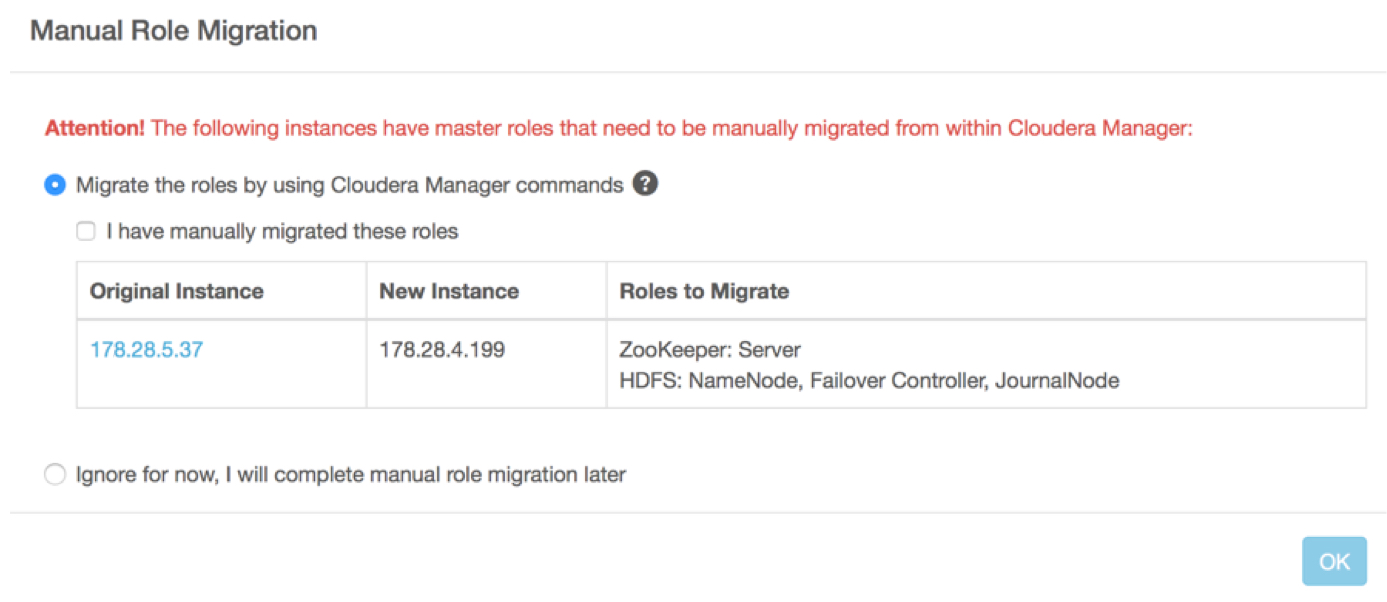

Using Role Migration to Repair HDFS Master Role Instances

Cloudera Director supports exact one-for-one host replacement for HDFS master role instances. This is a partially manual process that requires migration of the roles in Cloudera Manager. If a host running HDFS master roles (NameNode, Failover Controller, and JournalNode) fails in a highly available cluster, you can use Cloudera Director and the Cloudera Manager Role Migration wizard to move the roles to another host without losing the role states, if any. The previously standby instance of each migrated role runs as the active instance. When the migration is completed, the role that runs on the new host becomes the standby instance.

- Do not modify any instance groups on the cluster during the repair and role migration process.

- Do not clone the cluster during the repair and role migration process.

- To complete the migration (Step 3 below), click a checkbox to indicate that the migration is done, after which the old instance is terminated. Check Cloudera Manager to ensure that the old host has no roles or data on it before performing this step in Cloudera Director. Once the old instance is terminated, any information or state it contained is lost.

- If you have completed Step 1 (on Cloudera Director) and intend to complete Step 2 (on Cloudera Manager) at a later time, you can confirm which IP address to migrate from or to by going to the cluster status page in Cloudera Director and clicking either the link for migration in the upper left or the Modify Cluster button on the right. A popup displays the hosts to migrate from and to.

- You do not need to check the boxes to restart and deploy client configuration at the start of the repair process. You restart and deploy the client configuration manually after role migration is complete.

- Do not attempt repair for non-highly available master roles. The Cloudera Manager Role Migration wizard works only for highly available HDFS roles.

Step 1: In Cloudera Director, Create a New Instance

- In Cloudera Director, click the cluster name and click Modify Cluster.

- Click the checkbox next to the IP address of the failed instance (containing the HDFS NameNode and colocated Failover Controller, and possibly a JournalNode). Click Repair.

- Click OK. You do not need to select Restart Cluster at this time, because you will restart the cluster after migrating the HDFS master roles.

Cloudera Director creates a new instance on a new host, installs the Cloudera Manager agent on the instance, and copies the Cloudera Manager parcels to it.

Step 2: In Cloudera Manager, Migrate Roles and Data

Open the cluster in Cloudera Manager. On the Hosts tab, you see a new instance with no roles. The cluster is in an intermediate state, containing the new host to which the roles will be migrated and the old host from which the roles will be migrated.

Use the Cloudera Manager Migrate Roles wizard to move the roles.

See Moving Highly Available NameNode, Failover Controller, and JournalNode Roles Using the Migrate Roles Wizard in the Cloudera Administration guide.

Step 3: In Cloudera Director, Delete the Old Instance

- Return to the cluster in Cloudera Director.

- Click Details. The message "Attention: Cluster requires manual role migration" is displayed. Click More Details.

- Check the box labeled "I have manually migrated these roles."

- Click OK.

The failed instance is deleted from the cluster.
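The ordering of the three steps matters because deleting the old instance is irreversible. As a rough illustration only, the Python sketch below models the precondition for Step 3: the roles must already have been migrated and the old host must no longer carry any roles. The names are hypothetical and nothing here calls Cloudera Director or Cloudera Manager; you would still confirm in Cloudera Manager that the old host holds no roles or data.

```python
from enum import Enum, auto


class RepairStep(Enum):
    NEW_INSTANCE_CREATED = auto()  # Step 1, in Cloudera Director
    ROLES_MIGRATED = auto()        # Step 2, in Cloudera Manager
    OLD_INSTANCE_DELETED = auto()  # Step 3, in Cloudera Director


def safe_to_delete_old_instance(last_completed, roles_on_old_host):
    """True only after Step 2 is done and the old host has no roles left."""
    return last_completed is RepairStep.ROLES_MIGRATED and not roles_on_old_host


# Step 2 not yet done and the NameNode still on the old host: do not delete.
print(safe_to_delete_old_instance(RepairStep.NEW_INSTANCE_CREATED, ["NAMENODE"]))
# Roles migrated and the old host is empty: Step 3 can proceed.
print(safe_to_delete_old_instance(RepairStep.ROLES_MIGRATED, []))
```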