Configuring access to WASB

Hortonworks Data Platform (HDP) supports reading and writing block blobs and page blobs from and to the Windows Azure Storage Blob (WASB) object store, as well as reading and writing files stored in an Azure Data Lake Storage (ADLS) account.

Windows Azure Storage Blob (WASB) is an object store service available on Azure. WASB is not supported as a default file system, but access to data in WASB is possible via the wasb connector.

These steps assume that you are using an HDP version that supports the wasb cloud storage connector (HDP 2.6.1 or newer).

Prerequisites

If you want to use Windows Azure Storage Blob to store your data, you must enable your Azure subscription for Blob Storage and then create a storage account.

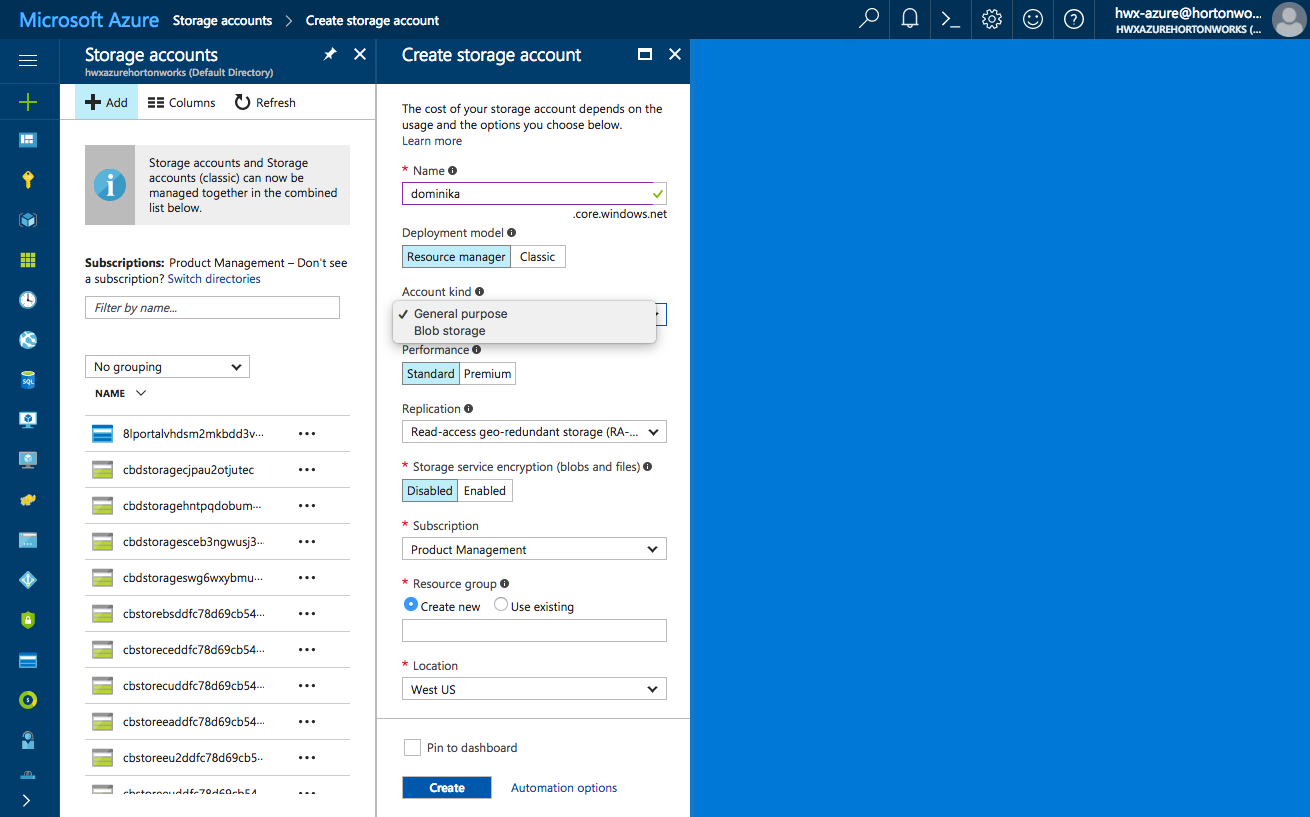

Here is an example of how to create a a storage account:

Configure access to WASB

To access data stored in your Azure blob storage account, obtain your access key from your storage account settings, and then provide the storage account name and its corresponding access key when creating a cluster.

Steps

-

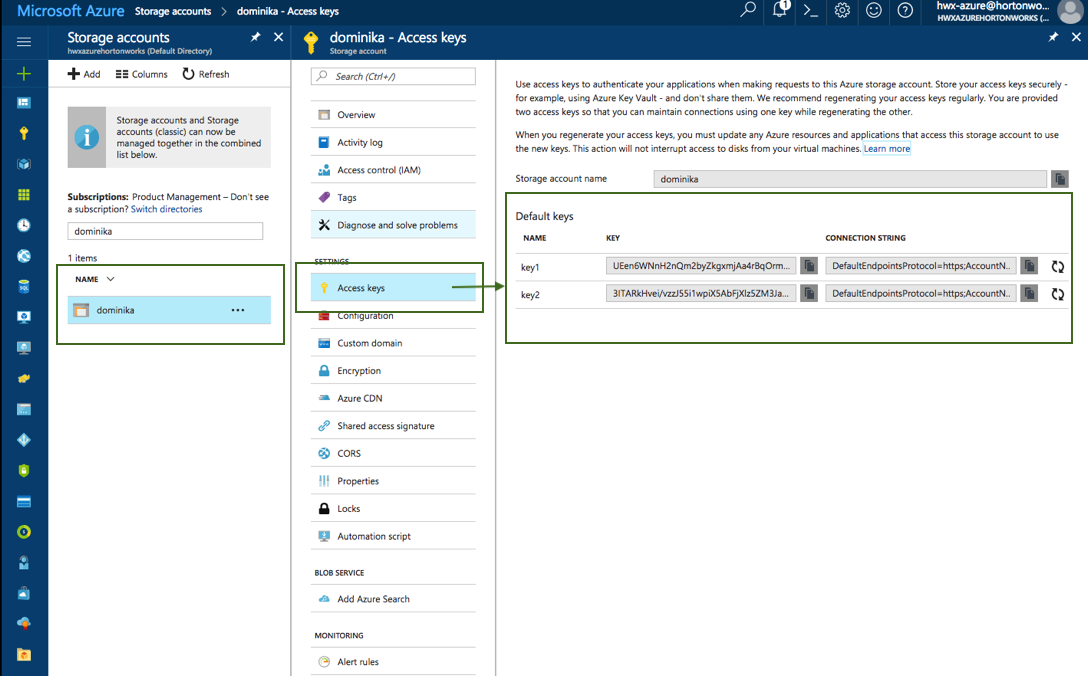

Obtain your storage account name and storage access key from the Access keys page in your storage account settings:

-

In Cloudbreak web UI, on the advanced Cloud Storage page of the create a cluster wizard, select Use existing WASB storage.

-

Provide the Storage Account Name and Access Key parameters obtained in the previous step.

Once your cluster is in the running state, you will be able to access the Azure blob storage account from the cluster nodes.
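For reference, the Hadoop WASB connector (hadoop-azure) looks up a storage account's access key in a configuration property named fs.azure.account.key.<storage_account_name>.blob.core.windows.net. The Python sketch below renders that property as a core-site.xml fragment from an account name and key. It assumes, without this page confirming it, that the values entered in Cloudbreak end up in this property; the helper name core_site_fragment and the placeholder key are hypothetical.

```python
import xml.etree.ElementTree as ET


def core_site_fragment(account_name: str, access_key: str) -> str:
    """Return a <property> element that hands a WASB access key to Hadoop.

    Hypothetical helper for illustration; assumes the standard hadoop-azure
    property naming fs.azure.account.key.<account>.blob.core.windows.net.
    """
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = (
        f"fs.azure.account.key.{account_name}.blob.core.windows.net"
    )
    ET.SubElement(prop, "value").text = access_key
    # encoding="unicode" returns a str without an XML declaration.
    return ET.tostring(prop, encoding="unicode")


if __name__ == "__main__":
    # Placeholder key; never commit a real access key to source control.
    print(core_site_fragment("myaccount", "PLACEHOLDER_KEY"))
```

The key is stored in plain text in such a fragment, so treat any file containing it as a secret.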

Test access to WASB

To test access to WASB, SSH to a cluster node and run a few hadoop fs shell commands against your existing WASB account.

The WASB access path syntax is:

wasb://container_name@storage_account_name.blob.core.windows.net/dir/file

For example, to access a file called "testfile" located in a directory called "testdir", stored in the container called "testcontainer" on the account called "hortonworks", the URL is:

wasb://testcontainer@hortonworks.blob.core.windows.net/testdir/testfile
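The path syntax maps directly onto URL components: the container is the user-info part before the @, the host is the account's blob endpoint, and the rest is the path inside the container. A minimal Python sketch of that decomposition using the standard library's urllib.parse; the helper name parse_wasb_uri is hypothetical, and it assumes the public blob.core.windows.net endpoint shown above.

```python
from urllib.parse import urlsplit

BLOB_SUFFIX = ".blob.core.windows.net"


def parse_wasb_uri(uri: str) -> tuple[str, str, str]:
    """Split a wasb:// or wasbs:// URI into (container, account, path)."""
    parts = urlsplit(uri)
    if parts.scheme not in ("wasb", "wasbs"):
        raise ValueError(f"not a WASB URI: {uri}")
    host = parts.hostname or ""
    # The container travels in the user-info slot of the URL.
    if not parts.username or not host.endswith(BLOB_SUFFIX):
        raise ValueError(f"expected container@account{BLOB_SUFFIX}: {uri}")
    account = host[: -len(BLOB_SUFFIX)]
    return parts.username, account, parts.path


print(parse_wasb_uri(
    "wasb://testcontainer@hortonworks.blob.core.windows.net/testdir/testfile"
))
# prints ('testcontainer', 'hortonworks', '/testdir/testfile')
```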

You can also use the "wasbs" prefix for SSL-encrypted HTTPS access:

wasbs://container_name@storage_account_name.blob.core.windows.net/dir/file
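A short Python sketch can also assemble a path from its three parts and switch between the wasb and wasbs schemes. The helper name wasb_uri and its secure flag are hypothetical; the function only builds a string and does not check that the container or account exists.

```python
def wasb_uri(container: str, account: str, path: str = "",
             secure: bool = False) -> str:
    """Build wasb[s]://container@account.blob.core.windows.net/path."""
    scheme = "wasbs" if secure else "wasb"
    # Strip leading slashes so exactly one slash separates host and path.
    return (f"{scheme}://{container}@{account}.blob.core.windows.net/"
            f"{path.lstrip('/')}")


print(wasb_uri("testcontainer", "hortonworks", "testdir/testfile"))
# prints wasb://testcontainer@hortonworks.blob.core.windows.net/testdir/testfile
print(wasb_uri("testcontainer", "hortonworks", "/testdir/testfile", secure=True))
# prints wasbs://testcontainer@hortonworks.blob.core.windows.net/testdir/testfile
```

With the default empty path the result is the container root, which is the form used with hadoop fs -ls.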

The following Hadoop FileSystem shell commands demonstrate access to a storage account named "myaccount" and a container named "mycontainer"; the last line is the output of the -cat command:

hadoop fs -ls wasb://mycontainer@myaccount.blob.core.windows.net/
hadoop fs -mkdir wasb://mycontainer@myaccount.blob.core.windows.net/testDir
hadoop fs -put testFile wasb://mycontainer@myaccount.blob.core.windows.net/testDir/testFile
hadoop fs -cat wasb://mycontainer@myaccount.blob.core.windows.net/testDir/testFile
test file content
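The same four checks can be scripted so they are easy to rerun after a cluster is created. This Python sketch builds the hadoop fs argument lists shown above and runs them with subprocess only when a hadoop executable is found on the PATH; otherwise it just prints them. The function names, the default local file name testFile, and the dry-run behaviour are assumptions for illustration, and the script expects to run on a cluster node where the WASB connector is already configured.

```python
import shlex
import shutil
import subprocess


def smoke_test_commands(container: str, account: str,
                        local_file: str = "testFile") -> list[list[str]]:
    """Return the hadoop fs invocations used to exercise a WASB container."""
    root = f"wasb://{container}@{account}.blob.core.windows.net/"
    target = f"{root}testDir/{local_file}"
    return [
        ["hadoop", "fs", "-ls", root],
        ["hadoop", "fs", "-mkdir", f"{root}testDir"],
        ["hadoop", "fs", "-put", local_file, target],
        ["hadoop", "fs", "-cat", target],
    ]


def run(commands: list[list[str]]) -> None:
    if shutil.which("hadoop") is None:
        # Dry run: no Hadoop client on this machine, so only show the commands.
        for cmd in commands:
            print(shlex.join(cmd))
        return
    for cmd in commands:
        # check=True stops at the first failing command (e.g. bad key or container).
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(smoke_test_commands("mycontainer", "myaccount"))
```

Because -mkdir and -put refuse to overwrite existing paths, remove testDir (for example with hadoop fs -rm -r) before rerunning the script against the same container.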

For more information about configuring the WASB connector and working with data stored in WASB, refer to the Cloud Data Access documentation.

Related links

Cloud Data Access (Hortonworks)

Create a storage account (External)

Configure WASB storage locations

After configuring access to WASB, you can optionally use that WASB storage account as a base storage location. This storage location is used mainly for the Hive Warehouse Directory, which stores the table data for managed tables.

Steps

- When creating a cluster, on the Cloud Storage page in the advanced cluster wizard view, select Use existing WASB storage and provide the storage account name and access key, as described in Configure access to WASB.

- Under Storage Locations, enable Configure Storage Locations by clicking the button.

- Provide your existing directory name under Base Storage Location. Make sure that the container exists within the account.

- Under the Path for Hive Warehouse Directory property (hive.metastore.warehouse.dir), Cloudbreak automatically suggests a location within the container. For example, if the directory that you specified is my-test-container, then the suggested location is my-test-container@my-wasb-account.blob.core.windows.net/apps/hive/warehouse. You may optionally update this path. Cloudbreak automatically creates this directory structure in your container.
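The suggested Hive warehouse location follows a simple pattern: the base container, the account's blob endpoint, and the fixed suffix /apps/hive/warehouse. The Python sketch below reproduces it. Prepending the wasb:// scheme to form a full hive.metastore.warehouse.dir value is an assumption, since the example above shows the location without a scheme, and the helper name suggested_warehouse_dir is hypothetical.

```python
WAREHOUSE_SUFFIX = "apps/hive/warehouse"


def suggested_warehouse_dir(container: str, account: str,
                            with_scheme: bool = True) -> str:
    """Mirror the location Cloudbreak suggests for hive.metastore.warehouse.dir."""
    location = f"{container}@{account}.blob.core.windows.net/{WAREHOUSE_SUFFIX}"
    # Assumption: a scheme is needed so Hive resolves the path on WASB
    # rather than on the cluster's default file system.
    return f"wasb://{location}" if with_scheme else location


print(suggested_warehouse_dir("my-test-container", "my-wasb-account",
                              with_scheme=False))
# prints my-test-container@my-wasb-account.blob.core.windows.net/apps/hive/warehouse
```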