Configuring access to Google Cloud Storage (GCS)

Google Cloud Storage (GCS) is not supported as a default file system, but access to data in GCS is possible via the gs connector. Use these steps to configure access from your cluster to GCS.

These steps assume that you are using an HDP version that supports the gs cloud storage connector (HDP 2.6.5 introduced this feature as a technical preview).
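To check the version prerequisite on a cluster node, you can compare the installed HDP version with 2.6.5. The following is a minimal sketch, assuming that version strings look like `2.6.5.0-292` (numeric fields plus an optional build suffix). The function name `hdp_version_at_least` is hypothetical and is not part of HDP.

```shell
#!/usr/bin/env bash
# Sketch: succeed if HAVE >= WANT, comparing dot-separated numeric fields.
# Assumption: versions look like "2.6.5.0-292"; the "-<build>" suffix is ignored
# and missing fields count as 0 (so 2.6.5 == 2.6.5.0).
hdp_version_at_least() {
  awk -v have="${1%%-*}" -v want="${2%%-*}" 'BEGIN {
    nh = split(have, h, "."); nw = split(want, w, ".")
    n = (nh > nw) ? nh : nw
    for (i = 1; i <= n; i++) {
      a = (i <= nh) ? h[i] + 0 : 0
      b = (i <= nw) ? w[i] + 0 : 0
      if (a > b) exit 0   # first differing field decides
      if (a < b) exit 1
    }
    exit 0                # all fields equal
  }'
}
```

For example, `hdp_version_at_least "$(hdp-select versions | tail -n 1)" 2.6.5 && echo supported` would test the last version that `hdp-select` lists. Treat that `hdp-select` invocation as an assumption and check which version is actually active on your nodes.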

Prerequisites

Access to Google Cloud Storage is via a service account. The service account that you provide to Cloudbreak for GCS data access must have the following permissions:

-

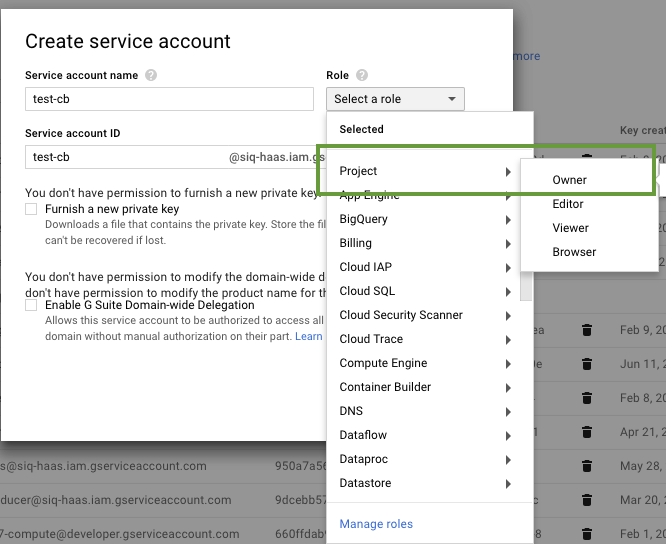

The service account must have the project-wide Owner role:

-

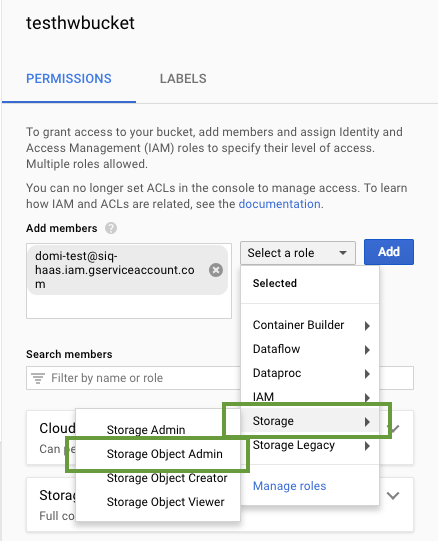

The service account must have the Storage Object Admin role for the bucket that you are planning to use. You can set this in the bucket's permissions settings:
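If you prefer the command line to the console, the two role grants above can be sketched with the Google Cloud CLI. This assumes a recent CLI that includes the `gcloud storage` command group; the project ID, service-account email, and bucket name are placeholders, and `grant_gcs_roles` and `run` are hypothetical helpers. By default the sketch only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: grant the two roles listed above to the service account.
# Assumptions: a recent Google Cloud CLI with the "gcloud storage" command group;
# PROJECT_ID, SA_EMAIL and BUCKET are placeholders you supply.

# run: print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# grant_gcs_roles PROJECT_ID SA_EMAIL BUCKET (hypothetical helper)
grant_gcs_roles() {
  local project="$1" sa_email="$2" bucket="$3"

  # Project-wide Owner role
  run gcloud projects add-iam-policy-binding "$project" \
    --member="serviceAccount:${sa_email}" --role="roles/owner" || return 1

  # Storage Object Admin role, scoped to the bucket only
  run gcloud storage buckets add-iam-policy-binding "gs://${bucket}" \
    --member="serviceAccount:${sa_email}" --role="roles/storage.objectAdmin"
}
```

For example, `grant_gcs_roles my-project cloudbreak-gcs@my-project.iam.gserviceaccount.com my-test-bucket` prints both commands for review, and `DRY_RUN=0 grant_gcs_roles ...` runs them. The dry-run default is a deliberate choice because the Owner role is very broad.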

Configure access to GCS

Access to Google Cloud Storage is via a service account.

Steps

- On the Cloud Storage page in the advanced cluster wizard view, select Use existing GCS storage.

- Under Service Account Email Address, provide the email address of the service account that Cloudbreak should use for GCS access.

Once your cluster is in the running state, you should be able to access the buckets that the configured service account has access to.
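To find the address to enter under Service Account Email Address, you can list the service accounts in your project and sanity-check the one you pick. This is a minimal sketch, assuming an installed and authenticated Google Cloud CLI; both function names are hypothetical, and the format check does not prove that the account has the required roles.

```shell
#!/usr/bin/env bash
# Sketch: find and sanity-check the address to enter under
# "Service Account Email Address". Both function names are hypothetical.

# list_service_account_emails PROJECT_ID: print one service-account email per line
list_service_account_emails() {
  gcloud iam service-accounts list --project="$1" --format='value(email)'
}

# is_service_account_email EMAIL: succeed if EMAIL has the shape of a Google
# service-account address, e.g. NAME@PROJECT_ID.iam.gserviceaccount.com or a
# default account under developer.gserviceaccount.com / appspot.gserviceaccount.com
is_service_account_email() {
  case "$1" in
    *@*.gserviceaccount.com) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `list_service_account_emails my-project` prints candidate addresses, and `is_service_account_email "$addr"` catches an ordinary user email pasted by mistake.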

Testing access to GCS

Test access to the Google Cloud Storage bucket by running a few commands from any cluster node. For example, you can use the following command (replace “my-test-bucket” with the name of your bucket):

hadoop fs -ls gs://my-test-bucket/
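A listing only proves read access. The sketch below extends the check to a write, a read-back, and a delete using standard `hadoop fs` subcommands. It assumes you run it on a cluster node as a user allowed to write to the bucket; the scratch path `tmp/gcs-access-check` and the function name `gcs_smoke_test` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the bucket, then write, read back and delete a small file
# under a scratch directory. The scratch path and function name are hypothetical.
gcs_smoke_test() {
  local bucket="$1"
  local dir="gs://${bucket}/tmp/gcs-access-check"
  local local_file
  local_file="$(mktemp)" || return 1
  echo "gcs access check" > "$local_file"

  # Stop at the first failing command; its exit status becomes the result.
  hadoop fs -ls "gs://${bucket}/" &&
    hadoop fs -mkdir -p "$dir" &&
    hadoop fs -put -f "$local_file" "$dir/hello.txt" &&
    hadoop fs -cat "$dir/hello.txt" &&
    hadoop fs -rm -r -skipTrash "$dir"
  local status=$?

  rm -f "$local_file"
  return "$status"
}
```

Run it as `gcs_smoke_test my-test-bucket`. If a step fails after the directory is created, the scratch directory may be left behind and can be removed manually.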

Configure GCS storage locations

After configuring access to GCS via a service account, you can optionally use a GCS bucket as a base storage location. This storage location is used mainly for the Hive Warehouse Directory, which stores the table data for managed tables.

Prerequisites

- You must have an existing bucket. For instructions on how to create a bucket on GCS, refer to the GCP documentation.

- The service account that you configured under Configure access to GCS must allow access to the bucket.
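Both prerequisites can be checked from a workstation before you start the wizard. The sketch below is a heuristic, assuming an authenticated Google Cloud CLI. `bucket_ready` is a hypothetical helper, and its second check only looks for the service account in the bucket's own IAM policy, so it does not verify the bound role.

```shell
#!/usr/bin/env bash
# Sketch: check the storage-location prerequisites. bucket_ready is hypothetical.
# Exit status: 0 = ok, 1 = bucket missing or not visible, 2 = no bucket-level binding.
bucket_ready() {
  local bucket="$1" sa_email="$2"

  # 1. The bucket must already exist (and be visible to the caller).
  if ! gcloud storage buckets describe "gs://${bucket}" > /dev/null 2>&1; then
    echo "gs://${bucket}: not found or not accessible" >&2
    return 1
  fi

  # 2. Heuristic: look for the service account in the bucket's IAM policy.
  #    It does not check which role is bound, and project-level grants are
  #    not visible here.
  if ! gcloud storage buckets get-iam-policy "gs://${bucket}" --format=json \
      | grep -qF "serviceAccount:${sa_email}"; then
    echo "gs://${bucket}: no bucket-level binding for ${sa_email}" >&2
    return 2
  fi
  return 0
}
```

For example, `bucket_ready my-test-bucket cloudbreak-gcs@my-project.iam.gserviceaccount.com` returns 0 when both checks pass.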

Steps

- When creating a cluster, on the Cloud Storage page in the advanced cluster wizard view, select Use existing GCS storage and select the existing service account, as described in Configure access to GCS.

- Under Storage Locations, enable Configure Storage Locations by clicking the button.

- Provide your existing bucket name under Base Storage Location.

- Under Path for Hive Warehouse Directory property (hive.metastore.warehouse.dir), Cloudbreak automatically suggests a location within the bucket. For example, if the bucket that you specified is my-test-bucket, then the suggested location will be my-test-bucket/apps/hive/warehouse. You may optionally update this path. Cloudbreak automatically creates this directory structure in your bucket.
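The suggested location follows a simple pattern, which makes it easy to verify after the cluster starts. This sketch assumes that the property value is the `gs://` URI of `<bucket>/apps/hive/warehouse`. The wizard shows the path without the scheme, and `suggest_warehouse_dir` is a hypothetical helper, not a Cloudbreak command.

```shell
#!/usr/bin/env bash
# Sketch: derive the default Hive warehouse location for a bucket.
# Assumption: the property value is gs://<bucket>/apps/hive/warehouse.
suggest_warehouse_dir() {
  local bucket="${1#gs://}"   # accept "my-test-bucket" or "gs://my-test-bucket"
  bucket="${bucket%/}"        # drop one trailing slash, if any
  printf 'gs://%s/apps/hive/warehouse\n' "$bucket"
}
```

Once the cluster is running, `hadoop fs -ls "$(suggest_warehouse_dir my-test-bucket)"` from a cluster node should succeed if the directory was created as described. If you changed the path in the wizard, list that path instead.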