Resource Management

- Guarantee completion in a reasonable time frame for critical workloads

- Support reasonable cluster scheduling between groups of users based on fair allocation of resources per group

- Prevent users from depriving other users of access to the cluster


Schedulers

A scheduler is responsible for deciding which tasks get to run and where and when to run them. The MapReduce and YARN computation frameworks support the following schedulers:

- FIFO - Allocates resources based on arrival time.

- Fair - Allocates resources to weighted pools, with fair sharing within each pool.

- Capacity - Allocates resources to pools, with FIFO scheduling within each pool.
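
The difference between arrival-order and weighted allocation can be illustrated with a toy model. The sketch below is not how the Hadoop schedulers are implemented; the function names, job names, and capacity figures are hypothetical and chosen only for illustration.

```python
def fifo_allocate(requests, capacity):
    """Grant requests strictly in arrival order until capacity runs out.

    `requests` is a list of (name, demand) pairs, already sorted by arrival.
    """
    grants = {}
    remaining = capacity
    for name, demand in requests:
        granted = min(demand, remaining)
        grants[name] = granted
        remaining -= granted
    return grants


def weighted_fair_allocate(weights, capacity):
    """Split capacity across pools in proportion to their weights."""
    total_weight = sum(weights.values())
    return {pool: capacity * w / total_weight for pool, w in weights.items()}


# Hypothetical demands against 100 units of capacity.
fifo_result = fifo_allocate([("job_a", 60), ("job_b", 60), ("job_c", 10)], 100)

# Hypothetical pools sharing 60 units of capacity by weight.
fair_result = weighted_fair_allocate({"dev": 3, "product": 2, "mktg": 1}, 60)
```

In the FIFO case the late-arriving `job_c` receives nothing even though its demand is small, which is the starvation behavior that weighted pools are meant to avoid.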

The scheduler defaults for MapReduce and YARN are:

- MapReduce - Cloudera Manager, CDH 5, and CDH 4 set the default scheduler to FIFO. FIFO is set as the default for backward-compatibility purposes, but Cloudera recommends Fair Scheduler because Impala and Llama are optimized for it. Capacity Scheduler is also available.

If you are running CDH 4, you can specify how MapReduce jobs share resources by configuring the MapReduce scheduler.

- YARN - Cloudera Manager, CDH 5, and CDH 4 set the default to Fair Scheduler. Cloudera recommends Fair Scheduler because Impala and Llama are optimized for it. FIFO and Capacity Scheduler are also available.

In YARN, the scheduler is responsible for allocating resources to the various running applications, subject to the familiar constraints of capacities, queues, and so on. The scheduler performs its scheduling function based on the resource requirements of the applications. It does so using the abstract notion of a resource container, which incorporates elements such as memory, CPU, disk, network, and so on.

The YARN scheduler has a pluggable policy, which is responsible for partitioning the cluster resources among the various queues, applications, and so on.

If you are running CDH 5, you can specify how YARN applications share resources by manually configuring the YARN scheduler. Alternatively, you can use the Cloudera Manager dynamic allocation features to manage the scheduler configuration.

Cloudera Manager Resource Management

Cloudera Manager provides two methods for allocating cluster resources to services: static and dynamic.

Static Allocation

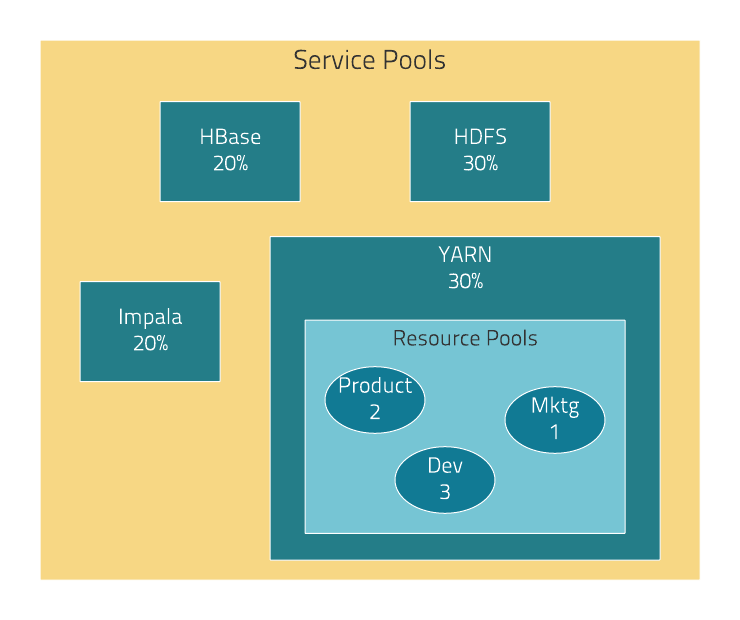

Cloudera Manager 4 introduced the ability to partition resources across HBase, HDFS, Impala, MapReduce, and YARN services by allowing you to set configuration properties that were enforced by Linux control groups (Linux cgroups). With Cloudera Manager 5, the ability to statically allocate resources using cgroups is configurable through a single static service pool wizard. You allocate services a percentage of total resources and the wizard configures the cgroups.

For example, the figure above illustrates static pools for the HBase, HDFS, Impala, and YARN services, which are assigned 20%, 30%, 20%, and 30% of cluster resources, respectively.
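
As a rough illustration of what these percentages mean in absolute terms, the sketch below splits a resource total across the four static pools. It does not model cgroups or the wizard itself. The 1000 GB total and the function name `split_resource` are assumptions made up for the example; only the percentages come from the text.

```python
# Static pool percentages from the example in the text.
static_pools = {"HBase": 20, "HDFS": 30, "Impala": 20, "YARN": 30}


def split_resource(total, pools):
    """Divide `total` units of a resource according to percentage shares."""
    if sum(pools.values()) != 100:
        raise ValueError("static pool percentages must sum to 100")
    return {service: total * pct / 100 for service, pct in pools.items()}


# Hypothetical cluster with 1000 GB of memory in total.
memory_gb = split_resource(1000, static_pools)
```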

Dynamic Allocation

Cloudera Manager lets you use dynamic resource pools to dynamically apportion the resources that are statically allocated to YARN and Impala.

- (CDH 5) YARN Independent RM - YARN manages the virtual cores, memory, running applications, and scheduling policy for each pool. In the preceding diagram, three dynamic resource pools - Dev, Product, and Mktg with weights 3, 2, and 1 respectively - are defined for YARN. If an application starts and is assigned to the Product pool, and other applications are using the Dev and Mktg pools, the Product resource pool will receive 30% x 2/6 (or 10%) of the total cluster resources. If there are no applications using the Dev and Mktg pools, the YARN Product pool will be allocated 30% of the cluster resources.

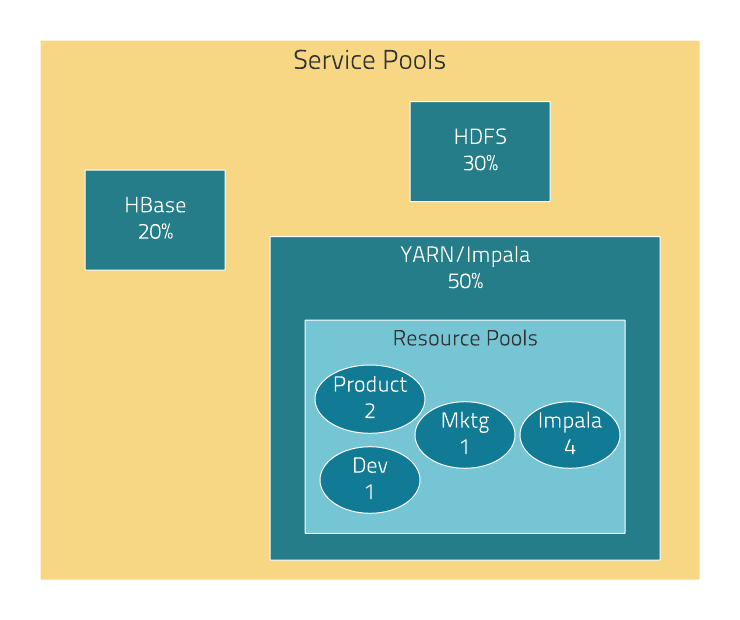

- (CDH 5) YARN and Impala Independent RM - YARN manages the virtual cores, memory, running applications, and scheduling policy for each pool; Impala manages memory for pools running queries and limits the number of running and queued queries in each pool.

- (CDH 5 and CDH 4) Impala Independent RM - Impala manages memory for pools running queries and limits the number of running and queued queries in each pool.

- (CDH 5) YARN and Impala Integrated RM - YARN manages memory for pools running Impala queries; Impala limits the number of running and queued queries in each pool. In the YARN and Impala integrated RM scenario, Impala services can reserve resources through YARN, effectively sharing the static YARN service pool and resource pools with YARN applications. The integrated resource management scenario, where both YARN and Impala use the YARN resource management framework, requires the Impala Llama role.

In the second figure above, the YARN and Impala services have a 50% static share, which is subdivided among the original resource pools, with an additional resource pool designated for the Impala service. If YARN applications are using all the original pools, and Impala uses its designated resource pool, Impala queries will have the same resource allocation (50% x 4/8 = 25%) as in the first scenario. However, when YARN applications are not using the original pools, Impala queries will have access to 50% of the cluster resources.
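
The share arithmetic in these scenarios can be checked with a short sketch. It assumes that a pool's share is the service's static share multiplied by the pool's weight over the sum of the weights of the pools currently in use, which matches the 30% x 2/6 example in the text. The function name `pool_share` is hypothetical.

```python
from fractions import Fraction


def pool_share(static_share, weights, active_pools, pool):
    """Fraction of the whole cluster that `pool` receives.

    Only pools with running applications (`active_pools`) compete for
    the service's static share.
    """
    if pool not in active_pools:
        return Fraction(0)
    active_weight = sum(weights[p] for p in active_pools)
    return static_share * Fraction(weights[pool], active_weight)


# Pool weights and the 30% YARN static share from the text.
weights = {"Dev": 3, "Product": 2, "Mktg": 1}
yarn_static = Fraction(30, 100)

# All three pools busy: 30% x 2/6 = 10% of the cluster.
all_busy = pool_share(yarn_static, weights, {"Dev", "Product", "Mktg"}, "Product")

# Only Product busy: the pool gets the full 30% static share.
product_alone = pool_share(yarn_static, weights, {"Product"}, "Product")

# Integrated scenario from the text: 50% x 4/8 = 25%.
integrated = Fraction(50, 100) * Fraction(4, 8)
```

Exact fractions are used instead of floats so that the results compare cleanly against the percentages quoted in the text.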

The scenarios where YARN manages resources, whether for independent RM or integrated RM, map to the YARN scheduler configuration. The scenarios where Impala independently manages resources employ the Impala admission control feature.
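
To make the idea of limiting running and queued queries per pool concrete, here is a toy model. It is not Impala's implementation, and real admission control also takes memory into account. The class name `PoolAdmission` and its parameters are hypothetical.

```python
from collections import deque


class PoolAdmission:
    """Toy per-pool limiter on running and queued queries."""

    def __init__(self, max_running, max_queued):
        self.max_running = max_running
        self.max_queued = max_queued
        self.running = set()
        self.queue = deque()

    def submit(self, query_id):
        """Run the query if a slot is free, else queue it, else reject it."""
        if len(self.running) < self.max_running:
            self.running.add(query_id)
            return "running"
        if len(self.queue) < self.max_queued:
            self.queue.append(query_id)
            return "queued"
        return "rejected"

    def finish(self, query_id):
        """Release a slot and promote the oldest queued query, if any."""
        self.running.discard(query_id)
        if self.queue and len(self.running) < self.max_running:
            self.running.add(self.queue.popleft())


pool = PoolAdmission(max_running=1, max_queued=1)
outcomes = [pool.submit(q) for q in ("q1", "q2", "q3")]
pool.finish("q1")  # q2 moves from the queue into the running set
```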

To submit a YARN application to a specific resource pool, specify the mapreduce.job.queuename property. The YARN application's queue property is mapped to a resource pool. To submit an Impala query to a specific resource pool, specify the REQUEST_POOL option.
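
As a sketch of how these two settings might be supplied, the helpers below build a `hadoop jar` command line and an Impala `SET` statement. The jar path, class name, pool names, and helper names are hypothetical. Passing the property with `-D` assumes the application parses Hadoop's generic options (for example, through `ToolRunner`); otherwise the property must be set in the job configuration some other way.

```python
def hadoop_jar_command(jar, main_class, pool, app_args=()):
    """Build an argv list that submits a job to the given resource pool."""
    return [
        "hadoop", "jar", jar, main_class,
        "-D", f"mapreduce.job.queuename={pool}",
        *app_args,
    ]


def impala_set_pool_statement(pool):
    """Build the statement that selects a pool for later queries in a session."""
    return f"SET REQUEST_POOL={pool};"


# Hypothetical jar, class, and pool names.
cmd = hadoop_jar_command("my-app.jar", "com.example.MyJob", "product", ["in", "out"])
stmt = impala_set_pool_statement("product")
```

The argv list can be handed to `subprocess.run` on a host with the Hadoop client installed, and the statement can be issued in an Impala session before the queries that should run in that pool.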