Managing YARN ACLs

An Access Control List (ACL) is a list of specific permissions or controls that allow individual users or groups to perform specific actions upon specific objects, and that define which operations are allowed on a given object. YARN ACLs do not deny access; rather, they identify a user, list of users, group, or list of groups who can access a particular object.

Like HDFS ACLs, YARN ACLs provide a way to set different permissions for specific named users or named groups. ACLs enhance the traditional permissions model by defining access control for arbitrary combinations of users and groups instead of a single owner/user or a single group.

YARN ACL Rules and Syntax

This section describes the rules governing YARN ACLs and includes syntax examples.

YARN ACL Rules

- Special Values:

  - The wildcard character (*) indicates that everyone has access.

  - A single space entry indicates that no one has access.

- If there are no spaces in an ACL, then all entries are treated as user names, and every listed user is authorized.

- Group names in YARN Resource Manager ACLs are case sensitive. If you specify an uppercase group name in the ACL, it does not match the group name resolved from Active Directory, because Active Directory group names are resolved in lowercase.

- If an ACL starts with a single space, then it must consist of groups only.

- All entries after the occurrence of a second single space in an ACL are ignored.

- There are no ACLs that deny access to a user or group. However, if you wish to block access to an operation entirely, enter a value for a non-existent user or group (for example, 'NOUSERS NOGROUPS'), or simply enter a single space. By doing so, you ensure that no user or group maps to a particular operation by default.

- If you wish to deny only a certain set of users and/or groups, specify every single user and/or group that requires access. Users and/or groups that are not included are "implicitly" denied access.
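The rules above can be sketched as a small Python parser. This is a minimal sketch that follows the rules as stated in this section; it is not Hadoop's own ACL implementation, and the names `ParsedAcl`, `parse_acl`, and `is_allowed` are hypothetical.

```python
from typing import FrozenSet, Iterable, NamedTuple


class ParsedAcl(NamedTuple):
    everyone: bool            # True when the ACL is the wildcard (*)
    users: FrozenSet[str]     # entries before the first space
    groups: FrozenSet[str]    # entries between the first and second space


def _names(part: str) -> FrozenSet[str]:
    # Split a comma-separated list and drop empty entries.
    return frozenset(name for name in part.split(",") if name)


def parse_acl(acl: str) -> ParsedAcl:
    if acl.strip() == "*":
        return ParsedAcl(True, frozenset(), frozenset())
    # parts[0] holds users, parts[1] holds groups; anything after a
    # second space is ignored, as the rules above describe.
    parts = acl.split(" ")
    users = _names(parts[0])
    groups = _names(parts[1]) if len(parts) > 1 else frozenset()
    return ParsedAcl(False, users, groups)


def is_allowed(acl: str, user: str, user_groups: Iterable[str]) -> bool:
    parsed = parse_acl(acl)
    if parsed.everyone:
        return True
    # Matching is case sensitive: "HR" does not match "hr".
    return user in parsed.users or bool(parsed.groups.intersection(user_groups))
```

Because there is no deny rule, a user who is absent from both lists is simply not matched, which is the implicit denial described above.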

YARN ACL Syntax

- Users only

user1,user2,userN

Use a comma-separated list of user names. Do not place spaces after the commas separating the users in the list.

- Groups only

<single space>HR,marketing,support

You must begin group-only ACLs with a single space. Group-only ACLs use the same syntax as user-only ACLs, except that each entry is a group name rather than a user name.

- Users and Groups

fred,alice,haley<single space>datascience,marketing,support

A comma-separated list of user names, followed by a single space, followed by a comma-separated list of group names. This sample ACL authorizes access to users “fred”, “alice”, and “haley”, and to those users in the groups “datascience”, “marketing”, and “support”.
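The three syntax forms can be produced by one small helper. The following is a sketch only; `format_acl` is a hypothetical function name, and the validation it performs (rejecting names that contain a space or a comma) is an assumption drawn from the syntax notes above.

```python
from typing import Sequence


def format_acl(users: Sequence[str] = (), groups: Sequence[str] = ()) -> str:
    """Build an ACL string: users, one space, then groups."""
    users, groups = list(users), list(groups)
    for name in users + groups:
        # A space or comma inside a name would change how the ACL is read.
        if not name or " " in name or "," in name:
            raise ValueError(f"invalid ACL entry: {name!r}")
    if not groups:
        # Users only; an ACL with no entries at all is the single space.
        return ",".join(users) if users else " "
    # With an empty user list this yields the leading space that a
    # group-only ACL requires.
    return ",".join(users) + " " + ",".join(groups)


print(repr(format_acl(["user1", "user2", "userN"])))
print(repr(format_acl(groups=["HR", "marketing", "support"])))
print(repr(format_acl(["fred", "alice", "haley"],
                      ["datascience", "marketing", "support"])))
```

Printing with `repr` makes the leading space of a group-only ACL visible, which is easy to lose when copying values by hand.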

Examples

<single space>my_group

*

john,jane<single space>HR

<single space>group_1,group_2,group_3,group_4,group_5

Activating YARN ACLs

In a default Cloudera Manager managed YARN deployment, ACL checks are turned on but do not provide any security, which means that any user can execute administrative commands or submit an application to any YARN queue. To provide security, the ACL must be changed from its default value, the wildcard character (*).

In non-Cloudera Manager managed clusters, the default value of the YARN ACL setting is false, so ACLs are turned off and do not provide any security out of the box. To turn on ACL checks, set the yarn.acl.enable property to true:

<property> <name>yarn.acl.enable</name> <value>true</value> </property>
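To confirm what a configuration file actually contains, the property can be read programmatically. The sketch below assumes a yarn-site.xml style document with a `<configuration>` root element; `SAMPLE_YARN_SITE`, `get_property`, and `acls_enabled` are hypothetical names used for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample content standing in for a real yarn-site.xml.
SAMPLE_YARN_SITE = """
<configuration>
  <property>
    <name>yarn.acl.enable</name>
    <value>true</value>
  </property>
</configuration>
"""


def get_property(xml_text, name, default=None):
    """Return the raw value of a named property, or default if absent."""
    root = ET.fromstring(xml_text.strip())
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            # The value is returned unstripped because spaces are
            # significant in ACL values.
            return prop.findtext("value") or ""
    return default


def acls_enabled(xml_text):
    # Assumes the non-Cloudera Manager default of false described above.
    value = get_property(xml_text, "yarn.acl.enable", "false")
    return value.strip().lower() == "true"


print(acls_enabled(SAMPLE_YARN_SITE))
```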

YARN ACLs are independent of HDFS or protocol ACLs, which secure communications between clients and servers at a low level.

YARN ACL Types

- YARN Admin ACL

(yarn.admin.acl)

- Queue ACL

(aclSubmitApps and aclAdministerApps)

- Application ACL

(mapreduce.job.acl-view-job and mapreduce.job.acl-modify-job)

YARN Admin ACL

Use the YARN Admin ACL to allow users to run YARN administrator sub-commands, which are executed via yarn rmadmin <command>.

The default YARN Admin ACL is set to the wildcard character (*), meaning all users and groups have YARN Administrator access and privileges. So after YARN ACL enforcement is enabled (via the yarn.acl.enable property), every user has YARN Administrator access. Unless you wish for all users to have YARN Admin ACL access, edit the yarn.admin.acl setting upon initial YARN configuration, and before enabling YARN ACLs.

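The following example yarn.admin.acl value grants access to the user hadoopadmin and to the groups yarnadmgroup and hadoopadmgroup:

hadoopadmin<single space>yarnadmgroup,hadoopadmgroup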
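Because an unchanged Admin ACL makes every user an administrator, it can be worth checking the value before turning on enforcement. The following is a minimal sketch that assumes the configuration has already been loaded into a plain dictionary; `admin_acl_is_open` and the sample dictionaries are hypothetical.

```python
from typing import Dict


def admin_acl_is_open(conf: Dict[str, str]) -> bool:
    """Return True if yarn.admin.acl grants administrator access to everyone."""
    # Per the text above, an unset yarn.admin.acl defaults to the wildcard.
    return conf.get("yarn.admin.acl", "*").strip() == "*"


# Hypothetical configurations for illustration.
default_conf = {"yarn.acl.enable": "true"}
restricted_conf = {
    "yarn.acl.enable": "true",
    "yarn.admin.acl": "hadoopadmin yarnadmgroup,hadoopadmgroup",
}

for label, conf in (("default", default_conf), ("restricted", restricted_conf)):
    status = "open to all users" if admin_acl_is_open(conf) else "restricted"
    print(f"{label}: yarn.admin.acl is {status}")
```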

Queue ACL

Use Queue ACLs to identify and control which users and/or groups can take actions on particular queues. Configure Queue ACLs using the aclSubmitApps and aclAdministerApps properties, which are set per queue. Queue ACLs are scheduler dependent, and the implementation and enforcement differ per scheduler type.

Unlike the YARN Admin ACL, Queue ACLs are not enabled and enforced by default. Instead, you must explicitly enable Queue ACLs. Queue ACLs are defined, per queue, in the Fair Scheduler configuration. By default, neither of the Queue ACL property types is set on any queue, and access is allowed or open to any user.

The users and groups defined in the yarn.admin.acl are considered to be part of the Queue ACL, aclAdministerApps. So any user or group that is defined in the yarn.admin.acl can submit to any queue and kill any running application in the system.

The aclSubmitApps Property

Use the Queue ACL aclSubmitApps property type to enable users and groups to submit or add an application to the queue upon which the property is set. To move an application from one queue to another queue, you must have Submit permissions for both the queue in which the application is running, and the queue into which you are moving the application. You must be an administrator to set Admin ACLs; contact your system administrator to request Submit permission on this queue.
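The move rule above reduces to two submit checks. In this sketch, `can_move` is a hypothetical helper, and the `SUBMITTERS` table is invented sample data that stands in for whatever evaluates aclSubmitApps on a real cluster.

```python
from typing import Callable

# Hypothetical submit permissions per queue, for illustration only.
SUBMITTERS = {
    "root.Marketing": {"john", "jane"},
    "root.Dev": {"jane"},
}


def can_submit(user: str, queue: str) -> bool:
    # Stand-in for a real aclSubmitApps evaluation.
    return user in SUBMITTERS.get(queue, set())


def can_move(user: str, source: str, target: str,
             submit_check: Callable[[str, str], bool]) -> bool:
    """A move needs Submit permission on both the source and target queue."""
    return submit_check(user, source) and submit_check(user, target)


print(can_move("jane", "root.Marketing", "root.Dev", can_submit))
print(can_move("john", "root.Marketing", "root.Dev", can_submit))
```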

The aclAdministerApps Property

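The following example sets both Queue ACL properties on the queue "Marketing". The leading single space in aclAdministerApps makes it a group-only ACL:

<queue name="Marketing"> <aclSubmitApps>john,jane</aclSubmitApps> <aclAdministerApps><single space>others</aclAdministerApps> </queue>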
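Queue ACLs like the ones in this example can be listed from an allocation file with a short script. This sketch assumes the queue definitions sit under an `<allocations>` root element and that the single space is written as a literal space; `SAMPLE_ALLOCATIONS` and `queue_acls` are hypothetical names.

```python
import xml.etree.ElementTree as ET

# Hypothetical allocation file content; the leading space in
# aclAdministerApps is a literal space character.
SAMPLE_ALLOCATIONS = """
<allocations>
  <queue name="root">
    <queue name="Marketing">
      <aclSubmitApps>john,jane</aclSubmitApps>
      <aclAdministerApps> others</aclAdministerApps>
    </queue>
  </queue>
</allocations>
"""


def queue_acls(xml_text):
    """Map each full queue path to its two Queue ACL values (None if unset)."""
    result = {}

    def walk(element, prefix):
        for queue in element.findall("queue"):
            name = queue.get("name")
            path = f"{prefix}.{name}" if prefix else name
            result[path] = {
                tag: queue.findtext(tag)
                for tag in ("aclSubmitApps", "aclAdministerApps")
            }
            walk(queue, path)  # descend into child queues

    walk(ET.fromstring(xml_text.strip()), "")
    return result


for path, acls in queue_acls(SAMPLE_ALLOCATIONS).items():
    print(path, acls)
```

The values are deliberately left unstripped, since trimming would turn a group-only ACL into a user-only ACL.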

Queue ACL Evaluation

The better you understand how Queue ACLs are evaluated, the more prepared you are to define and configure them. First, you should have a basic understanding of how Fair Scheduler queues work.

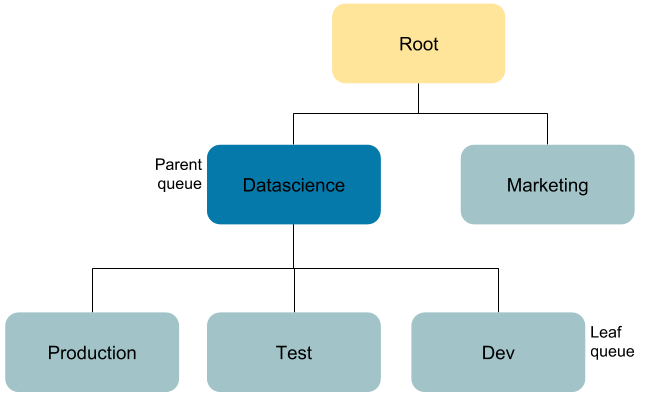

CDH Fair Scheduler supports hierarchical queues, all of which descend from a root queue, which is automatically created and defined within the system when the Scheduler starts.

Available resources are distributed among the children of the root queue in a typical fair scheduling fashion. Then, the children distribute their assigned resources to their children in the same fashion, down to the “leaf” queues at the bottom of the hierarchy.

Applications are scheduled on leaf queues only. You specify queues as children of other queues by placing them as sub-elements of their parents in the Fair Scheduler allocation file (fair-scheduler.xml). The default Queue ACL setting for all parent and leaf queues below the root queue is “ “ (a single space), which means that by default, none of these queues grants access on its own. The root queue defaults to the wildcard character (*), and because Queue ACLs are inherited, every queue remains open until the root queue ACL is changed.

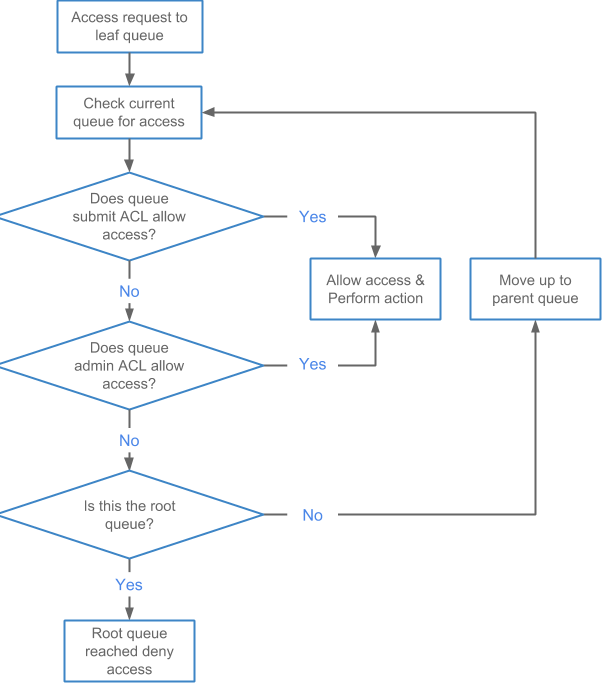

Queue ACL inheritance is enforced by assessing the ACLs defined in the queue hierarchy in a bottom-up order to the root queue. So within this hierarchy, access evaluations start at the level of the bottom-most leaf queue. If the ACL does not provide access, then the parent Queue ACL is checked. These evaluations continue upward until the root queue is checked.
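The bottom-up evaluation described above can be sketched as a loop over the queue path. This is an illustrative model rather than the scheduler's code: `acl_matches` and `has_queue_access` are hypothetical names, queue paths are assumed to be dot-separated (for example `root.Marketing.Campaigns`), and a queue with no entry in the mapping is treated as a single space.

```python
from typing import Dict, Iterable


def acl_matches(acl: str, user: str, groups: Iterable[str]) -> bool:
    """Check one ACL string of the form 'users<space>groups'."""
    if acl.strip() == "*":
        return True
    parts = acl.split(" ")
    users = set(filter(None, parts[0].split(",")))
    acl_groups = set(filter(None, parts[1].split(","))) if len(parts) > 1 else set()
    return user in users or not acl_groups.isdisjoint(groups)


def has_queue_access(queue: str, acls: Dict[str, str],
                     user: str, groups: Iterable[str]) -> bool:
    """Walk from the given queue up to the root; the first match grants access."""
    groups = list(groups)
    path = queue
    while path:
        if acl_matches(acls.get(path, " "), user, groups):
            return True
        # Drop the last path component to move to the parent queue.
        path = path.rpartition(".")[0]
    return False


# Hypothetical aclSubmitApps values for a small hierarchy.
submit_acls = {
    "root": " ",
    "root.Marketing": "john,jane",
    "root.Marketing.Campaigns": " interns",
}
print(has_queue_access("root.Marketing.Campaigns", submit_acls, "john", []))
print(has_queue_access("root.Marketing", submit_acls, "amy", ["interns"]))
```

In the sample data, john reaches the leaf queue through its parent, while amy's group grants access only on the leaf where it is listed.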

Best practice: To secure an environment, set the root queue aclSubmitApps ACL to <single space>, and specify a limited set of users and groups in aclAdministerApps. Set the ACLs for all other queues to provide submit or administrative access as appropriate.

- aclSubmitApps

- aclAdministerApps

The following diagram shows the evaluation flow for Queue ACLs:

Application ACLs

Use Application ACLs to give a user and/or group other than the owner access to an application. The most common use case for Application ACLs occurs when you have a team of users collaborating on or managing a set of applications, and you need to provide read access to logs and job statistics, or access to allow for the modification of a job (killing the job) and/or application. Application ACLs are set per application and are managed by the application owner.

Users who start an application (the owners) always have access to the application they start, which includes the application logs, job statistics, and ACLs. No other user can remove or change owner access. By default, no other users have access to the application data because the Application ACL defaults to “ “ (single space), which means no one has access.

MapReduce

- mapreduce.job.acl-view-job

Provides read access to the MapReduce history and the YARN logs.

- mapreduce.job.acl-modify-job

Provides the same access as mapreduce.job.acl-view-job, and also allows the user to modify a running job.

- mapreduce.cluster.acls.enabled

- mapreduce.cluster.administrators

Spark

Spark ACLs follow a slightly different format, using a separate property for users and groups. Both user and group lists use a comma-separated list of entries. The wildcard character “*” allows access to anyone, and the single space “ “ allows access to no one. Enable Spark ACLs using the property spark.acls.enable, which is set to false by default (not enabled) and must be changed to true to enforce ACLs at the Spark level.

- Set spark.acls.enable to true (default is false).

- Set spark.admin.acls and spark.admin.acls.groups for administrative access to all Spark applications.

- Set spark.ui.view.acls and spark.ui.view.acls.groups for view access to the specific Spark application.

- Set spark.modify.acls and spark.modify.acls.groups for modify access (for example, killing jobs) to the specific Spark application.

Refer to Spark Security and Spark Configuration Security for additional details.
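Because Spark keeps users and groups in separate properties, it can help to assemble them in one place. The sketch below builds the properties named above and renders them as `--conf` arguments for spark-submit; `spark_acl_conf` and `to_submit_args` are hypothetical helper names, and the user and group names are sample data.

```python
from typing import Dict, List, Sequence


def spark_acl_conf(view_users: Sequence[str] = (),
                   view_groups: Sequence[str] = (),
                   modify_users: Sequence[str] = (),
                   modify_groups: Sequence[str] = ()) -> Dict[str, str]:
    """Return Spark ACL properties; empty lists are left unset."""
    conf = {"spark.acls.enable": "true"}
    lists = {
        "spark.ui.view.acls": view_users,
        "spark.ui.view.acls.groups": view_groups,
        "spark.modify.acls": modify_users,
        "spark.modify.acls.groups": modify_groups,
    }
    for key, names in lists.items():
        if names:
            conf[key] = ",".join(names)
    return conf


def to_submit_args(conf: Dict[str, str]) -> List[str]:
    """Render properties as a list of spark-submit --conf arguments."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


conf = spark_acl_conf(view_users=["alice", "fred"], modify_groups=["datascience"])
print(to_submit_args(conf))
```

Passing the arguments as a list, rather than as one shell string, avoids quoting problems if a value ever contains a space.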

Viewing Application Logs

- YARN Admin and Queue ACLs

- Application ACLs

After an application is in the “finished” state, logs are aggregated, depending on your cluster setup. You can access the aggregated logs via the MapReduce History server web interface. Aggregated logs are stored on shared cluster storage, which in most cases is HDFS. You can also share log aggregation via storage options like S3 or Azure by modifying the yarn.nodemanager.remote-app-log-dir setting in Cloudera Manager to point to either S3 or Azure, which should already be configured.

The shared storage on which the logs are aggregated helps to prevent access to the log files via file level permissions. Permissions on the log files are also set at the file system level, and are enforced by the file system: the file system can block any user from accessing the file, which means that the user cannot open/read the file to check the ACLs that are contained within.

- Application owner

- Group defined for the MapReduce History server

When an application runs, generates logs, and then places the logs into HDFS, a path/structure is generated (for example: /tmp/logs/john/logs/application_1536220066338_0001). Access for the application owner "john" might be set to 700, which gives the owner read, write, and execute permission and gives no one else access to the files underneath this directory. If you do not have HDFS access to the files, you are denied access. Command line users identified in mapreduce.job.acl-view-job are also denied access at the file level. In such a use case, the Application ACLs stored inside the aggregated logs are never evaluated, because the user cannot open the files that contain them.
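The effect of a 700 mode can be illustrated with a simplified permission check. This sketch models only the basic owner, group, and other permission bits; it ignores superusers and any file system ACLs, and `can_read` and the group name `hadoop` are hypothetical.

```python
import stat
from typing import Iterable


def can_read(mode: int, owner: str, group: str,
             user: str, user_groups: Iterable[str]) -> bool:
    """Simplified read check using the owner, group, and other bits."""
    if user == owner:
        return bool(mode & stat.S_IRUSR)
    if group in user_groups:
        return bool(mode & stat.S_IRGRP)
    return bool(mode & stat.S_IROTH)


# Mode 700 on a directory: only the owner has any access.
print(stat.filemode(stat.S_IFDIR | 0o700))  # drwx------

print(can_read(0o700, "john", "hadoop", "john", []))           # the owner
print(can_read(0o700, "john", "hadoop", "jane", ["hadoop"]))   # a group member
print(can_read(0o770, "john", "hadoop", "jane", ["hadoop"]))   # group bits set
```

With mode 700 the file system rejects jane before any Application ACL inside the log file could be consulted, which is the situation described above.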

For clusters that do not have log aggregation, logs for running applications are kept on the node where the container runs. You can access these logs via the Resource Manager and Node Manager web interface, which performs the ACL checks.

Killing an Application

The Application ACL mapreduce.job.acl-modify-job determines whether or not a user can modify a job, but in the context of YARN, this only allows the user to kill an application. The kill action is application agnostic and part of the YARN framework. Other application types, like MapReduce or Spark, implement their own kill action independent of the YARN framework. MapReduce provides the kill actions via the mapred command.

In YARN, the following users can kill a running application:

- The application owner

- A cluster administrator defined in yarn.admin.acl

- A queue administrator defined in aclAdministerApps for the queue in which the application is running

Note that for the queue administrators, ACL inheritance applies, as described earlier.

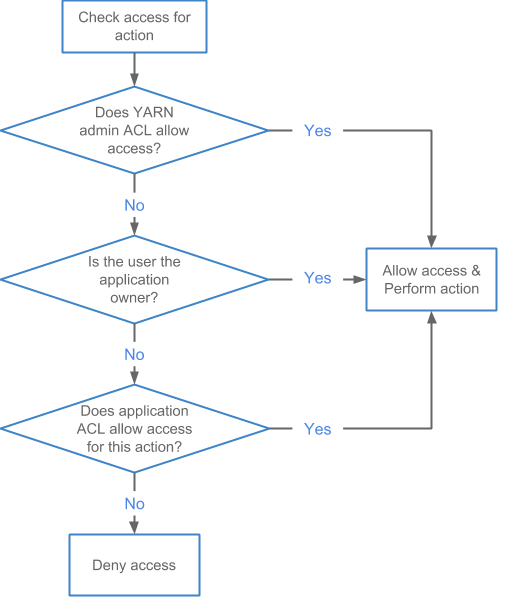

Application ACL Evaluation

The better you understand how YARN ACLs are evaluated, the more prepared you will be to define and configure the various YARN ACLs available to you. For example, if you enable user access in Administrator ACLs, then you must be aware that the user may be able to see sensitive data, and you should plan accordingly. So if you are the administrator for an entire cluster, you also have access to the logs for running applications, which means you can view sensitive information in those logs associated with running the application.

Best Practice: To secure an environment, set the YARN Admin ACL to include a limited set of users and/or groups.

The following diagram shows the evaluation flow for Application ACLs:

The following diagram shows a sample queue structure, starting with leaf queues on the bottom, up to root queue at the top; use it to follow the examples of killing an application and viewing a log:

Example: Killing an Application in the Queue "Production"

- Application owner: John

- "Datascience" queue administrator: Jane

- YARN cluster administrator: Bob

In this use case, John attempts to kill the application (see Killing an Application), which is allowed because he is the application owner.

Working as the queue administrator, Jane attempts to kill a job in the queue "Production", which she can do as the queue administrator of the parent queue.

Bob is the YARN cluster administrator and he is also listed as a user in the Admin ACL. He attempts to kill a job that he does not own, but because he is the YARN cluster administrator, he can kill the job.
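The three outcomes in this example can be reproduced with a small model of the kill check. It is a sketch under stated assumptions: the queue path `root.Datascience.Production` is assumed from the statement that "Datascience" is the parent queue, user names are written in lowercase, and `acl_matches` and `can_kill` are hypothetical helpers.

```python
from typing import Dict, Iterable, Optional


def acl_matches(acl: str, user: str, groups: Iterable[str]) -> bool:
    """Check one ACL string of the form 'users<space>groups'."""
    if acl.strip() == "*":
        return True
    parts = acl.split(" ")
    users = set(filter(None, parts[0].split(",")))
    acl_groups = set(filter(None, parts[1].split(","))) if len(parts) > 1 else set()
    return user in users or not acl_groups.isdisjoint(groups)


def can_kill(user: str, groups: Iterable[str], app_owner: str, queue: str,
             admin_acl: str, administer_acls: Dict[str, str]) -> Optional[str]:
    """Return the reason the kill is allowed, or None if it is denied."""
    groups = list(groups)
    if user == app_owner:
        return "application owner"
    if acl_matches(admin_acl, user, groups):
        return "cluster administrator"
    path = queue
    while path:  # queue administrators are inherited from parent queues
        if acl_matches(administer_acls.get(path, " "), user, groups):
            return f"queue administrator of {path}"
        path = path.rpartition(".")[0]
    return None


QUEUE = "root.Datascience.Production"               # assumed hierarchy
ADMIN_ACL = "bob"                                   # yarn.admin.acl
ADMINISTER = {"root": " ", "root.Datascience": "jane"}  # aclAdministerApps

for user in ("john", "jane", "bob", "eve"):
    print(user, can_kill(user, [], "john", QUEUE, ADMIN_ACL, ADMINISTER))
```

The extra user eve is not part of the example; she is included only to show the denied case.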

Example: Moving the Application and Viewing the Log in the Queue "Test"

- Application owner: John

- "Marketing" and "Dev" queue administrator: Jane

- Jane has log view rights via the mapreduce.job.acl-view-job ACL

- YARN cluster administrator: Bob

In this use case, John attempts to view the logs for his job, which is allowed because he is the application owner.

Jane attempts to access application_1536220066338_0002 in the queue "Test" to move the application to the "Marketing" queue. She is denied access to the "Test" queue via the queue ACLs, so she cannot submit to or administer the queue "Test". She is also unable to kill a job running in queue "Test". She then attempts to access the logs for application_1536220066338_0002 and is allowed access via the mapreduce.job.acl-view-job ACL.

Configuring and Enabling YARN ACLs

To configure YARN ACLs, refer to Configuring ACLs.

To enable YARN ACLs, refer to Enabling ACLs.