Dynamic Resource Pools

Minimum Required Role: Configurator (also provided by Cluster Administrator, Full Administrator)

A dynamic resource pool is a named configuration of resources and a policy for scheduling the resources among YARN applications and Impala queries running in the pool. Dynamic resource pools allow you to schedule and allocate resources to YARN applications and Impala queries based on a user's access to specific pools and the resources available to those pools. If a pool's allocation is not in use, it can be preempted and distributed to other pools. Otherwise, a pool receives a share of resources according to the pool's weight. Access control lists (ACLs) restrict who can submit work to dynamic resource pools and administer them.

Configuration Sets define the allocation of resources across pools that can be active at a given time. For example, you can define "daytime" and "off hour" configuration sets, for which you specify different resource allocations during the daytime and for the remaining time of the week.

A scheduling rule defines when a configuration set is active. The configurations in the configuration set are propagated to the fair scheduler allocation file as required by the schedule. The updated files are stored in the YARN ResourceManager configuration directory /var/run/cloudera-scm-agent/process/nn-yarn-RESOURCEMANAGER on the host running the ResourceManager role. See Server and Client Configuration.

The resources available for sharing are subject to the allocations made for each service if static service pools (cgroups) are enforced. For example, if the static pool for YARN is 75% of the total cluster resources, resource pools will use only 75% of resources.

After you create or edit dynamic resource pool settings, the Refresh Dynamic Resource Pools and Discard Changes buttons display. Click Refresh Dynamic Resource Pools to propagate the settings to the fair scheduler allocation file (by default, fair-scheduler.xml). The updated files are stored in the YARN ResourceManager configuration directory /var/run/cloudera-scm-agent/process/nn-yarn-RESOURCEMANAGER on the host running the ResourceManager role. See Server and Client Configuration.

For information on determining how allocated resources are used, see Cluster Utilization Reports.

Pool Hierarchy

YARN resource pools can be nested, with subpools restricted by the settings of their parent pool. This allows you to specify a set of pools whose resources are limited by the resources of a parent. Each subpool can have its own resource restrictions; if those restrictions fall within the configuration of the parent pool, the limits for the subpool take effect. If the limits for the subpool exceed those of the parent, the parent pool limits take precedence.

You create a parent pool either by configuring it as a parent or by creating a subpool under the pool. Once a pool is a parent, you cannot submit jobs to that pool; they must be submitted to a subpool.
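The precedence between subpool and parent limits can be sketched as taking the smaller of the two values. This is only an illustration: the function name and the numbers are hypothetical, and it is not the Fair Scheduler's own code.

```python
def effective_limit(subpool_limit_gb, parent_limit_gb):
    """Return the limit that takes effect for a subpool.

    Hypothetical helper: a subpool limit applies while it stays within
    the parent's limit; otherwise the parent's limit takes precedence.
    """
    return min(subpool_limit_gb, parent_limit_gb)


# Parent pool capped at 100 GB (illustrative numbers).
print(effective_limit(40, 100))   # subpool limit is within the parent's limit
print(effective_limit(150, 100))  # subpool limit exceeds the parent's limit
```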

Managing Dynamic Resource Pools

The main entry point for using dynamic resource pools with Cloudera Manager is the menu item.

Continue reading:

- Viewing Dynamic Resource Pool Configuration

- Creating a YARN Dynamic Resource Pool

- Enabling or Disabling Impala Admission Control in Cloudera Manager

- Creating an Impala Dynamic Resource Pool

- Configuration Settings for Impala Dynamic Resource Pool

- Cloning a Resource Pool

- Deleting a Resource Pool

- Editing Dynamic Resource Pools

Viewing Dynamic Resource Pool Configuration

The dynamic resource pool configuration displays the following tabs and pool properties:

- YARN - Weight, Virtual Cores, Min and Max Memory, Max Running Apps, Max Resources for Undeclared Children, and Scheduling Policy

- Impala Admission Control - Max Memory, Max Running Queries, Max Queued Queries, Queue Timeout, Minimum Query Memory Limit, Maximum Query Memory Limit, Clamp MEM_LIMIT Query Option, and Default Query Memory Limit

To view the configuration:

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

Creating a YARN Dynamic Resource Pool

There is always a resource pool named root.default. By default, all YARN applications run in this pool. You create additional pools when your workload includes identifiable groups of applications (such as from a particular application, or a particular group within your organization) that have their own requirements.

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN tab.

- Click Create Resource Pool. The Create Resource Pool dialog box displays, showing the Resource Limits tab.

- Specify a name and resource limits for the pool:

- In the Resource Pool Name field, specify a unique pool name containing only alphanumeric characters. If referencing a user or group name that contains a ".", replace the "." with "_dot_".

- To make the pool a parent pool, select the Parent Pool checkbox.

- Define a configuration set by assigning values to the listed properties. Specify a weight that indicates that pool's share of resources relative to other pools, minimum and maximum for virtual cores and memory, and a limit on the number of applications that can run simultaneously in the pool. If this is a parent pool, you can also set the maximum resources for undeclared children.

- Click the Scheduling Policy tab and select a policy:

- Dominant Resource Fairness (DRF) (default) - An extension of fair scheduling for more than one resource. DRF determines CPU and memory resource shares based on the availability of those resources and the job requirements.

- Fair (FAIR) - Determines resource shares based on memory.

- First-In, First-Out (FIFO) - Determines resource shares based on when a job was added.

- If you have enabled Fair Scheduler preemption, click the Preemption tab and optionally set a preemption timeout to specify how long a job in this pool must wait before it can preempt resources from jobs in other pools. To enable preemption, follow the procedure in Enabling and Disabling Fair Scheduler Preemption.

- If you have enabled ACLs and specified users or groups, optionally click the Submission and Administration Access Control tabs to specify which users and groups can submit applications and which users can view all and kill applications. By default, anyone can submit, view all, and kill applications. To restrict these permissions, select Allow these users and groups and provide a comma-delimited list of users and groups in the Users and Groups fields respectively.

- Click Create.

- Click Refresh Dynamic Resource Pools.
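The difference between the Fair and DRF scheduling policies listed in the procedure above can be illustrated with a small sketch. It assumes that DRF compares pools by their largest share of any single resource, while Fair looks at memory only. The function names, dictionary keys, and numbers are hypothetical and do not come from the scheduler.

```python
def fair_share_key(usage, capacity):
    """Fair (FAIR): compare pools by their share of memory only."""
    return usage["memory_gb"] / capacity["memory_gb"]


def dominant_share(usage, capacity):
    """DRF: compare pools by their largest share of any resource."""
    return max(usage[resource] / capacity[resource] for resource in capacity)


# Illustrative cluster capacity and a CPU-heavy pool.
capacity = {"memory_gb": 100, "vcores": 50}
cpu_heavy = {"memory_gb": 10, "vcores": 25}

print(fair_share_key(cpu_heavy, capacity))  # memory share only
print(dominant_share(cpu_heavy, capacity))  # vcores dominate for this pool
```

Under these assumptions the CPU-heavy pool looks small to a memory-only comparison, while the dominant-share comparison also accounts for its virtual core usage.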

Enabling or Disabling Impala Admission Control in Cloudera Manager

We recommend enabling admission control on all production clusters to alleviate possible capacity issues. The capacity issues could be because of a high volume of concurrent queries, because of heavy-duty join and aggregation queries that require large amounts of memory, or because Impala is being used alongside other Hadoop data management components and the resource usage of Impala must be constrained to work well in a multitenant deployment.

- Go to the Impala service.

- In the Configuration tab, select .

- Select or clear both the Enable Impala Admission Control checkbox and the Enable Dynamic Resource Pools checkbox.

- Enter a Reason for change, and then click Save Changes to commit the changes.

- Restart the Impala service.

After completing this task, customize the configuration settings for the dynamic resource pools, as described below.

Creating an Impala Dynamic Resource Pool

There is always a resource pool designated as root.default. By default, all Impala queries run in this pool when the dynamic resource pool feature is enabled for Impala. You create additional pools when your workload includes identifiable groups of queries (such as from a particular application, or a particular group within your organization) that have their own requirements for concurrency, memory use, or service level agreement (SLA). Each pool has its own settings related to memory, number of queries, and timeout interval.

- Select . If the cluster has an Impala service, the tab displays under the Impala Admission Control tab.

- Click the Impala Admission Control tab.

- Click Create Resource Pool.

- Specify a name and resource limits for the pool:

- In the Resource Pool Name field, type a unique name containing only alphanumeric characters.

- Optionally, click the Submission Access Control tab to specify which users and groups can submit queries. By default, anyone can submit queries. To restrict this permission, select the Allow these users and groups option and provide a comma-delimited list of users and groups in the Users and Groups fields respectively.

- Click Create.

- Click Refresh Dynamic Resource Pools.

Configuration Settings for Impala Dynamic Resource Pool

Impala dynamic resource pools support the following settings.

- Max Memory

- Maximum amount of aggregate memory available across the cluster to all queries executing in this pool. This should be a portion of the aggregate configured memory for Impala daemons, which will be shown in the settings dialog next to this option for convenience. Setting this to a non-zero value enables memory based admission control.

Impala determines the expected maximum memory used by all queries in the pool and holds back any further queries that would result in Max Memory being exceeded.

If you specify Max Memory, you should specify the amount of memory to allocate to each query in this pool. You can do this in two ways:

- By setting Maximum Query Memory Limit and Minimum Query Memory Limit. This is preferred in CDH 6.1 and higher and gives Impala flexibility to set aside more memory for queries that are expected to be memory-hungry.

- By setting Default Query Memory Limit to the exact amount of memory that Impala should set aside for queries in that pool.

Note that if you do not set any of the above options, or set Default Query Memory Limit to 0, Impala will rely entirely on memory estimates to determine how much memory to set aside for each query. This is not recommended because it can result in queries not running or being starved for memory if the estimates are inaccurate.

For example, consider the following scenario:

- The cluster is running impalad daemons on five hosts.

- A dynamic resource pool has Max Memory set to 100 GB.

- The Maximum Query Memory Limit for the pool is 10 GB and Minimum Query Memory Limit is 2 GB. Therefore, any query running in this pool could use up to 50 GB of memory (Maximum Query Memory Limit * number of Impala nodes).

- Impala will execute varying numbers of queries concurrently because queries may be given memory limits anywhere between 2 GB and 10 GB, depending on the estimated memory requirements. For example, Impala may execute up to 10 small queries with 2 GB memory limits or two large queries with 10 GB memory limits because that is what will fit in the 100 GB cluster-wide limit when executing on five hosts.

- The executing queries may use less memory than the per-host memory limit or the Max Memory cluster-wide limit if they do not need that much memory. In general this is not a problem so long as you are able to execute enough queries concurrently to meet your needs.
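The arithmetic in this scenario can be checked with a short sketch. The function names are hypothetical; the numbers (five hosts, 100 GB Max Memory, 2 GB and 10 GB per-host limits) are the ones from the scenario above.

```python
def aggregate_memory_gb(per_host_limit_gb, num_hosts):
    """Memory counted against the pool's Max Memory for one query."""
    return per_host_limit_gb * num_hosts


def max_concurrent_queries(max_memory_gb, per_host_limit_gb, num_hosts):
    """How many queries with this per-host limit fit under Max Memory."""
    return max_memory_gb // aggregate_memory_gb(per_host_limit_gb, num_hosts)


HOSTS = 5
MAX_MEMORY_GB = 100

print(aggregate_memory_gb(10, HOSTS))                    # 50
print(max_concurrent_queries(MAX_MEMORY_GB, 2, HOSTS))   # 10
print(max_concurrent_queries(MAX_MEMORY_GB, 10, HOSTS))  # 2
```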

- Minimum Query Memory Limit and Maximum Query Memory Limit

- These two options determine the minimum and maximum per-host memory limit that will be chosen by Impala admission control for queries in this resource pool. If set, Impala admission control will choose a memory limit between the minimum and maximum value based on the per-host memory estimate for the query. The memory limit chosen determines the amount of memory that Impala admission control will set aside for this query on each host that the query is running on. The aggregate memory across all of the hosts that the query is running on is counted against the pool’s Max Memory.

Minimum Query Memory Limit must be less than or equal to Maximum Query Memory Limit and Max Memory.

You can override Impala’s choice of memory limit by setting the MEM_LIMIT query option. If the Clamp MEM_LIMIT Query Option is selected and the user sets MEM_LIMIT to a value that is outside of the range specified by these two options, then the effective memory limit will be either the minimum or maximum, depending on whether MEM_LIMIT is lower than or higher than the range.

- Default Query Memory Limit

- The default memory limit applied to queries executing in this pool when no explicit MEM_LIMIT query option is set. The memory limit chosen determines the amount of memory that Impala admission control will set aside for this query on each host that the query is running on. The aggregate memory across all of the hosts that the query is running on is counted against the pool’s Max Memory.

This option is deprecated in CDH 6.1 and higher and is replaced by Maximum Query Memory Limit and Minimum Query Memory Limit. Do not set this field if either Maximum Query Memory Limit or Minimum Query Memory Limit is set.

- Max Running Queries

- Maximum number of concurrently running queries in this pool. The default value is unlimited for CDH 5.7 or higher. (optional)

Any queries for this pool that exceed Max Running Queries are added to the admission control queue until other queries finish. You can use Max Running Queries in the early stages of resource management, when you do not have extensive data about query memory usage, to determine if the cluster performs better overall if throttling is applied to Impala queries.

For a workload with many small queries, you typically specify a high value for this setting, or leave the default setting of "unlimited". For a workload with expensive queries, where some number of concurrent queries saturate the memory, I/O, CPU, or network capacity of the cluster, set the value low enough that the cluster resources are not overcommitted for Impala.

Once you have enabled memory-based admission control using other pool settings, you can still use Max Running Queries as a safeguard. If queries exceed either the total estimated memory or the maximum number of concurrent queries, they are added to the queue.

- Max Queued Queries

- Maximum number of queries that can be queued in this pool. The default value is 200 for CDH 5.3 or higher and 50 for previous versions of Impala. (optional)

- Queue Timeout

- The amount of time, in milliseconds, that a query waits in the admission control queue for this pool before being canceled. The default value is 60,000 milliseconds.

In the following cases, Queue Timeout is not significant, and you can specify a high value to avoid canceling queries unexpectedly:

- In a low-concurrency workload where few or no queries are queued

- In an environment without a strict SLA, where it does not matter if queries occasionally take longer than usual because they are held in admission control

In a high-concurrency workload, especially for queries with a tight SLA, long wait times in admission control can cause a serious problem. For example, if a query needs to run in 10 seconds, and you have tuned it so that it runs in 8 seconds, it violates its SLA if it waits in the admission control queue longer than 2 seconds. In a case like this, set a low timeout value and monitor how many queries are cancelled because of timeouts. This technique helps you to discover capacity, tuning, and scaling problems early, and helps avoid wasting resources by running expensive queries that have already missed their SLA.

If you identify some queries that can have a high timeout value, and others that benefit from a low timeout value, you can create separate pools with different values for this setting.

- Clamp MEM_LIMIT Query Option

- If this field is not selected, the MEM_LIMIT query option will not be bounded by the Maximum Query Memory Limit and the Minimum Query Memory Limit values specified for this resource pool. By default, this field is selected in CDH 6.1 and higher. The field is disabled if both Minimum Query Memory Limit and Maximum Query Memory Limit are not set.
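The interaction between Minimum Query Memory Limit, Maximum Query Memory Limit, the MEM_LIMIT query option, and Clamp MEM_LIMIT Query Option can be sketched as follows. This is a simplified model, not Impala's implementation: the function and parameter names are hypothetical, and choosing the limit by clamping the per-host estimate into the range is an assumption about how "a memory limit between the minimum and maximum" is picked. Default Query Memory Limit is not modeled.

```python
def clamp(value, low, high):
    """Bound value to the closed range [low, high]."""
    return max(low, min(value, high))


def effective_mem_limit_gb(estimate_gb, min_gb, max_gb,
                           mem_limit_gb=None, clamp_mem_limit=True):
    """Per-host memory limit for a query, in GB (simplified model).

    estimate_gb     -- per-host memory estimate for the query
    mem_limit_gb    -- value of the MEM_LIMIT query option, if the user set it
    clamp_mem_limit -- state of the Clamp MEM_LIMIT Query Option field
    """
    if mem_limit_gb is None:
        # No override: pick a limit in [min_gb, max_gb] from the estimate.
        return clamp(estimate_gb, min_gb, max_gb)
    if clamp_mem_limit:
        # The override is bounded by the pool's minimum and maximum.
        return clamp(mem_limit_gb, min_gb, max_gb)
    # Clamp option cleared: the user's MEM_LIMIT is used unchanged.
    return mem_limit_gb


print(effective_mem_limit_gb(1, 2, 10))                   # estimate below the range
print(effective_mem_limit_gb(5, 2, 10, mem_limit_gb=20))  # MEM_LIMIT above the range
print(effective_mem_limit_gb(5, 2, 10, mem_limit_gb=20, clamp_mem_limit=False))
```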

Cloning a Resource Pool

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

- Click the icon at the right of a resource pool row and select Clone.

- Specify a name for the pool and edit pool properties as desired.

- Click Create.

- Click Refresh Dynamic Resource Pools.

Deleting a Resource Pool

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

- Click the icon at the right of a resource pool row and select Delete.

- Click OK.

- Click Refresh Dynamic Resource Pools.

Editing Dynamic Resource Pools

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

- Click Edit at the right of a resource pool row. Edit the properties.

- If you have enabled ACLs and specified users or groups, optionally click the Submission and Administration Access Control tabs to specify which users and groups can submit applications and which users can view all and kill applications. By default, anyone can submit, view all, and kill applications. To restrict these permissions, select Allow these users and groups and provide a comma-delimited list of users and groups in the Users and Groups fields respectively.

- Click Save.

- Click Refresh Dynamic Resource Pools.

YARN Pool Status and Configuration Options


Viewing Dynamic Resource Pool Status

- Cluster menu

- Select . The tab displays.

- Click View Dynamic Resource Pool Status.

- YARN service

- Go to the YARN service.

- Click the Resource Pools tab.

Configuring Default YARN Fair Scheduler Properties

For information on the default properties, see Configuring the Fair Scheduler.

- Select . The tab displays.

- Click the YARN tab.

- Click the Default Settings button.

- Specify the default scheduling policy, maximum applications, and preemption timeout properties.

- Click Save.

- Click Refresh Dynamic Resource Pools.

Creating a Resource Subpool

YARN resource pools can form a nested hierarchy. To manually create a subpool:

- Select . The tab displays.

- Click the icon at the right of a resource pool row and select Create Subpool. Configure subpool properties.

- Click Create.

- Click Refresh Dynamic Resource Pools.

Setting YARN User Limits

Pool properties determine the maximum number of applications that can run in a pool. To limit the number of applications specific users can run at the same time:

- Select . The tab displays.

- Click the User Limits tab. The table displays a list of users and the maximum number of jobs each user can submit.

- Click Add User Limit.

- Specify a username containing only alphanumeric characters. If referencing a user or group name that contains a ".", replace the "." with "_dot_".

- Specify the maximum number of running applications.

- Click Create.

- Click Refresh Dynamic Resource Pools.

Configuring ACLs

To configure which users and groups can submit and kill applications in any resource pool:

- Enable ACLs.

- In Cloudera Manager select the YARN service.

- Click the Configuration tab.

- Search for the Admin ACL property, and specify which users and groups can submit and kill applications.

- Enter a Reason for change, and then click Save Changes to commit the changes.

- Return to the Home page by clicking the Cloudera Manager logo.

- Click the icon that is next to any stale services to invoke the cluster restart wizard.

- Click Restart Stale Services.

- Click Restart Now.

- Click Finish.

Enabling ACLs

To specify whether ACLs are checked:

- In Cloudera Manager select the YARN service.

- Click the Configuration tab.

- Search for the Enable ResourceManager ACL property, and select the YARN service.

- Enter a Reason for change, and then click Save Changes to commit the changes.

- Return to the Home page by clicking the Cloudera Manager logo.

- Click the icon that is next to any stale services to invoke the cluster restart wizard.

- Click Restart Stale Services.

- Click Restart Now.

- Click Finish.

Defining Configuration Sets

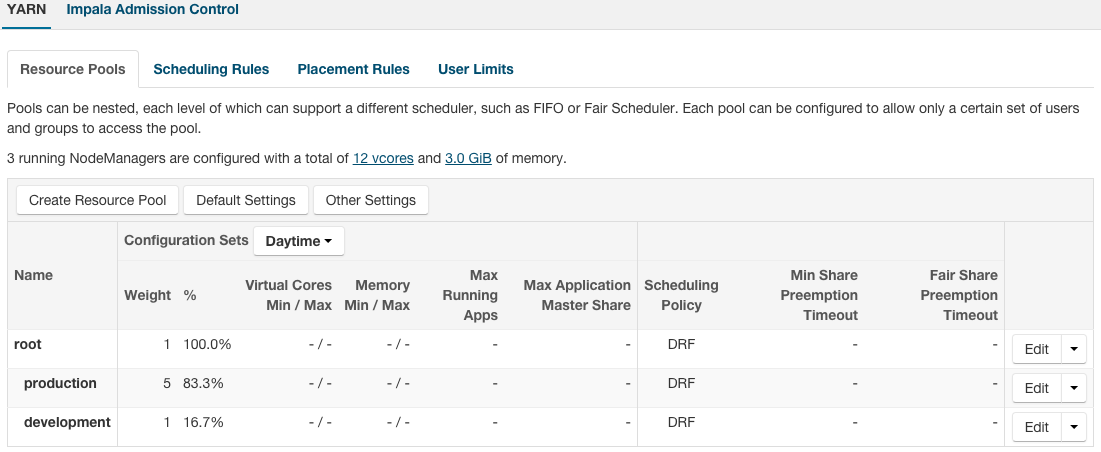

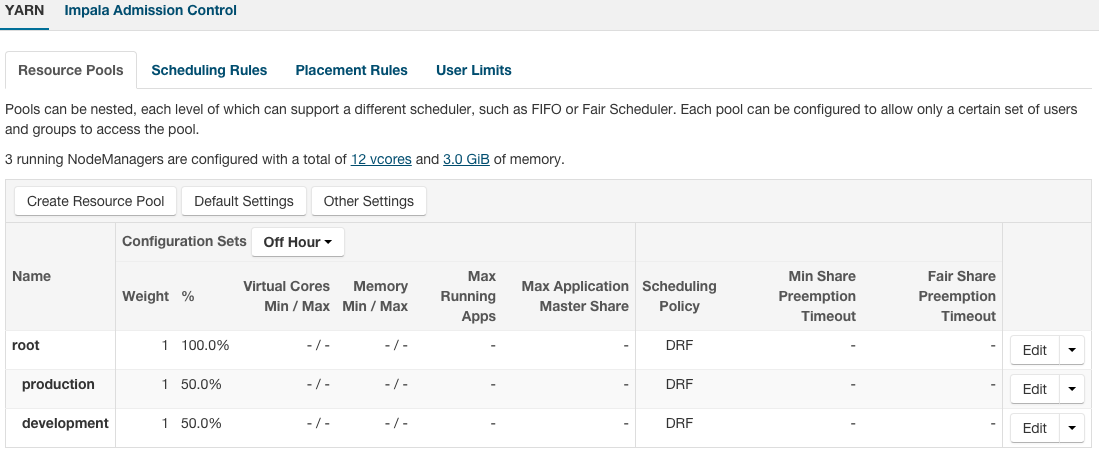

Configuration Sets define the allocation of resources across pools that can be active at a given time. For example, you can define "daytime" and "off hour" configuration sets, for which you specify different resource allocations during the daytime and for the remaining time of the week.

You define configuration set activity by creating scheduling rules. Once you have defined configuration sets, you can configure limits such as weight, minimum and maximum memory and virtual cores, and maximum running applications.

Specifying Resource Limit Properties

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the Resource Pools tab.

- For each resource pool, click Edit.

- Select a configuration set name.

- Edit the configuration set properties and click Save.

- Click Refresh Dynamic Resource Pools.

Example Configuration Sets

The Daytime configuration set assigns the production pool five times the resources of the development pool:

The Off Hour configuration set assigns the production and development pools an equal amount of resources:

See example scheduling rules for these configuration sets.
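The two example configuration sets can be approximated numerically. The sketch assumes the 5:1 and 1:1 splits are expressed purely through pool weights, with each pool receiving resources in proportion to its weight; the weight values and function name are illustrative and are not taken from the original figures.

```python
def shares_by_weight(weights):
    """Split resources between sibling pools in proportion to their weights."""
    total = sum(weights.values())
    return {pool: weight / total for pool, weight in weights.items()}


# Illustrative weights only (assumed, not copied from the product screens).
configuration_sets = {
    "Daytime": {"production": 5, "development": 1},
    "Off Hour": {"production": 1, "development": 1},
}

for name, weights in configuration_sets.items():
    print(name, shares_by_weight(weights))
```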

Viewing the Properties of a Configuration Set

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- In the Configuration Sets drop-down list, select a configuration set. The properties of each pool for that configuration set display.

Scheduling Configuration Sets

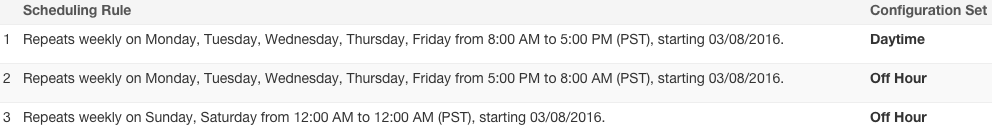

A scheduling rule defines when a configuration set is active. The configurations in the configuration set are propagated to the fair scheduler allocation file as required by the schedule. The updated files are stored in the YARN ResourceManager configuration directory /var/run/cloudera-scm-agent/process/nn-yarn-RESOURCEMANAGER on the host running the ResourceManager role. See Server and Client Configuration.

Example Scheduling Rules

Consider the example Daytime and Off Hour configuration sets. To specify that the Daytime configuration set is active every weekday from 8:00 a.m. to 5:00 p.m. and the Off Hour configuration set is active all other times (evenings and weekends), define the following rules:
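The intent of these rules can be expressed as a small sketch that returns the configuration set active at a given moment. It is a hypothetical model of the schedule described above (weekdays from 8:00 a.m. to 5:00 p.m. for Daytime, everything else for Off Hour), not how Cloudera Manager stores or evaluates scheduling rules.

```python
from datetime import datetime


def active_configuration_set(now):
    """Return the configuration set active at `now` for the example rules."""
    is_weekday = now.weekday() < 5       # Monday is 0, Sunday is 6
    in_day_window = 8 <= now.hour < 17   # from 8:00 a.m. up to 5:00 p.m.
    return "Daytime" if is_weekday and in_day_window else "Off Hour"


print(active_configuration_set(datetime(2024, 1, 3, 10, 0)))  # a Wednesday morning
print(active_configuration_set(datetime(2024, 1, 6, 10, 0)))  # a Saturday morning
```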

Adding a Scheduling Rule

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the Scheduling Rules tab.

- Click Create Scheduling Rule.

- In the Configuration Set field, choose the configuration set to which the rule applies. Select Create New or Use Existing.

- If you create a new configuration set, type a name in the Name field.

If you use an existing configuration set, select one from the drop-down list.

- Configure the rule to repeat or not:

- To repeat the rule, keep the Repeat field selected and specify the repeat frequency. If the frequency is weekly, specify the repeat day or days.

- If the rule does not repeat, clear the Repeat field and click the left side of the on field to display a drop-down calendar, where you set the starting date and time. When you specify the date and time, a default time window of two hours is set in the right side of the on field. Click the right side to adjust the date and time.

- Click Create.

- Click Refresh Dynamic Resource Pools.

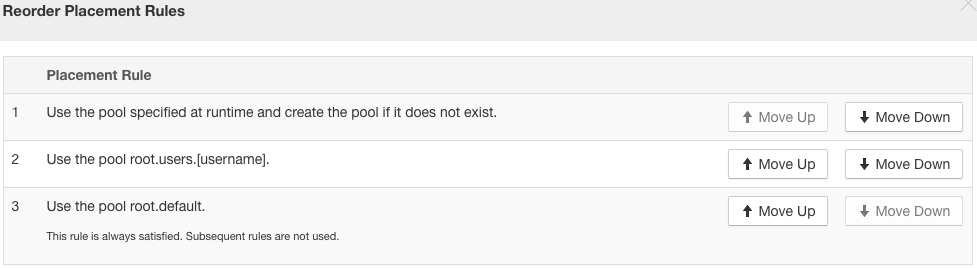

Reordering Scheduling Rules

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the Scheduling Rules tab.

- Click Reorder Scheduling Rules.

- Click Move Up or Move Down in a rule row.

- Click Save.

- Click Refresh Dynamic Resource Pools.

Editing a Scheduling Rule

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click Scheduling Rules.

- Click Edit at the right of a rule.

- Edit the rule.

- Click Save.

- Click Refresh Dynamic Resource Pools.

Deleting a Scheduling Rule

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click Scheduling Rules.

- Click the icon at the right of a rule and select Delete.

- Click OK.

- Click Refresh Dynamic Resource Pools.

Assigning Applications and Queries to Resource Pools

- To specify a pool at run time for a YARN application, provide the pool name in the mapreduce.job.queuename property.

- To specify a pool at run time for an Impala query, provide the pool name in the REQUEST_POOL option.

Cloudera Manager allows you to specify a set of ordered rules for assigning applications and queries to pools. You can also specify default pool settings directly in the YARN fair scheduler configuration.

Some rules allow you to specify that the pool be created in the dynamic resource pool configuration if it does not already exist. Allowing pools to be created is optional. If a rule is satisfied and you do not create a pool, YARN runs the job "ad hoc" in a pool to which resources are not assigned or managed.

If no rule is satisfied when the application or query runs, the YARN application or Impala query is rejected.

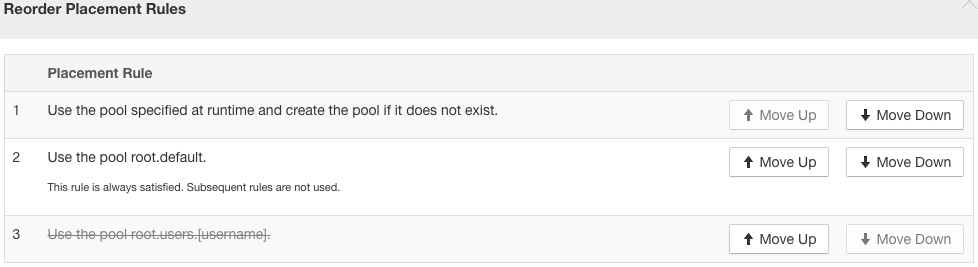

Placement Rule Ordering and Evaluation

Pool placement rules are evaluated in the order in which they appear in the placement rule list. When a job is submitted, the rules are evaluated, and the first matching rule is used to determine the pool in which the job is run.

If a rule is always satisfied, subsequent rules are not evaluated. Rules that are never evaluated appear in struck-through gray text. For an example, see Example Placement Rules.

By default, pool placement rules are ordered in reverse order of when they were added; the last rule added appears first. You can easily reorder rules.
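The effect of an always-satisfied rule on the rules that follow it can be sketched as below. The representation of a rule as a (description, always_satisfied) pair is hypothetical and exists only for illustration; the ordering mirrors the reordered case described in Example Placement Rules.

```python
def unreachable_rules(rules):
    """Return the rules that can never be evaluated.

    `rules` is an ordered list of (description, always_satisfied) pairs.
    Every rule after the first always-satisfied rule is unreachable.
    """
    for index, (_, always_satisfied) in enumerate(rules):
        if always_satisfied:
            return [description for description, _ in rules[index + 1:]]
    return []


rules = [
    ("Use the pool specified at run time", False),
    ("Use the pool root.default", True),
    ("Use the pool root.users.[username]", False),
]
print(unreachable_rules(rules))
```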

Creating Pool Placement Rules

Placement rules determine the pools to which applications and queries are assigned. At installation, Cloudera Manager provides a set of default rules and rule ordering.

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

- Click the Placement Rules tab.

- Click Create Placement Rule. In the Type drop-down list, select a rule that specifies the name of the pool and its position in the pool hierarchy:

- YARN

- specified at run time - Use root.[pool name], where pool name is the name of the pool specified at run time.

- root.users.[username] - Use a pool named after the user submitting the application, within the parent pool root.users. The root.users parent pool and this rule are created by default. However, on upgrading from Cloudera Manager 5.7, neither the pool nor the placement rule is added.

- root.default - Use the root.default pool.

- root.[pool name] - Use root.pool name, where pool name is the name you specify in the Pool Name field that displays after you select the rule.

- root.[primary group] - Use the pool that matches the primary group of the user submitting the application.

- root.[secondary group] - Use the pool that matches one of the secondary groups of the user that submitted the application.

- root.[username] - Use the pool that matches the name of the user that submitted the application.

- root.[primary group].[username] - Use the parent pool that matches the primary group of the user that submitted the application and then a subpool that matches the username.

- root.[secondary group].[username] - Use the parent pool that matches one of the secondary groups of the user that submitted the application and then a subpool that matches the username.

- Impala

- specified at run time - Use the REQUEST_POOL query option. For example, SET REQUEST_POOL=root.[pool name].

- root.[pool name] - Use root.pool name, where pool name is the name you specify in the Pool Name field that displays after you select the rule.

- root.[username] - Use the pool that matches the name of the user that submitted the query. This is not recommended.

- root.[primary group] - Use the pool that matches the primary group of the user that submitted the query.

- root.[secondary group] - Use the pool that matches one of the secondary groups of the user that submitted the query.

- root.default - Use the root.default pool.

For example, consider the following three Impala users.

- username: test1, secondarygroupname: testgroup

- username: test2, secondarygroupname: testgroup

- username: test3, secondarygroupname: testgroup

This cluster has 2 dynamic pools: root.default and root.testgroup

This cluster has 3 placement rules listed in the following order.

- root.[username]

- root.[primary group]

- root.[secondary group]

With the above placement rules, queries submitted by all three users are mapped to the root.testgroup resource pool.


- (YARN only) To indicate that the pool should be created if it does not exist when the application runs, check the Create pool if it does not exist checkbox.

- Click Create. The rule is added to the top of the placement rule list and becomes the first rule evaluated.

- Click Refresh Dynamic Resource Pools.
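The Impala example in this procedure (users test1, test2, and test3, with pools root.default and root.testgroup) can be traced with a small sketch of first-match evaluation. It assumes that a rule matches only when the pool it names already exists. The data structures and function names are hypothetical and do not reflect Impala's internals.

```python
def place_query(user, rules, existing_pools):
    """Return the first existing pool matched by the ordered rules, or None."""
    for rule in rules:
        for candidate in rule(user):
            if candidate in existing_pools:
                return candidate
    return None  # no rule satisfied: the query is rejected


def by_username(user):
    return ["root." + user["username"]]


def by_primary_group(user):
    group = user.get("primary_group")
    return ["root." + group] if group else []


def by_secondary_group(user):
    return ["root." + group for group in user.get("secondary_groups", [])]


pools = {"root.default", "root.testgroup"}
rules = [by_username, by_primary_group, by_secondary_group]

for name in ("test1", "test2", "test3"):
    user = {"username": name, "secondary_groups": ["testgroup"]}
    print(name, "->", place_query(user, rules, pools))
```

No pool named after any of the users exists and no primary group is given, so the first two rules do not match and the third rule places each query in root.testgroup.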

Default Placement Rules and Order

- YARN

- Use the pool specified at run time and create the pool if it does not exist.

- Use the pool root.users.[username].

- Use the pool root.default.

- Impala

- Use the pool specified at run time, only if the pool exists.

- Use the pool root.default.

Reordering Pool Placement Rules

To change the order in which pool placement rules are evaluated:

- Select . If the cluster has a YARN service, the tab displays. If the cluster has an Impala service enabled as described in Enabling or Disabling Impala Admission Control in Cloudera Manager, the tab displays.

- Click the YARN or Impala Admission Control tab.

- Click the Placement Rules tab.

- Click Reorder Placement Rules.

- Click Move Up or Move Down in a rule row.

- Click Save.

- Click Refresh Dynamic Resource Pools.

Example Placement Rules

The following figures show the default pool placement rule setting for YARN:

If a pool is specified at run time, that pool is used for the job and the pool is created if it did not exist. If no pool is specified at run time, a pool named according to the user submitting the job within the root.users parent pool is used. If that pool cannot be used (for example, because the root.users pool is a leaf pool), pool root.default is used.

If you move rule 2 down (which specifies to run the job in a pool named after the user running the job nested within the parent pool root.users), rule 2 becomes disabled because the previous rule (Use the pool root.default) is always satisfied.