Supported tables in SSB

SQL Stream Builder (SSB) supports different table types to ease development and access to all kinds of data sources. When creating tables in SQL Stream Builder, you have the option to either add them manually, import them automatically or create them using Flink SQL depending on the connector you want to use.

- Kafka Tables

  Apache Kafka tables represent data contained in a single Kafka topic in JSON, Avro, or CSV format. They can be defined using the Streaming SQL Console wizard, or you can create Kafka tables from the following predefined templates:

  - CDP Kafka

  - Kafka

  - Upsert Kafka

- Tables from Catalogs

  SSB supports Kudu, Hive, and Schema Registry as catalog providers. After you register a catalog using the Streaming SQL Console, its tables are automatically imported into SSB and can be used in the SQL window for computations.

- Flink Tables

  Flink SQL tables are tables created with the standard CREATE TABLE syntax, which gives you full flexibility in defining new or derived tables and views. You can either enter the statement directly in the SQL window or use one of the predefined DDL templates:

  - Blackhole

  - Datagen

  - Faker

  - Filesystem

  - JDBC

  - MySQL CDC

  - Oracle CDC

  - PostgreSQL CDC

- Webhook Tables

  Webhook tables can be used only to write results to. When you use a Webhook table, the result of your SQL query is sent to the webhook you specify.

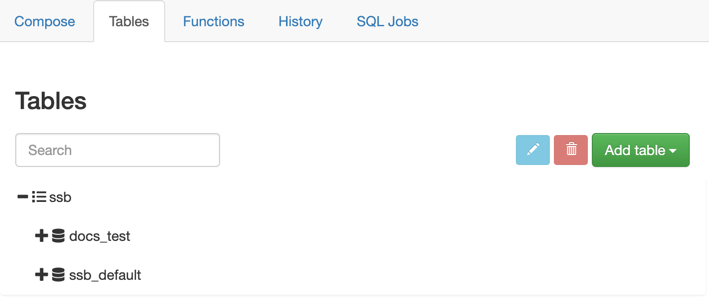
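As a rough illustration of the Flink Tables and Kafka Tables entries in the list above, the following sketch shows what CREATE TABLE statements entered in the SQL window might look like. It uses the connector options of open-source Apache Flink (datagen and kafka); the templates shipped with SSB, in particular CDP Kafka, may generate different properties. The table names, column names, topic, and broker address are hypothetical placeholders.

```sql
-- Hypothetical source table backed by the Datagen connector,
-- which generates random rows in memory (useful for testing).
CREATE TABLE orders_gen (
  order_id BIGINT,
  amount   DOUBLE
) WITH (
  'connector' = 'datagen',
  'rows-per-second' = '5'
);

-- Hypothetical Kafka table mapped to a single topic in JSON format.
-- The topic name and broker address are assumptions; replace them
-- with the values of your own cluster.
CREATE TABLE orders_kafka (
  order_id BIGINT,
  amount   DOUBLE
) WITH (
  'connector' = 'kafka',
  'topic' = 'orders',
  'properties.bootstrap.servers' = 'broker-1:9092',
  'format' = 'json'
);

-- Continuously write the generated rows to the Kafka topic.
INSERT INTO orders_kafka
SELECT order_id, amount
FROM orders_gen;
```

Because the Datagen source is unbounded, the INSERT statement runs as a continuous job until it is stopped.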

After creating your tables for the SQL jobs, you can review and manage them on the Tables tab in Streaming SQL Console. The created tables are organized based on the teams a user is assigned to.