Running Spark Applications on YARN

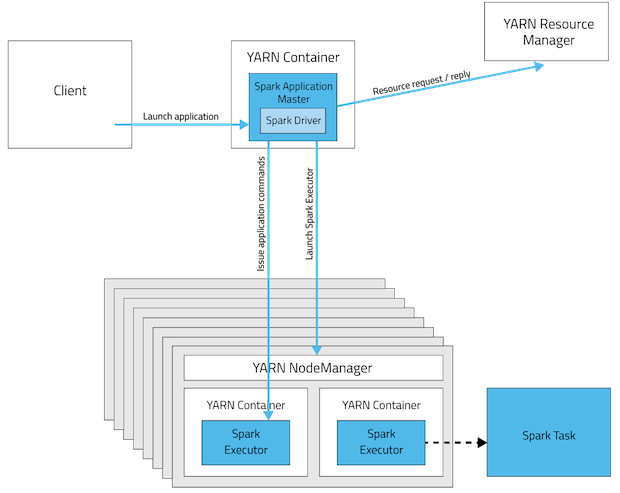

When Spark applications run on YARN, resource management, scheduling, and security are controlled by YARN. You can run an application in cluster mode or client mode.

When running Spark on YARN, each Spark executor runs as a YARN container. Where MapReduce schedules a container and starts a JVM for each task, Spark hosts multiple tasks within the same container. This approach makes task startup several orders of magnitude faster.

Deployment Modes

In YARN, each application instance has an ApplicationMaster process, which is the first container started for that application. The ApplicationMaster is responsible for requesting resources from the ResourceManager. Once the resources are allocated, it instructs NodeManagers to start containers on its behalf. ApplicationMasters eliminate the need for an active client: the process starting the application can terminate, and coordination continues from a process managed by YARN running on the cluster.

For the option to specify the deployment mode, see Command-Line Options.

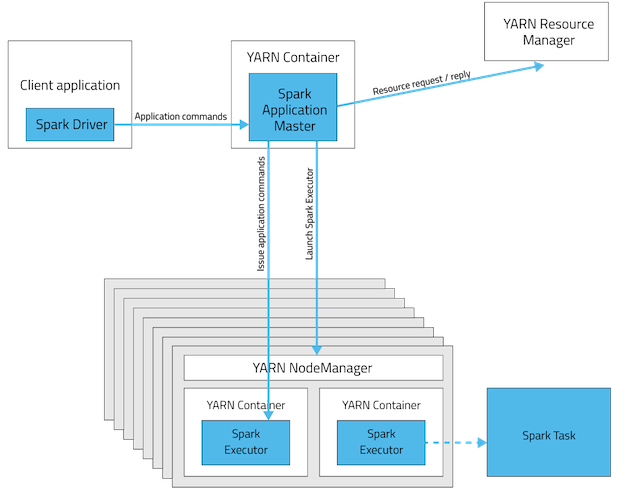

Cluster Deployment Mode

In cluster mode, the driver runs in the ApplicationMaster on a cluster host chosen by YARN. This means that the same process, which runs in a YARN container, is responsible for both driving the application and requesting resources from YARN. The client that launches the application doesn't need to continue running for the entire lifetime of the application.

Cluster mode is not well suited to using Spark interactively. Spark applications that require user input, such as spark-shell and pyspark, need the Spark driver to run inside the client process that initiates the Spark application.

Spark Options

In addition to the class implementing the application and the library containing that class, the following options apply when running Spark applications on YARN:

| Option | Description |
|---|---|
| --executor-cores | Number of processor cores to allocate on each executor. |
| --executor-memory | The maximum heap size to allocate to each executor. Alternatively, you can use the spark.executor.memory configuration parameter. |
| --num-executors | The total number of YARN containers to allocate for this application. Alternatively, you can use the spark.executor.instances configuration parameter. |
| --queue | The YARN queue to submit to. Default: default. |
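As a rough illustration of how these options combine on one command line, the sketch below wraps spark-submit in a small shell function. The function name submit_on_yarn and all resource values (2 cores, 4g, 10 executors) are hypothetical placeholders chosen for the example, not recommendations.

```shell
# Hypothetical helper: submit an application to YARN in cluster mode with
# explicit executor sizing. All numeric values are illustrative only.
submit_on_yarn() {
  app_class=$1   # class implementing the application
  app_jar=$2     # library (JAR) containing that class
  shift 2        # any remaining arguments are passed to the application

  spark-submit \
    --class "$app_class" \
    --master yarn \
    --deploy-mode cluster \
    --executor-cores 2 \
    --executor-memory 4g \
    --num-executors 10 \
    --queue default \
    "$app_jar" "$@"
}
```

A call might look like `submit_on_yarn com.example.MyApp my-app.jar 100`, where the class and JAR names are again made up for illustration.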

Configuring the Environment

Spark requires that the HADOOP_CONF_DIR or YARN_CONF_DIR environment variable point to the directory containing the client-side configuration files for the cluster. These configurations are used to write to HDFS and connect to the YARN ResourceManager. If you are using a Cloudera Manager deployment, these variables are configured automatically. If you are using an unmanaged deployment, ensure that you set the variables as described in Running Spark on YARN.
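For an unmanaged deployment, setting the variables could look like the following sketch. The path /etc/hadoop/conf is an assumption about where the client configuration lives; substitute the directory used on your hosts.

```shell
# Keep an existing value if one is already set; otherwise fall back to
# /etc/hadoop/conf, which is an assumed location, not a guaranteed one.
export HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-/etc/hadoop/conf}"
export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_CONF_DIR}"

# Warn early if the directory does not hold the YARN client configuration.
if [ ! -f "$YARN_CONF_DIR/yarn-site.xml" ]; then
  echo "warning: no yarn-site.xml found in $YARN_CONF_DIR" >&2
fi
```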

Running a Spark Shell Application on YARN

To run the spark-shell or pyspark client on YARN, use the --master yarn --deploy-mode client flags when you start the application.

If you are using a Cloudera Manager deployment, these properties are configured automatically.

Submitting Spark Applications to YARN

To submit an application to YARN, use the spark-submit script and specify the --master yarn flag. For other spark-submit options, see spark-submit Options.

Monitoring and Debugging Spark Applications

To obtain information about Spark application behavior, you can consult YARN logs and the Spark web application UI. These two methods provide complementary information. For information on how to view logs created by Spark applications and the Spark web application UI, see Monitoring Spark Applications.
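One way to consult the YARN logs from the command line is the yarn logs command. The sketch below assumes that log aggregation is enabled on the cluster; the function name save_app_logs and the default output file name are hypothetical.

```shell
# Hypothetical helper: save the aggregated YARN logs of one application
# to a local file for later inspection.
save_app_logs() {
  app_id=$1                      # for example application_1400000000000_0001
  out_file=${2:-$app_id.log}     # default file name is an assumption
  yarn logs -applicationId "$app_id" > "$out_file"
}
```

The application ID is the one reported by spark-submit or by yarn application -list.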

Example: Running SparkPi on YARN

Running SparkPi in YARN Cluster Mode

- CDH 5.2 and lower

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster SPARK_HOME/examples/lib/spark-examples.jar 10

- CDH 5.3 and higher

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster SPARK_HOME/lib/spark-examples.jar 10

The command continues to print status until the job finishes or you press Control-C. Unlike in client mode, terminating the spark-submit process in cluster mode does not terminate the Spark application. To monitor the status of the running application, run yarn application -list.
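Because the application keeps running after spark-submit exits, it can help to look up its ID by name. The sketch below filters the output of yarn application -list. It assumes the tab-separated row layout that the command normally prints (ID in the first column, name in the second), and the function name app_ids_by_name is hypothetical.

```shell
# Hypothetical helper: print the IDs of listed YARN applications whose
# name contains the given text. Header and summary lines are skipped
# because their first column does not contain "application_".
app_ids_by_name() {
  yarn application -list 2>/dev/null |
    awk -F '\t' -v name="$1" '
      $1 ~ /application_/ && index($2, name) > 0 {
        gsub(/^[ ]+|[ ]+$/, "", $1)   # strip column padding
        print $1
      }'
}
```

An ID returned by `app_ids_by_name "Spark Pi"` can then be passed to yarn application -status or, to stop the application, to yarn application -kill.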

Running SparkPi in YARN Client Mode

- CDH 5.2 and lower

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client SPARK_HOME/examples/lib/spark-examples.jar 10

- CDH 5.3 and higher

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client SPARK_HOME/lib/spark-examples.jar 10

Enabling Dynamic Executor Allocation

Spark can dynamically increase and decrease the number of executors for an application if the resource requirements for the application change over time. To enable dynamic allocation, set spark.dynamicAllocation.enabled to true. Specify the minimum number of executors to allocate to an application with the spark.dynamicAllocation.minExecutors parameter, and the maximum number with the spark.dynamicAllocation.maxExecutors parameter. Set the initial number of executors with the spark.dynamicAllocation.initialExecutors parameter. Do not use the --num-executors command-line argument or the spark.executor.instances parameter; they are incompatible with dynamic allocation. For more information, see dynamic resource allocation.
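The parameters above can also be passed per submission with --conf, as in the following sketch. The function name submit_dynamic is hypothetical, and the executor counts in the usage note are illustrative. Dynamic allocation on YARN generally also relies on the external shuffle service (spark.shuffle.service.enabled), which is outside the scope of this section, so check that it is configured on your cluster.

```shell
# Hypothetical helper: submit with dynamic executor allocation enabled.
# Neither --num-executors nor spark.executor.instances is passed, since
# both are incompatible with dynamic allocation.
submit_dynamic() {
  min_exec=$1
  max_exec=$2
  init_exec=$3
  shift 3   # remaining arguments: --class, JAR, application arguments

  spark-submit \
    --master yarn \
    --deploy-mode cluster \
    --conf spark.dynamicAllocation.enabled=true \
    --conf spark.dynamicAllocation.minExecutors="$min_exec" \
    --conf spark.dynamicAllocation.maxExecutors="$max_exec" \
    --conf spark.dynamicAllocation.initialExecutors="$init_exec" \
    "$@"
}
```

For example, `submit_dynamic 2 20 4 --class org.apache.spark.examples.SparkPi SPARK_HOME/lib/spark-examples.jar 10` would start with 4 executors and let Spark scale between 2 and 20.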

Optimizing YARN Mode

Unmanaged CDH Deployments

In CDH deployments not managed by Cloudera Manager, Spark copies the Spark assembly JAR file to HDFS each time you run spark-submit. You can avoid doing this copy in one of the following ways:

- Set spark.yarn.jar to the local path to the assembly JAR:

  - package installation - local:/usr/lib/spark/lib/spark-assembly.jar

  - parcel installation - local:/opt/cloudera/parcels/CDH/lib/spark/lib/spark-assembly.jar

- Upload the JAR and configure the JAR location:

  - Manually upload the Spark assembly JAR file to HDFS:

        $ hdfs dfs -mkdir -p /user/spark/share/lib
        $ hdfs dfs -put SPARK_HOME/assembly/lib/spark-assembly_*.jar /user/spark/share/lib/spark-assembly.jar

    You must manually upload the JAR each time you upgrade Spark to a new minor CDH release.

  - Set spark.yarn.jar to the HDFS path:

        spark.yarn.jar=hdfs://namenode:8020/user/spark/share/lib/spark-assembly.jar
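As an alternative to editing a configuration file, spark.yarn.jar can be supplied for a single submission with --conf. The sketch below assumes the assembly JAR is already available at the given location; the function name submit_with_assembly is hypothetical.

```shell
# Hypothetical helper: point spark.yarn.jar at an existing assembly JAR
# for one submission, so spark-submit does not copy the JAR to HDFS again.
submit_with_assembly() {
  assembly_uri=$1   # a local: or hdfs: URI such as the ones listed above
  shift             # remaining arguments: --class, JAR, application arguments
  spark-submit --master yarn --conf spark.yarn.jar="$assembly_uri" "$@"
}
```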
