Chapter 1. Workflow Manager Basics

Workflow Manager, which can be accessed as a View in Ambari, allows you to create and schedule workflows and monitor workflow jobs. It is based on the Apache Oozie workflow engine, which lets users connect big data processing tasks into a defined workflow and automate their execution. Workflow Manager integrates with the Hortonworks Data Platform (HDP) and supports Hadoop jobs for Hive, Sqoop, Pig, MapReduce, Spark, and more. In addition, it can be used to perform Java, Linux shell, distcp, SSH, email, and other operations.

The following content describes the design component and the dashboard component that are included with Workflow Manager.

Workflow Manager Design Component

Workflow Manager Dashboard Component

Workflow Manager Design Component

You can use the design component of Workflow Manager to create and graphically lay out your workflow. You can select action nodes and control nodes and implement them in a defined sequence to ingest, transform, and analyze data. Action nodes trigger a computation or processing task. Control nodes control the workflow execution path. You can add Oozie actions and other operations as action nodes on the workflow, and you can add fork and decision points as control nodes to your workflow. You can include a maximum of 400 nodes in a workflow.
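As a rough illustration of how action nodes and control nodes fit together, the following sketch shows the kind of Oozie workflow XML that a designer layout could correspond to. The workflow name, node names, HDFS paths, the inputDir and nameNode properties, and the schema version are all assumptions made for this example; the XML that Workflow Manager generates for your workflow may differ.

```xml
<!-- Illustrative sketch only: names, paths, and schema version are assumptions. -->
<workflow-app xmlns="uri:oozie:workflow:0.5" name="example-wf">
  <start to="split"/>

  <!-- Control node: start two branches in parallel. -->
  <fork name="split">
    <path start="make-staging-dir"/>
    <path start="clear-old-output"/>
  </fork>

  <!-- Action nodes: each one triggers a processing task (here, HDFS operations). -->
  <action name="make-staging-dir">
    <fs>
      <mkdir path="${nameNode}/user/${wf:user()}/staging"/>
    </fs>
    <ok to="merge"/>
    <error to="fail"/>
  </action>

  <action name="clear-old-output">
    <fs>
      <delete path="${nameNode}/user/${wf:user()}/output"/>
    </fs>
    <ok to="merge"/>
    <error to="fail"/>
  </action>

  <!-- Control node: wait until both branches have finished. -->
  <join name="merge" to="check-input"/>

  <!-- Control node: choose the execution path based on a condition. -->
  <decision name="check-input">
    <switch>
      <case to="stage-input">${fs:exists(inputDir)}</case>
      <default to="end"/>
    </switch>
  </decision>

  <action name="stage-input">
    <fs>
      <move source="${inputDir}" target="${nameNode}/user/${wf:user()}/staging/input"/>
    </fs>
    <ok to="end"/>
    <error to="fail"/>
  </action>

  <kill name="fail">
    <message>Workflow failed at node ${wf:lastErrorNode()}</message>
  </kill>
  <end name="end"/>
</workflow-app>
```

In this sketch, the fork and join nodes bracket the parallel branches, and every action declares both an ok and an error transition so that the execution path is defined for either outcome.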

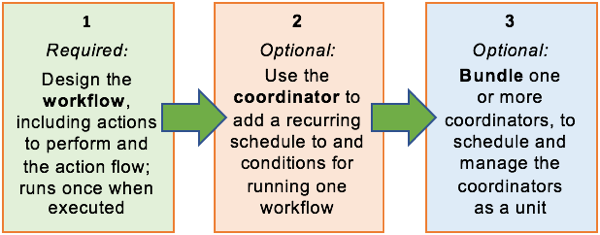

From the designer, you can also schedule workflows using coordinators, and group multiple coordinators into bundles. A coordinator enables you to automate the recurring execution of a workflow based on time, an external event, or the availability of specific data files. A coordinator defines parameters for a single workflow only. Coordinators make it easy to manage concurrency and keep track of the completion of each workflow instance. The coordinator controls how many instances of a workflow can execute in parallel, and how many instances can be placed in a waiting state before the next workflow instance runs.
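The scheduling and concurrency settings described above can be sketched as an Oozie coordinator definition. This is a minimal example under stated assumptions: the coordinator name, the dataset name and path layout, the numeric values, and the startTime, endTime, nameNode, and workflowAppPath properties are hypothetical, and the schema version is assumed.

```xml
<!-- Illustrative sketch only: names, values, and schema version are assumptions. -->
<coordinator-app xmlns="uri:oozie:coordinator:0.4" name="daily-coord"
                 frequency="${coord:days(1)}"
                 start="${startTime}" end="${endTime}" timezone="UTC">
  <controls>
    <!-- Minutes an instance may wait for its input before timing out. -->
    <timeout>60</timeout>
    <!-- Maximum number of workflow instances running in parallel. -->
    <concurrency>2</concurrency>
    <!-- Order in which waiting instances are started. -->
    <execution>FIFO</execution>
    <!-- Maximum number of instances allowed in the waiting state. -->
    <throttle>4</throttle>
  </controls>

  <!-- Data availability trigger: one dataset instance per day. -->
  <datasets>
    <dataset name="logs" frequency="${coord:days(1)}"
             initial-instance="${startTime}" timezone="UTC">
      <uri-template>${nameNode}/data/logs/${YEAR}/${MONTH}/${DAY}</uri-template>
      <done-flag>_SUCCESS</done-flag>
    </dataset>
  </datasets>
  <input-events>
    <data-in name="input" dataset="logs">
      <instance>${coord:current(0)}</instance>
    </data-in>
  </input-events>

  <!-- A coordinator wraps exactly one workflow. -->
  <action>
    <workflow>
      <app-path>${workflowAppPath}</app-path>
    </workflow>
  </action>
</coordinator-app>
```

Here the frequency attribute provides the time-based trigger, while the dataset and input event make each run wait until that day's data is present.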

Coordinator jobs can be combined to create a chain of jobs that can be managed as a single entity. This logical chaining together of multiple, interdependent coordinator jobs is referred to as a bundle, also called a data application pipeline. By using bundled coordinator jobs, the output of one workflow that runs at one frequency can be used as the initial input for another job that runs at a different frequency. You can even bundle the same workflow with different coordinator schedules. To create a bundle, you must configure one or more workflows as coordinator jobs, then configure the coordinator jobs as part of a bundle.
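A bundle definition can be sketched as follows. The bundle name, the coordinator names, and the hourlyCoordPath and dailyCoordPath properties are hypothetical, and the schema version is an assumption; the example only shows how two coordinator jobs that run at different frequencies are grouped so that they can be managed as one entity.

```xml
<!-- Illustrative sketch only: names, paths, and schema version are assumptions. -->
<bundle-app xmlns="uri:oozie:bundle:0.2" name="example-bundle">
  <!-- Coordinator that runs a workflow at one frequency (for example, hourly). -->
  <coordinator name="hourly-ingest">
    <app-path>${hourlyCoordPath}</app-path>
  </coordinator>

  <!-- Coordinator that runs at a different frequency and can consume
       the output produced by the first one. -->
  <coordinator name="daily-report">
    <app-path>${dailyCoordPath}</app-path>
  </coordinator>
</bundle-app>
```

The dependency between the two jobs is not declared in the bundle itself. In a setup like this one, it would typically be expressed through the datasets that each coordinator produces and consumes.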

More Information

You can read further details about workflows, coordinators, and bundles in the following Apache documentation:

Workflow Manager Dashboard Component

The dashboard provides monitoring capabilities for jobs submitted to Workflow Manager. There are three types of jobs in Workflow Manager: workflow jobs, coordinator jobs, and bundle jobs. Each job type is displayed in a separate table in the dashboard.

You can perform actions such as run, kill, suspend, and resume on individual jobs, or you can select multiple jobs of a single type and perform these actions on several jobs at once.

From the dashboard, you can access details about a job, view job logs, and view the XML structure of the job.

Workflow jobs are launched from a client and run on the server engine. The Oozie metadata database contains the workflow definitions, variables, state information, and other data required to run the workflow jobs.

Workflow Action Types

Each action in a workflow represents a job to be run. For example, a Hive action runs a Hive job. Most actions in a workflow are executed asynchronously: the workflow engine starts the task, and the workflow job waits until the task completes before moving to the next action. However, file system operations on HDFS are executed synchronously.

Identical workflows can be run concurrently when properly parameterized. The workflow engine can detect completion of computation and processing actions. Each action is given a unique callback URL. The action invokes the URL when its task is complete. The workflow engine also has a mechanism to poll actions for completion, for cases in which the action fails to invoke the callback URL or the type of task cannot invoke the callback URL upon completion.

Workflow action types

| Action | Description | Configuration and notes |
| --- | --- | --- |
| Hive Action | Used for asynchronously executing Hive and Hive2 scripts and Sqoop jobs. The workflow job waits until the Hive job completes before continuing to the next action. | To run the Hive job, you have to configure the Hive action with the resourceManager, nameNode, and Hive script elements, as well as other necessary parameters and configuration. |
| Hive2 Action | The Hive2 action runs Beeline to connect to Hive Server 2. The workflow job will wait until the Hive Server 2 job completes before continuing to the next action. | To run the Hive Server 2 job, you have to configure the Hive2 action with the resourceManager, nameNode, jdbc-url, and password elements, and either Hive script or query elements, as well as other necessary parameters and configuration. |
| Sqoop Action | The workflow job waits until the Sqoop job completes before continuing to the next action. | The Sqoop action requires Apache Hadoop 0.23. To run the Sqoop job, you have to configure the Sqoop action with the resourceManager, nameNode, and Sqoop command or arg elements, as well as configuration. |
| Pig Action | The workflow job waits until the Pig job completes before continuing to the next action. | The Pig action has to be configured with the resourceManager, nameNode, Pig script, and other necessary parameters and configuration to run the Pig job. |
| Sub-workflow (Sub-wf) Action | The sub-workflow action runs a child workflow job. The parent workflow job waits until the child workflow job has completed. | The child workflow job can be in the same Oozie system or in another Oozie system. |
| Java Action | Java applications are executed in the Hadoop cluster as map-reduce jobs with a single Mapper task. The workflow job waits until the Java application completes its execution before continuing to the next action. | The Java action has to be configured with the resourceManager, nameNode, main Java class, JVM options, and arguments. |
| Shell Action | Shell commands must complete before going to the next action. | The standard output of the shell command can be used to make decisions. |
| DistCp (distcp) Action | The DistCp action uses Hadoop "distributed copy" to copy files from one cluster to another or within the same cluster. | Both Hadoop clusters have to be configured with proxyuser for the Oozie process. IMPORTANT: The DistCp action may not work properly with all configurations (secure, insecure) in all versions of Hadoop. |
| MapReduce (MR) Action | The workflow job waits until the Hadoop MapReduce job completes before continuing to the next action in the workflow. | |
| SSH Action | Runs a remote secure shell command on a remote machine. The workflow waits for the SSH command to complete. | SSH commands are executed in the home directory of the defined user on the remote host. Important: SSH actions are deprecated in Oozie schema 0.1, and removed in Oozie schema 0.2. |
| Spark Action | The workflow job waits until the Spark job completes before continuing to the next action. | To run the Spark job, you have to configure the Spark action with the resourceManager, nameNode, and Spark master elements, as well as other necessary elements, arguments, and configuration. Important: The yarn-client execution mode for the Oozie Spark action is no longer supported and has been removed. Workflow Manager and Oozie continue to support yarn-cluster mode. |
| Email Actions | Email jobs are sent synchronously. | An email must contain an address, a subject and a body. |
| HDFS (FS) Action | Allows manipulation of files and directories in HDFS. File system operations are executed synchronously from within the FS action, but asynchronously within the overall workflow. | |
| Custom Action | Allows you to create a customized action by entering the appropriate XML. | Ensure that the JAR containing the Java code and the XML schema definition (XSD) for the custom action have been deployed. The XSD must also be specified in the Oozie configuration. |
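To make the configuration requirements in the table more concrete, the following sketch shows a Hive action followed by an email notification on failure. It is an example under stated assumptions, not the XML that Workflow Manager necessarily produces: the workflow and node names, the script name report.q, the INPUT parameter, the email address, and the resourceManager, nameNode, and inputDir properties are hypothetical, and the schema versions are assumed. In the schema versions used here, the resourceManager value is supplied through the job-tracker element.

```xml
<!-- Illustrative sketch only: names, values, and schema versions are assumptions. -->
<workflow-app xmlns="uri:oozie:workflow:0.5" name="hive-example-wf">
  <start to="hive-report"/>

  <!-- Hive action: needs the resource manager, the name node, and a Hive script. -->
  <action name="hive-report">
    <hive xmlns="uri:oozie:hive-action:0.6">
      <job-tracker>${resourceManager}</job-tracker>
      <name-node>${nameNode}</name-node>
      <script>report.q</script>
      <param>INPUT=${inputDir}</param>
    </hive>
    <ok to="end"/>
    <error to="notify"/>
  </action>

  <!-- Email action: must contain an address, a subject, and a body. -->
  <action name="notify">
    <email xmlns="uri:oozie:email-action:0.2">
      <to>ops@example.com</to>
      <subject>Workflow ${wf:id()} failed</subject>
      <body>Node ${wf:lastErrorNode()} failed: ${wf:errorMessage(wf:lastErrorNode())}</body>
    </email>
    <ok to="fail"/>
    <error to="fail"/>
  </action>

  <kill name="fail">
    <message>Hive report workflow failed</message>
  </kill>
  <end name="end"/>
</workflow-app>
```

The workflow job waits for the Hive job to finish before following either the ok or the error transition. The email action then runs synchronously before the workflow is killed.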

More Information

For more information about actions, see the following Apache documentation: