Getting started with Kafka Connect

Get started with Kafka Connect in Cloudera Streaming Community Edition.

Kafka Connect is a tool for streaming data between Apache Kafka and other systems in a reliable and scalable fashion. Kafka Connect makes it simple to quickly define connectors that move large collections of data into and out of Kafka. Source connectors can ingest entire databases or collect metrics from all your application servers into Kafka topics, making the data available for stream processing with low latency. Sink connectors can deliver data from Kafka topics into secondary storage and query systems or into batch systems for offline analysis.

Cloudera Streaming Community Edition includes many different connectors. The majority of these are developed by Cloudera, but publicly available connectors are included as well. Each connector covers a specific use case for streaming data. Connectors are deployed, managed, and monitored using Streams Messaging Manager.

The following tutorial walks you through a simple use case where data is moved from a Kafka topic into a PostgreSQL database using the JDBC Sink connector, which is one of the Cloudera-developed connectors shipped with Cloudera Streaming Community Edition.

Before you begin

List the containers with docker ps to find the names of the Kafka and PostgreSQL containers. For example:

    docker ps -a --format '{{.ID}}\t{{.Names}}' --filter "name=kafka.(\d)" --filter "name=postgres"

The Kafka container will be called either csce-kafka-1 or csce_kafka_1.

The PostgreSQL container will be called either csce-postgresql-1 or csce_postgresql_1.
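Because each container name can appear in either the hyphenated or the underscored form, it can help to resolve both names once and reuse them in later commands. The following is a minimal sketch, not part of the product: the function names `pick_container` and `resolve_containers` and the variables `KAFKA_CONTAINER` and `POSTGRES_CONTAINER` are hypothetical, and the patterns assume only the four names listed above.

```shell
#!/bin/sh
# Pick the first name read from stdin that matches an extended regex.
pick_container() {
  grep -E "$1" | head -n 1
}

# Resolve both container names from the running containers.
# Not called here; run it on a host where the deployment is up.
resolve_containers() {
  names=$(docker ps --format '{{.Names}}')
  # Accept both the hyphenated and the underscored naming variant.
  KAFKA_CONTAINER=$(printf '%s\n' "$names" | pick_container '^csce[-_]kafka[-_]1$')
  POSTGRES_CONTAINER=$(printf '%s\n' "$names" | pick_container '^csce[-_]postgresql[-_]1$')
  export KAFKA_CONTAINER POSTGRES_CONTAINER
}
```

After calling `resolve_containers`, `echo "$KAFKA_CONTAINER"` should print whichever of the two Kafka names exists on your host.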

Creating a database and table in PostgreSQL

To stream data using the JDBC Sink connector, a destination is required for that data. In this tutorial, the database and table are created using the PostgreSQL instance already deployed in Cloudera Streaming Community Edition.

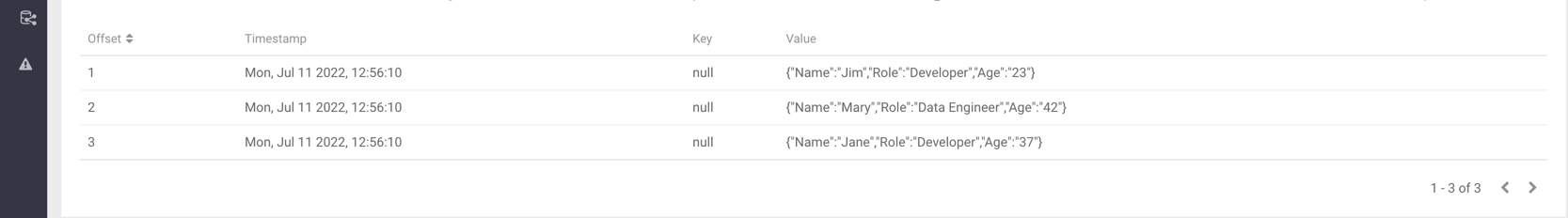
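As a rough illustration of this step, the database and table could be created from the host by piping SQL into psql inside the PostgreSQL container. Everything specific here is an assumption made for the sketch: the database name `connect_demo_db`, the table `connect_demo` and its columns, the `postgres` user, and the `POSTGRES_CONTAINER` variable, which is expected to hold the PostgreSQL container name identified under "Before you begin".

```shell
#!/bin/sh
# Emit the SQL for a hypothetical destination table (names are illustrative).
table_sql() {
  cat <<'EOF'
CREATE TABLE IF NOT EXISTS connect_demo (
  id INTEGER PRIMARY KEY,
  payload TEXT
);
EOF
}

# Create the database, then the table inside it.
# Not called here; it needs the running PostgreSQL container.
# The "postgres" user is an assumption about the deployment.
create_destination() {
  docker exec -i "$POSTGRES_CONTAINER" \
    psql -U postgres -c 'CREATE DATABASE connect_demo_db;'
  table_sql | docker exec -i "$POSTGRES_CONTAINER" \
    psql -U postgres -d connect_demo_db
}
```

Keeping the SQL in its own function makes it easy to review or adapt the table definition before anything is run against the database.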

Creating a topic and producing messages

Before you can deploy the JDBC Sink connector, you need a Kafka topic that contains some messages. The connector connects to this topic and streams the data from the topic into the PostgreSQL database. You create the topic using Streams Messaging Manager and produce the messages using the Kafka console producer.

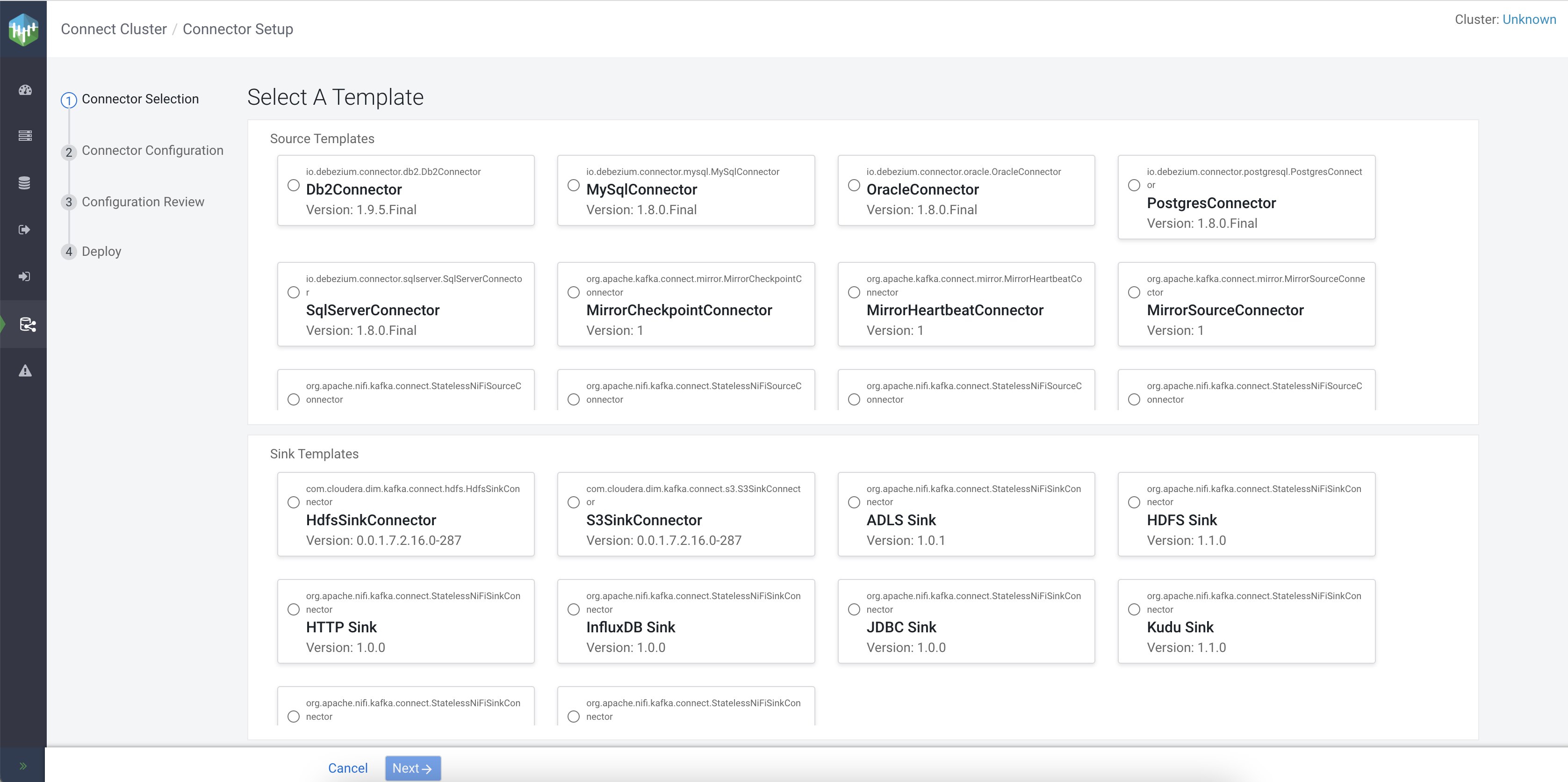

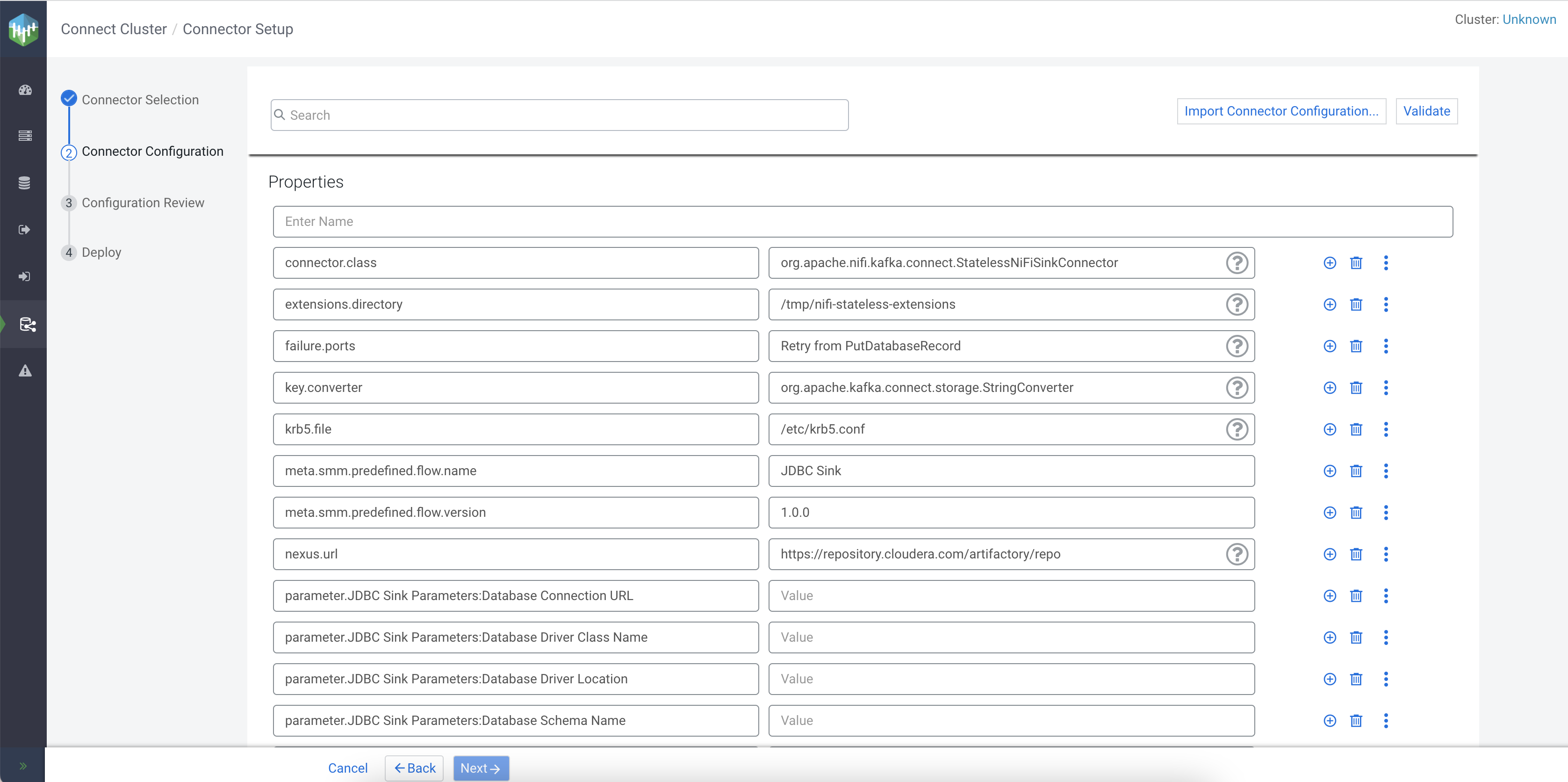

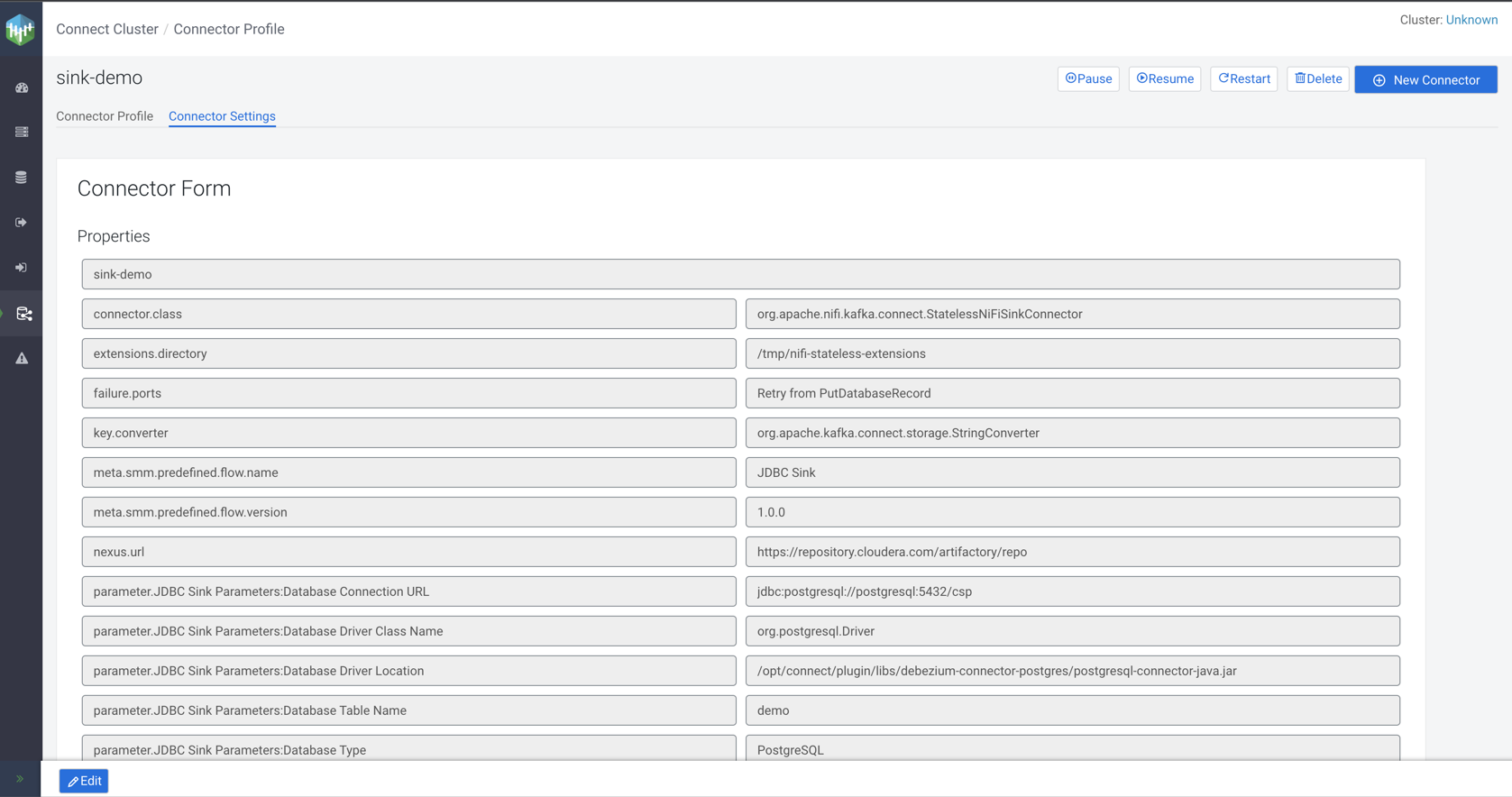
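The message production step can be sketched as a small script that generates a few test records and pipes them into the console producer inside the Kafka container. This is only an illustration under stated assumptions: the JSON shape of the messages, the script name `kafka-console-producer.sh` being on the container's PATH, the broker address `localhost:9092`, and the `KAFKA_CONTAINER` variable are all hypothetical, and the message format that the connector actually expects is not specified in this section.

```shell
#!/bin/sh
# Print three illustrative JSON records, one per line.
sample_messages() {
  i=1
  while [ "$i" -le 3 ]; do
    printf '{"id": %d, "payload": "message %d"}\n' "$i" "$i"
    i=$((i + 1))
  done
}

# Send the records to the topic given as the first argument.
# Not called here; it needs the running Kafka container.
# Script location and broker address are assumptions about the deployment.
produce_messages() {
  sample_messages | docker exec -i "$KAFKA_CONTAINER" \
    kafka-console-producer.sh --bootstrap-server localhost:9092 --topic "$1"
}
```

With the topic already created in Streams Messaging Manager, a call such as `produce_messages my-topic` would send the three records, where `my-topic` stands in for the name you chose.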

Deploying the connector

After both the topic and the database are set up, it's time to deploy the JDBC Sink connector using the Streams Messaging Manager UI.

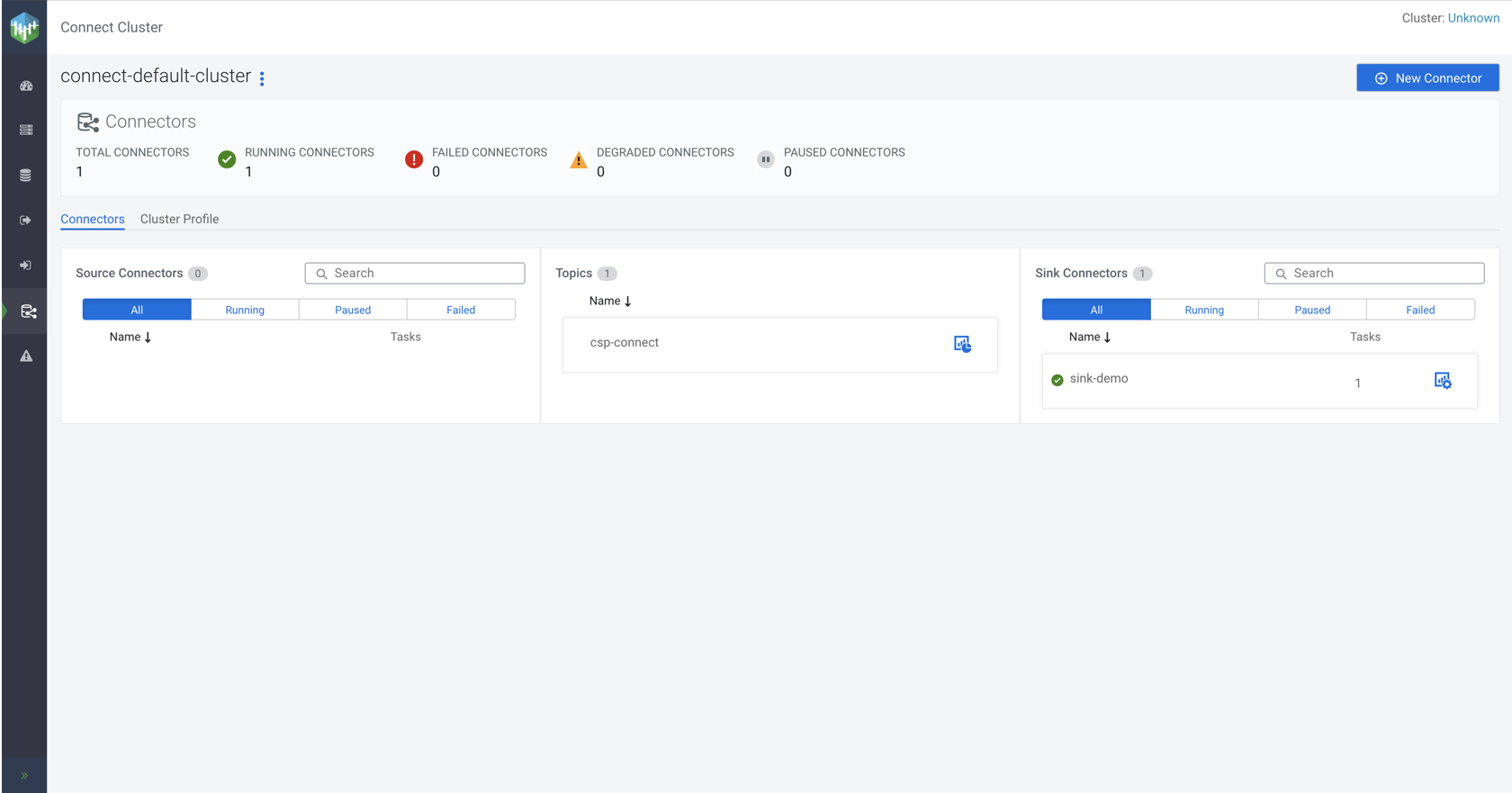

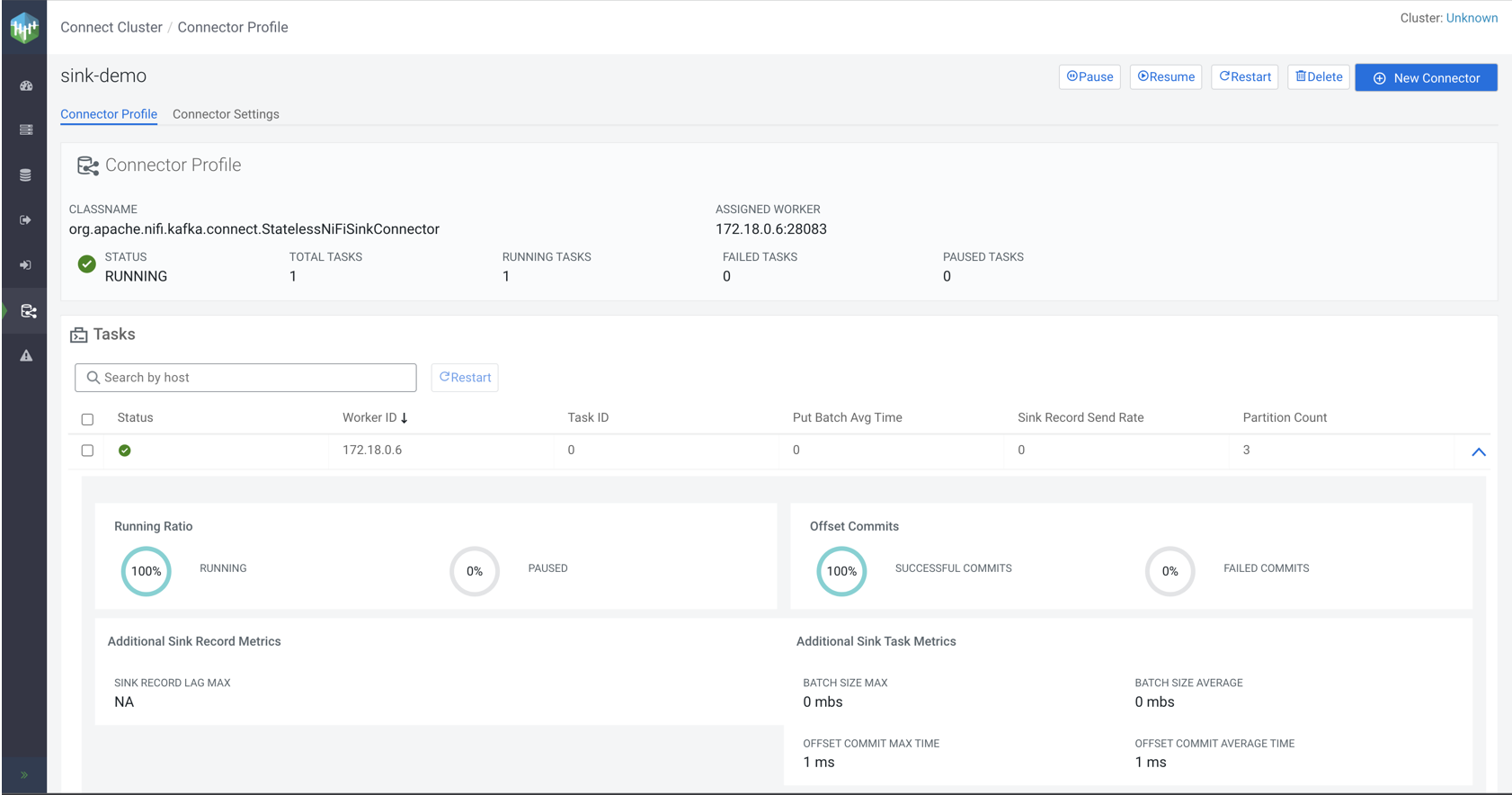

Monitoring and managing the connector

Once the connector is deployed, you can monitor its activity using the Streams Messaging Manager UI.