Known Issues in Hue

This topic describes known issues and workarounds for using Hue in this release of Cloudera Runtime.

- CDPD-18959: Because tablib for Hue in CDP was upgraded from 0.10.x to 0.14.x, a release line generally used with Python 3, downloading a dataset that contains a special character as CSV fails with the error message "UnicodeDecodeError: 'ascii' codec can't decode byte 0xef in position 8: ordinal not in range(128)"

- Workaround:

- cd /opt/cloudera/parcels/CDH/lib/

- Back up the hue folder: cp -R hue hue_orgi

- cd hue

- Install Python packages via pip: pip install wheel

- Install Python packages for Hue:

- ./build/env/bin/pip install wheel

- ./build/env/bin/pip install setuptools==44.1.0

- ./build/env/bin/pip install tablib==0.12.1

- Restart Hue and check again.
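The workaround steps above can be collected into a small script. This is a minimal sketch, not a Cloudera-provided tool: the `run` and `apply_tablib_workaround` function names and the `HUE_LIB_DIR` and `DRY_RUN` variables are illustrative assumptions, while the paths and package versions are the ones listed in the steps. Nothing runs until the function is called.

```shell
#!/usr/bin/env bash
# Sketch of the CDPD-18959 workaround steps wrapped in a function.
# HUE_LIB_DIR and DRY_RUN are hypothetical variables used only by this sketch.

run() {
  # In dry-run mode, print the command; otherwise execute it in the current shell.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

apply_tablib_workaround() {
  local lib_dir="${HUE_LIB_DIR:-/opt/cloudera/parcels/CDH/lib}"

  run cd "$lib_dir" || return 1
  run cp -R hue hue_orgi || return 1                        # back up the hue folder
  run cd hue || return 1
  run pip install wheel || return 1                         # system pip
  run ./build/env/bin/pip install wheel || return 1         # Hue virtualenv pip
  run ./build/env/bin/pip install setuptools==44.1.0 || return 1
  run ./build/env/bin/pip install tablib==0.12.1 || return 1
}
```

Setting `DRY_RUN=1` before calling `apply_tablib_workaround` prints each command instead of running it, which is a cheap way to review the sequence on a host before changing anything. After a real run, Hue still has to be restarted as described in the last step.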

- Hive and Impala query editors do not work with TLS 1.2

- Problem: If Hive or Impala engines are using TLS version 1.2 on your CDP cluster, then you won’t be able to run queries from the Hue Hive or Impala query editor.

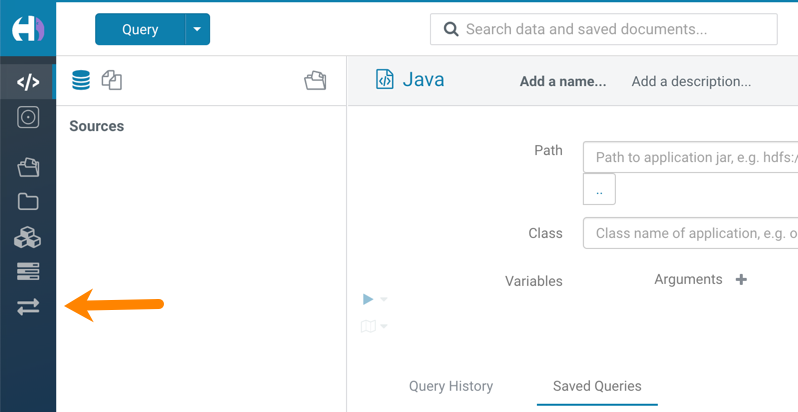

- Hue Importer is not supported in the Data Engineering template

- When you create a Data Hub cluster using the Data Engineering template, the Importer application is not supported in Hue.

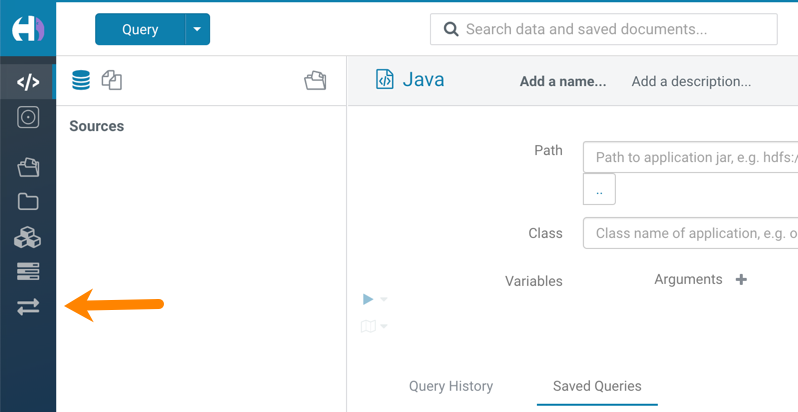

Figure 1. Hue web UI showing Importer icon on the left assist panel


- CDPD-3501: Hue-Atlas configuration information is missing on Data Mart clusters.

- Problem: The configuration file hive-conf%2Fatlas-application.properties is missing on Data Mart clusters because Apache Hive is not installed. This properties file is needed for the Hue integration with Apache Atlas.

- BalancerMember worker hostname too long error

- You may see the following error message while starting the Hue Load Balancer:

BalancerMember worker hostname (xxx-xxxxxxxx-xxxxxxxxxxx-xxxxxxx.xxxxxx-xxxxxx-xxxxxx.example.site) too long.

Cloudera Manager displays this error when you create a Data Hub cluster using the Data Engineering template and the Hue Load Balancer worker node name has exceeded 64 characters. In a CDP Public Cloud deployment, the system automatically generates the Load Balancer worker node name through AWS or Azure.

For example, if you specify cdp-123456-scalecluster as the cluster name, CDP creates cdp-123456-scalecluster-master2.repro-aw.a123-4a5b.example.site as the worker node name.
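Because the generated name grows with the cluster name, it can help to check a candidate worker node name against the 64-character limit before or after creating the cluster. The following is a minimal sketch; `MAX_HOSTNAME_LEN`, `hostname_too_long`, and `check_hostname` are illustrative names, not part of CDP or Cloudera Manager.

```shell
# Sketch: flag hostnames longer than the 64-character limit described above.
# All names below are hypothetical helpers for illustration only.
MAX_HOSTNAME_LEN=64

hostname_too_long() {
  # Succeeds (exit status 0) when the given name exceeds the limit.
  [ "${#1}" -gt "$MAX_HOSTNAME_LEN" ]
}

check_hostname() {
  # Print a one-line verdict with the measured length.
  if hostname_too_long "$1"; then
    echo "too long (${#1} characters): $1"
  else
    echo "ok (${#1} characters): $1"
  fi
}
```

On a Linux worker node, `check_hostname "$(hostname -f)"` would report the length of the fully qualified name, assuming `hostname -f` returns the same name that the Load Balancer configuration uses.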

Unsupported features

- Importing and exporting Oozie workflows across clusters and between different CDH versions is not supported

- You can export Oozie workflows, schedules, and bundles from Hue and import them only within the same cluster if the cluster is unchanged. You can migrate bundle and coordinator jobs with their workflows only if their arguments have not changed between the old and the new cluster. These arguments include, for example, hostnames, NameNode, Resource Manager names, YARN queue names, and all the other parameters defined in the workflow.xml and job.properties files.

Using the import-export feature to migrate data between clusters is not recommended. To migrate data between different versions of CDH, for example, from CDH 5 to CDP 7, you must take a dump of the Hue database on the old cluster, restore it on the new cluster, and set up the database in the new environment. Also, the authentication method on the old and the new cluster should be the same because the Oozie workflows are tied to a user ID, and the exact user ID needs to be present in the new environment so that when a user logs into Hue, they can access their respective workflows.

- PySpark and SparkSQL are not supported with Livy in Hue

- Hue does not support configuring and using PySpark and SparkSQL with Livy in CDP Private Cloud Base.

Technical Service Bulletins

- TSB 2021-487: Cloudera Hue is vulnerable to Cross-Site Scripting attacks

- Multiple Cross-Site Scripting (XSS) vulnerabilities in Cloudera Hue have been found. They allow JavaScript code injection and execution in the application context.

- CVE-2021-29994 - The Add Description field in the Table schema browser does not sanitize user inputs as expected.

- CVE-2021-32480 - The Default Home direct button in Filebrowser is also susceptible to XSS attacks.

- CVE-2021-32481 - The Error snippet dialog of the Hue UI does not sanitize user inputs.

- Knowledge article

- For the latest update on this issue, see the corresponding Knowledge article: TSB 2021-487: Cloudera Hue is vulnerable to Cross-Site Scripting attacks (CVE-2021-29994, CVE-2021-32480, CVE-2021-32481)