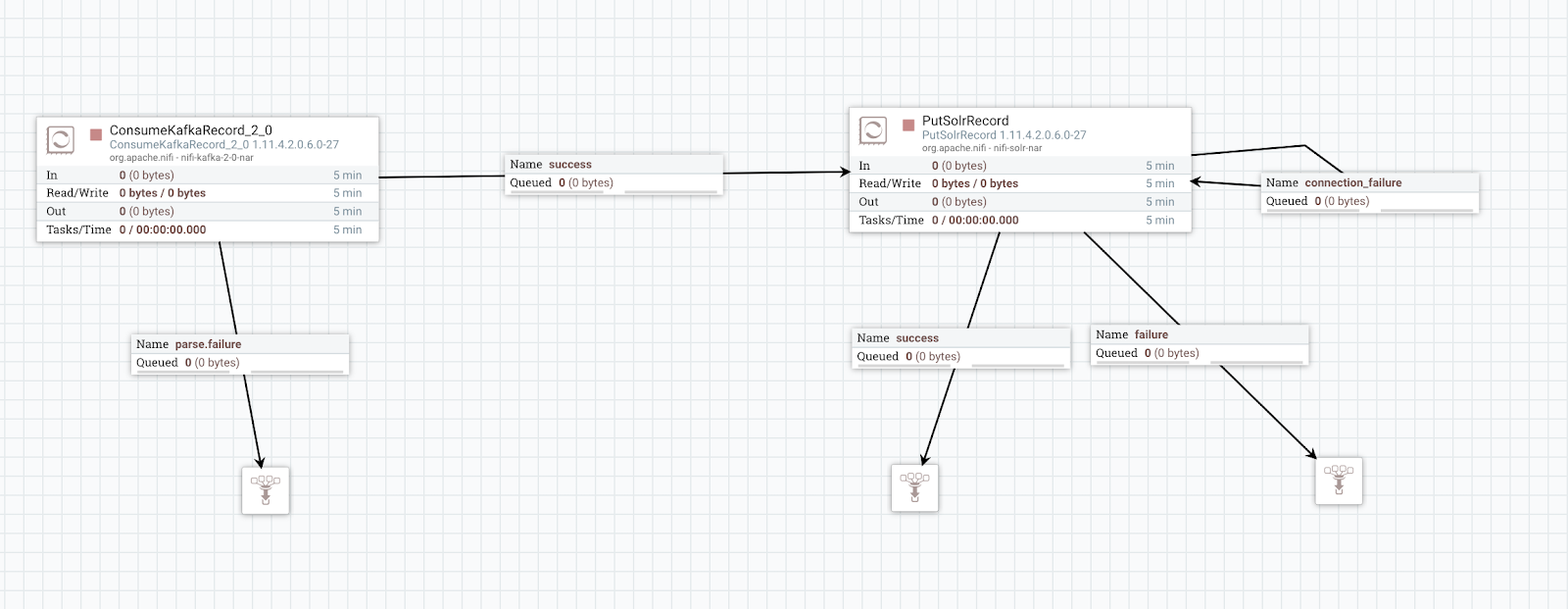

Build the data flow

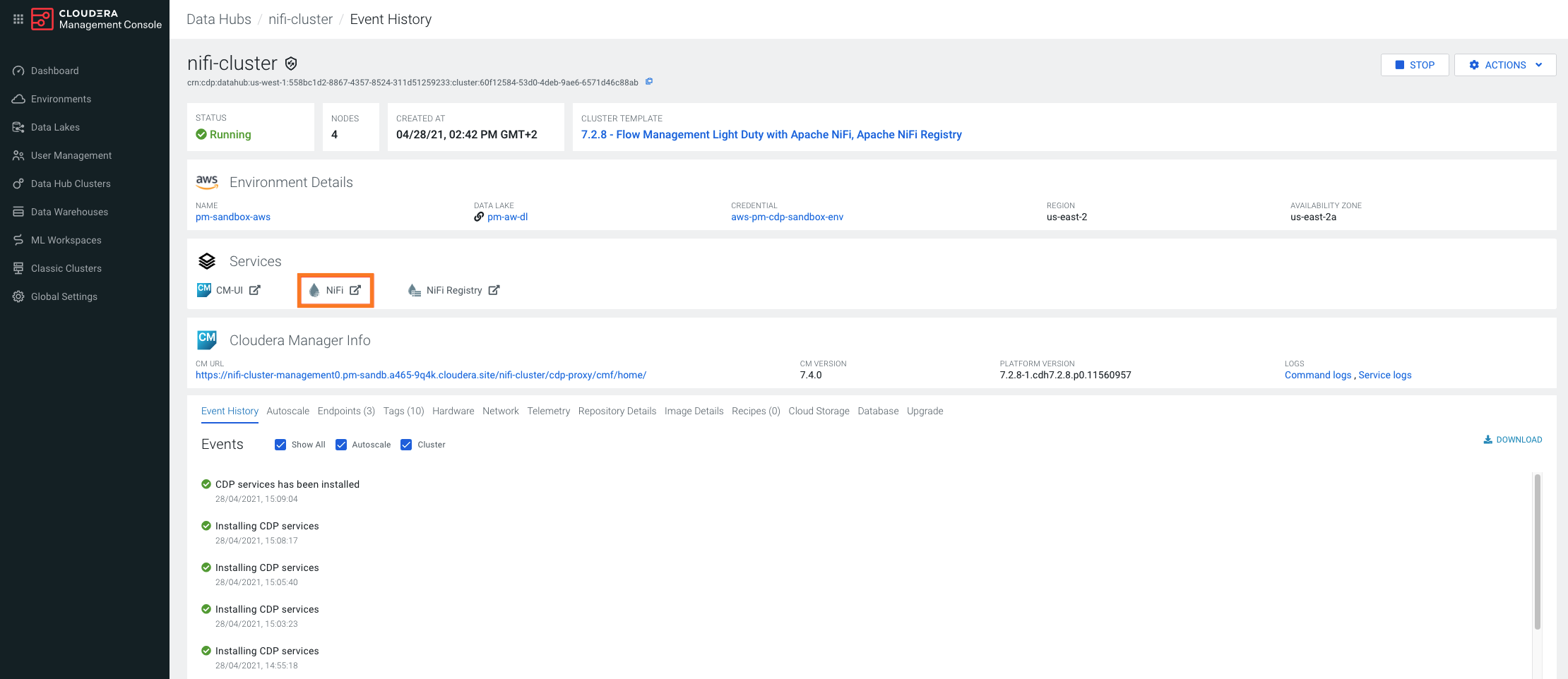

Learn how to create an ingest data flow that moves data from Apache Kafka to Apache Solr. This involves adding processors and other data flow elements to the NiFi canvas, configuring them, and connecting them to form the data flow.

Use the PutSolrRecord processor to build your Solr ingest data flow.
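
The canvas steps described above (add processors, then connect them) can also be expressed as request bodies for the NiFi REST API. The following is a minimal sketch, not the documented procedure. It only builds the JSON payloads and does not contact a NiFi instance. The Kafka consumer class name, the processor display names, the canvas positions, and the placeholder IDs are all assumptions chosen for illustration; only PutSolrRecord comes from this page.

```python
import json

# Assumed processor class names. PutSolrRecord is named on this page; the
# Kafka consumer is a guess and depends on the NiFi and Kafka versions in use.
KAFKA_CONSUMER_TYPE = "org.apache.nifi.processors.kafka.pubsub.ConsumeKafkaRecord_2_6"
PUT_SOLR_RECORD_TYPE = "org.apache.nifi.processors.solr.PutSolrRecord"


def processor_payload(processor_type, name, x, y):
    """Build a body for POST /nifi-api/process-groups/{id}/processors."""
    return {
        "revision": {"version": 0},  # new components start at revision 0
        "component": {
            "type": processor_type,
            "name": name,
            "position": {"x": x, "y": y},
        },
    }


def connection_payload(group_id, source_id, destination_id, relationships):
    """Build a body for POST /nifi-api/process-groups/{id}/connections."""
    return {
        "revision": {"version": 0},
        "component": {
            "source": {"id": source_id, "groupId": group_id, "type": "PROCESSOR"},
            "destination": {"id": destination_id, "groupId": group_id, "type": "PROCESSOR"},
            "selectedRelationships": list(relationships),
        },
    }


if __name__ == "__main__":
    # Hypothetical IDs; in practice NiFi returns these when components are created.
    group_id, kafka_id, solr_id = "group-id", "kafka-processor-id", "solr-processor-id"

    consume = processor_payload(KAFKA_CONSUMER_TYPE, "Consume from Kafka", 0.0, 0.0)
    put_solr = processor_payload(PUT_SOLR_RECORD_TYPE, "Write to Solr", 400.0, 0.0)
    link = connection_payload(group_id, kafka_id, solr_id, ["success"])

    for body in (consume, put_solr, link):
        print(json.dumps(body, indent=2))
```

Processor properties (Kafka brokers, topic, Solr location, record reader) are deliberately left out here, since they are configured in a separate step after the processors exist.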