HDFS cloud replication

DLM supports replication of HDFS data from a cluster to cloud storage and vice versa. The replication policy always runs on the cluster, which either pushes data to cloud storage or pulls data from it.
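As a minimal sketch of the push/pull distinction, the Python below classifies a policy by which side is cloud storage and builds a matching `hadoop distcp` command line. It assumes that cloud paths can be recognized by URI scheme (`s3a`, `wasb`, `abfs`, `gs`, and so on) and that cloud replication uses the same DistCp mechanism as HDFS replication. The functions `replication_direction` and `build_distcp_command` are hypothetical illustrations, not part of DLM; `-update` is a real DistCp option that skips files already matching on the target, but the options DLM actually passes are not documented here.

```python
from urllib.parse import urlparse

# Schemes assumed to denote cloud object stores (illustrative, not exhaustive).
CLOUD_SCHEMES = {"s3a", "wasb", "wasbs", "abfs", "abfss", "gs"}


def is_cloud(uri: str) -> bool:
    """Return True if the URI points at cloud storage rather than HDFS."""
    return urlparse(uri).scheme in CLOUD_SCHEMES


def replication_direction(source: str, target: str) -> str:
    """Classify a cluster/cloud policy (hypothetical helper).

    The policy always runs on the cluster: it pushes when the cluster's
    HDFS is the source and pulls when cloud storage is the source.
    """
    src_cloud, dst_cloud = is_cloud(source), is_cloud(target)
    if not src_cloud and dst_cloud:
        return "push"
    if src_cloud and not dst_cloud:
        return "pull"
    raise ValueError("expected exactly one HDFS path and one cloud path")


def build_distcp_command(source: str, target: str) -> list[str]:
    """Build a DistCp invocation; -update skips files that already match."""
    return ["hadoop", "distcp", "-update", source, target]


if __name__ == "__main__":
    src = "hdfs://nn1:8020/apps/sales"          # assumed example path
    dst = "s3a://example-bucket/backup/sales"   # assumed example bucket
    print(replication_direction(src, dst))
    print(" ".join(build_distcp_command(src, dst)))
```

Running the same job in the opposite direction (cloud path as source) yields `pull`; in both cases the job executes on the cluster's YARN.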

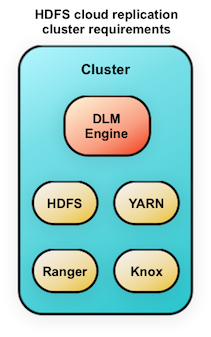

The cluster can be an on-premises or IaaS cluster with data on local HDFS. The cluster requires the HDFS, YARN, Ranger, Knox, and DLM Engine services.
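A pre-flight check for these prerequisites can be sketched as a simple set difference. The service identifiers below (for example `DLM_ENGINE`) are hypothetical labels chosen for illustration; the names your cluster manager actually reports may differ.

```python
# Services the cluster must run for HDFS cloud replication.
# Identifiers are hypothetical labels, not official service names.
REQUIRED_SERVICES = frozenset({"HDFS", "YARN", "RANGER", "KNOX", "DLM_ENGINE"})


def missing_services(installed: set[str]) -> set[str]:
    """Return required services absent from `installed` (case-insensitive)."""
    normalized = {name.upper() for name in installed}
    return set(REQUIRED_SERVICES - normalized)


if __name__ == "__main__":
    print(missing_services({"hdfs", "yarn", "ranger", "knox"}))
```

An empty result means every required service is present; anything returned must be installed before a replication policy can run.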

An HDFS replication policy works as follows:

- The DLM App submits the replication policy to the DLM Engine on the destination cluster. The DLM Engine then schedules replication jobs at the specified frequency.

- At the specified frequency, the DLM Engine submits a DistCp job that runs on the destination YARN, reads data from the source HDFS, and writes it to the destination HDFS.

- File lengths and checksums are used to identify changed files and to validate that the data was copied correctly.

- The Ranger policies for the HDFS directory are exported from the source Ranger service and replicated to the destination Ranger service.

  Note: DLM Engine also adds a deny policy on the destination Ranger service for the target directory so that the target is not writable.

- Atlas entities related to the HDFS directory are replicated. If no HDFS path entities are present in Atlas, they are first created and then exported.
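The length-and-checksum step in the process above can be sketched as follows. This is a minimal illustration, assuming file listings are already available as path-to-status maps; `FileStatus`, `files_to_copy`, and `verify_copy` are hypothetical names, and the checksum format is an assumption rather than what DLM or DistCp actually exchange.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStatus:
    length: int     # file size in bytes
    checksum: str   # file checksum; encoding is assumed for illustration


def files_to_copy(source: dict[str, FileStatus],
                  target: dict[str, FileStatus]) -> list[str]:
    """Return source paths that are missing on the target or differ from it."""
    changed = []
    for path, src in sorted(source.items()):
        dst = target.get(path)
        # Length is compared first because it is cheap; checksum catches
        # changes that leave the size unchanged.
        if dst is None or dst.length != src.length or dst.checksum != src.checksum:
            changed.append(path)
    return changed


def verify_copy(source: dict[str, FileStatus],
                target: dict[str, FileStatus]) -> bool:
    """After copying, every source file must match its target exactly."""
    return all(target.get(path) == status for path, status in source.items())


if __name__ == "__main__":
    src = {"/a": FileStatus(10, "aa"), "/b": FileStatus(20, "bb"), "/c": FileStatus(5, "cc")}
    dst = {"/a": FileStatus(10, "aa"), "/b": FileStatus(20, "XX")}
    print(files_to_copy(src, dst))  # ['/b', '/c']
    print(verify_copy(src, dst))    # False
```

Here `/a` is skipped because both length and checksum match, `/b` is recopied because only its checksum differs, and `/c` is copied because it does not yet exist on the target.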