Accessing Hive tables from Spark in CDP Data Center

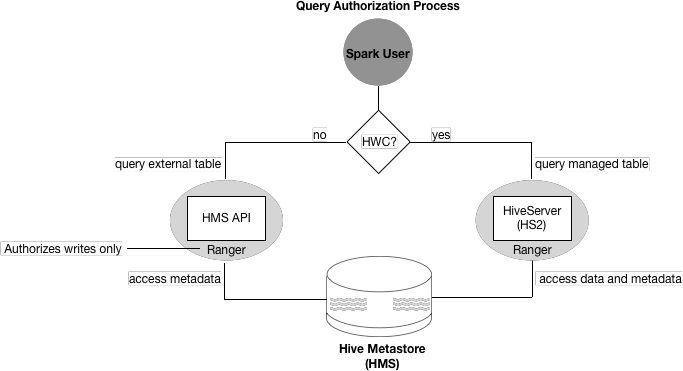

You need to understand how access to Hive tables from Spark is authorized. Depending on your use case, you might need, or simply want, to use the Hive Warehouse Connector (HWC).

Managed tables

To read or write managed tables from Spark, you need to use the HWC. For writes, you use HWC explicitly by calling the HiveWarehouseConnector API. For reads, you can use HWC implicitly by running a Spark SQL query on a managed table. A Spark job impersonates the end user when it attempts to access a Hive managed table. As an end user, you do not have permission to access the secure managed table files in the Hive warehouse.

Managed table queries go through HiveServer, which is integrated with Ranger. As Ranger Administrator, you configure permissions to access the managed tables. You can fine-tune Ranger to protect specific data. For example, you can mask data in certain columns, or set up tag-based access control.
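The explicit write and implicit read described in this section can be sketched in PySpark as follows. This is a minimal sketch, not a definitive implementation: it assumes the HWC library is already on the Spark classpath and that the cluster is configured for implicit HWC reads. The helper names `write_managed_table` and `read_managed_table` are hypothetical, and the table name in the usage note is a placeholder.

```python
# Data source name of the Hive Warehouse Connector.
HWC_FORMAT = "com.hortonworks.spark.sql.hive.llap.HiveWarehouseConnector"


def write_managed_table(df, table_name, mode="append"):
    """Explicit HWC write: send the DataFrame through the connector."""
    (df.write
       .format(HWC_FORMAT)
       .mode(mode)
       .option("table", table_name)
       .save())


def read_managed_table(spark, table_name):
    """Implicit HWC read: a plain Spark SQL query on the managed table.

    Assumption: the session is configured so that Spark SQL reads of
    managed tables are routed through HWC.
    """
    return spark.sql(f"SELECT * FROM {table_name}")
```

In a PySpark session you might call `write_managed_table(df, "sales.orders")` and then `read_managed_table(spark, "sales.orders").show()`. Whether either call succeeds depends on the Ranger permissions configured for the impersonated end user.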

External tables

To read or write external tables from Spark, you can use the HWC or not, depending on your use case. The Spark job accesses the metadata first, and then accesses the data either indirectly (no HWC) or directly (through HWC). File system permissions must be set to allow Spark direct access to the data.

External table queries go through the HMS API, which is integrated with Ranger. This release of CDP Data Center supports Ranger authorization of external table writes, but not of external table reads. Authorization of reads is supported in the CDP Public Cloud release. To enable authorization of external table writes, you need to check for, and add, a few properties, as described in the next section.
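The access rules stated so far can be collected into a small lookup, which may help when reasoning about a given job. This is only a sketch of the rules as this page describes them for this release. The names `Access`, `RULES`, and `access_rules` are hypothetical and are not part of any Spark, Hive, or Ranger API.

```python
from typing import NamedTuple


class Access(NamedTuple):
    hwc_required: bool       # must the Spark job use HWC?
    query_path: str          # component the query goes through
    ranger_authorized: bool  # is Ranger authorization supported here?


# Rules as described on this page for CDP Data Center.
# External write authorization assumes the required properties are set.
RULES = {
    ("managed", "read"): Access(True, "HiveServer", True),
    ("managed", "write"): Access(True, "HiveServer", True),
    ("external", "read"): Access(False, "HMS API", False),
    ("external", "write"): Access(False, "HMS API", True),
}


def access_rules(table_type, operation):
    """Look up the documented rule for a table type and operation."""
    return RULES[(table_type.lower(), operation.lower())]
```

For example, `access_rules("external", "read").ranger_authorized` is `False`, which reflects that Ranger authorization of external table reads is not supported in this release.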

Creating an external table stores the metadata in HMS. When you use HWC to create the external table, HMS keeps track of the location of table names and columns. Dropping the external table through HWC deletes the metadata from HMS and the table data from the file system.

If you do not use HWC, dropping an external table deletes only the metadata from HMS. If you do not have permission to access the file system, and you want to drop table data in addition to metadata, you need to use HWC.
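The two ways of dropping an external table can be sketched as a single helper. This is a hedged sketch: `drop_external_table` is a hypothetical name, the `hive` argument is assumed to be an HWC session (for example, one built with `HiveWarehouseSession.session(spark).build()`), and the `dropTable(table, ifExists, purge)` argument order is assumed from the HWC API.

```python
def drop_external_table(spark, table_name, hive=None):
    """Drop an external table, through HWC when a session is supplied.

    Returns the path taken ("hwc" or "spark-sql"), for logging.
    """
    if hive is not None:
        # HWC path: per the text, removes metadata and table data.
        # Assumed arguments: table, ifExists=True, purge=False.
        hive.dropTable(table_name, True, False)
        return "hwc"
    # Plain Spark SQL path: per the text, removes only the HMS metadata.
    spark.sql(f"DROP TABLE IF EXISTS {table_name}")
    return "spark-sql"
```

Passing the HWC session is the option to choose when you cannot access the file system yourself but still want the table data removed along with the metadata.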