Basic Cluster Setup

This section describes the setup for a simple, non-secure cluster made up of three instances of NiFi.

For each instance, certain properties in the nifi.properties file will need to be updated. In particular, the Web and Clustering properties should be evaluated for your situation and adjusted accordingly. All the properties are described in the System Properties section of this guide; however, in this section, we will focus on the minimum properties that must be set for a simple cluster.

For all three instances, the Cluster Common Properties can be left with the default settings. Note, however, that if you change these settings, they must be set to the same values on every instance in the cluster.

For each Node, the minimum properties to configure are as follows:

- Under the Web Properties section, set either the HTTP or HTTPS port that you want the Node to run on. Also, consider whether you need to set the HTTP or HTTPS host property. All nodes in the cluster should use the same protocol setting.

- Under the State Management section, set the nifi.state.management.provider.cluster property to the identifier of the Cluster State Provider. Ensure that the Cluster State Provider has been configured in the state-management.xml file. See Configuring State Providers for more information.

- Under Cluster Node Properties, set the following:

  - nifi.cluster.is.node - Set this to true.

  - nifi.cluster.node.address - Set this to the fully qualified hostname of the node. If left blank, it defaults to localhost.

  - nifi.cluster.node.protocol.port - Set this to an open port that is higher than 1024 (anything lower requires root).

  - nifi.cluster.node.protocol.threads - The number of threads that should be used to communicate with other nodes in the cluster. This property defaults to 10. A thread pool is used for replicating requests to all nodes, and the thread pool will never have fewer than this number of threads. It will grow as needed up to the maximum value set by the nifi.cluster.node.protocol.max.threads property.

  - nifi.cluster.node.protocol.max.threads - The maximum number of threads that should be used to communicate with other nodes in the cluster. This property defaults to 50. A thread pool is used for replicating requests to all nodes, and the thread pool will have a "core" size that is configured by the nifi.cluster.node.protocol.threads property. However, if necessary, the thread pool will increase the number of active threads to the limit set by this property.

  - nifi.zookeeper.connect.string - The Connect String that is needed to connect to Apache ZooKeeper. This is a comma-separated list of hostname:port pairs. For example, localhost:2181,localhost:2182,localhost:2183. This should contain a list of all ZooKeeper instances in the ZooKeeper quorum.

  - nifi.zookeeper.root.node - The root ZNode that should be used in ZooKeeper. ZooKeeper provides a directory-like structure for storing data. Each 'directory' in this structure is referred to as a ZNode. This denotes the root ZNode, or 'directory', that should be used for storing data. The default value is /root. This is important to set correctly, because the cluster that a NiFi instance attempts to join is determined by the ZooKeeper instance it connects to and the ZooKeeper Root Node that is specified.

  - nifi.cluster.flow.election.max.wait.time - Specifies the amount of time to wait before electing a Flow as the "correct" Flow. If the number of Nodes that have voted is equal to the number specified by the nifi.cluster.flow.election.max.candidates property, the cluster will not wait this long. The default value is 5 mins. Note that the time starts as soon as the first vote is cast.

  - nifi.cluster.flow.election.max.candidates - Specifies the number of Nodes required in the cluster to cause early election of Flows. This allows the Nodes in the cluster to avoid a long wait before starting processing once at least this number of nodes has joined the cluster.
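
As an illustration, the per-node settings above might look like the following in nifi.properties for the first of the three nodes. This is a minimal sketch, not a complete file: the hostnames (nifi-node1.example.com, zk1.example.com, and so on), the ports 8080 and 11443, and the provider identifier zk-provider are assumptions chosen for the example and must be replaced with values that match your environment and your state-management.xml file.

```properties
# Hypothetical values for node 1 of 3. Hostnames, ports, and the
# provider identifier are placeholders, not required values.

# Web Properties (non-secure cluster, so only the HTTP settings are used)
nifi.web.http.host=nifi-node1.example.com
nifi.web.http.port=8080

# State Management: must match the id of the cluster provider
# defined in state-management.xml (zk-provider is assumed here)
nifi.state.management.provider.cluster=zk-provider

# Cluster Node Properties
nifi.cluster.is.node=true
nifi.cluster.node.address=nifi-node1.example.com
# Any open port above 1024
nifi.cluster.node.protocol.port=11443
nifi.cluster.node.protocol.threads=10
nifi.cluster.node.protocol.max.threads=50

# All ZooKeeper instances in the quorum, as hostname:port pairs
nifi.zookeeper.connect.string=zk1.example.com:2181,zk2.example.com:2181,zk3.example.com:2181
# Must be identical on all nodes that should join the same cluster
nifi.zookeeper.root.node=/root

# With three nodes, a value of 3 lets the flow election finish
# as soon as all three nodes have voted
nifi.cluster.flow.election.max.wait.time=5 mins
nifi.cluster.flow.election.max.candidates=3
```

Assuming each node runs on its own host, only the host-specific values (nifi.web.http.host and nifi.cluster.node.address) would normally differ on the other two nodes. The ZooKeeper connect string and root node must be the same on every node so that all three join the same cluster.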

-

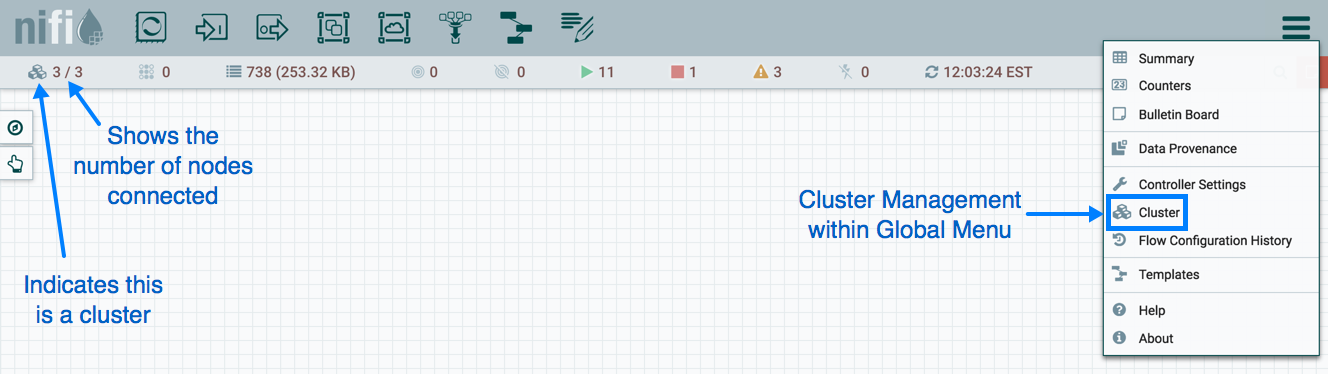

Now, it is possible to start up the cluster. It does not matter which order the instances start up. Navigate to the URL for one of the nodes, and the User Interface should look similar to the following: