Key features of SSB

SQL Stream Builder (SSB) within Cloudera supports out-of-the-box integration with Flink and Kafka, with Kafka topics serving as virtual table sources and sinks. For integration with Business Intelligence tools, you can create Materialized Views.

- Tables

- Tables are a core abstraction in SSB. Just like in typical databases, they provide the interface for running queries: data can be queried from tables, and results can be sent to tables. Tables do not have native storage in SSB. Instead, they reference data connectors for Kafka, Hive, Kudu and so on. If needed, tables have a schema definition as well. In the case of Kafka, the table definition includes a rich interface for defining the schema and run-time characteristics such as timestamps and various consumer and producer settings.

- Catalog Support

- In addition to creating tables manually, you can access tables in external systems using the supported Flink catalogs. SSB supports Hive, Kudu and Schema Registry as catalogs.

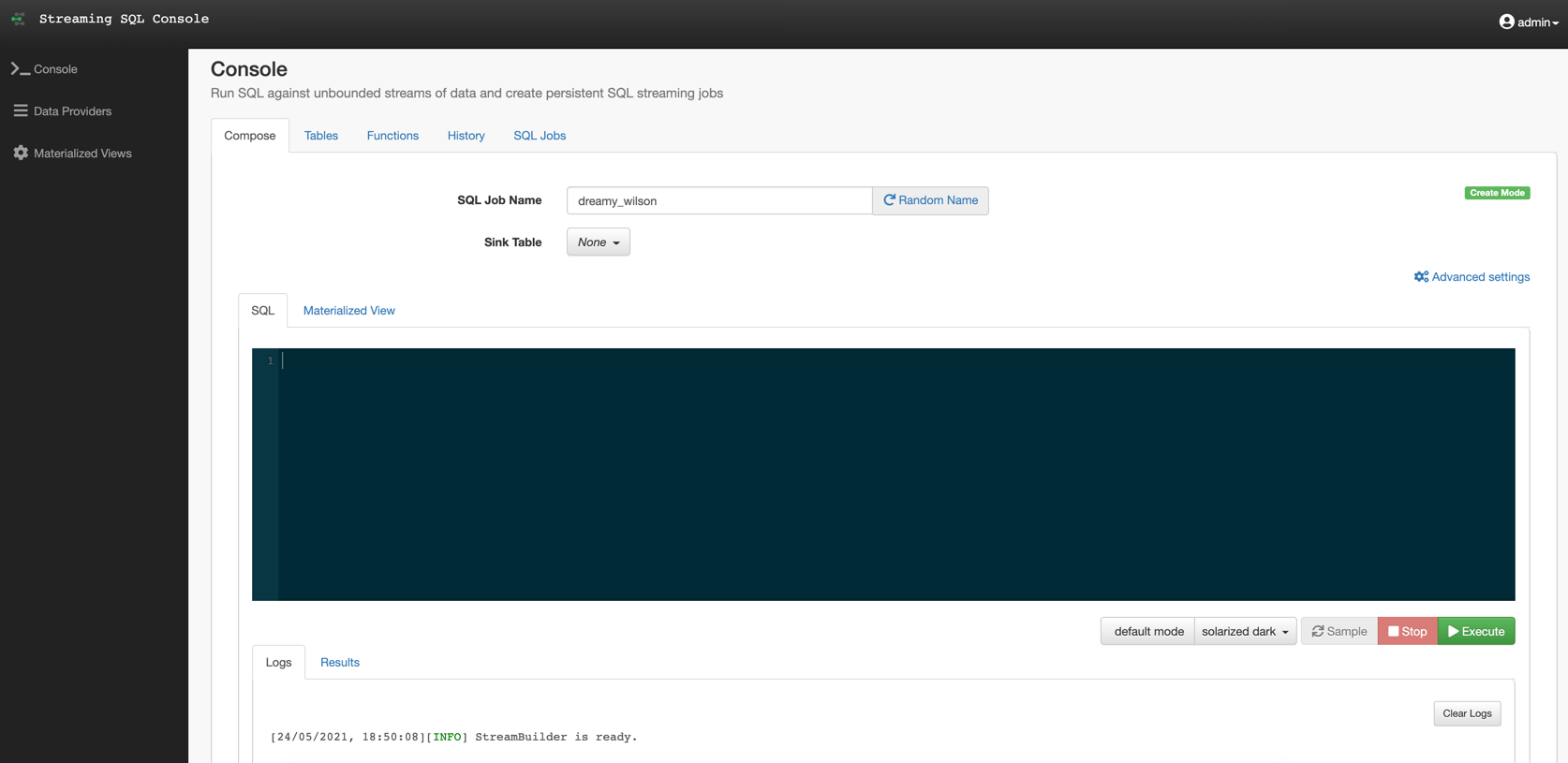

- Streaming SQL Console

- SSB comes with an interactive user interface that allows you to easily create and manage your SQL jobs in one place. It allows you to create and iterate on SQL statements with robust tooling and capabilities. Query parsing is logged to the console, and results are sampled back to the interface to help with iterating on the SQL statement as required.

- Materialized Views

- SSB can materialize the results of a Streaming SQL query to a persistent view of the data that can be read through REST and over the PostgreSQL wire protocol. Applications can use this mechanism to query streams of data with high performance without deploying additional database systems. Materialized Views are built into the SQL Stream Builder service, and require no configuration or maintenance. They act like a special kind of sink, and can even be used in place of a sink. They require no indexing, storage allocation, or specific management.

- Detect Schema

- SSB is capable of reading JSON messages in a topic, identifying their data structure, and sampling the schema to the UI. This is a useful function when you do not use Schema Registry.
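
The idea behind schema detection can be illustrated with a minimal sketch. This is not SSB's actual algorithm, only one simple way to derive field names and types from a few sampled JSON messages. The function names `inferSchema` and `typeName` and the sample messages are hypothetical.

```javascript
// Hypothetical helper: classify a parsed JSON value by a coarse type name.
function typeName(value) {
  if (value === null) return "null";
  if (Array.isArray(value)) return "array";
  return typeof value; // "string", "number", "boolean" or "object"
}

// Hypothetical helper: infer a flat schema (field name -> type name)
// from an array of raw JSON strings sampled from a topic.
function inferSchema(messages) {
  var schema = {};
  for (var i = 0; i < messages.length; i++) {
    var doc;
    try {
      doc = JSON.parse(messages[i]);
    } catch (e) {
      continue; // skip samples that are not valid JSON
    }
    if (doc === null || typeof doc !== "object" || Array.isArray(doc)) {
      continue; // only top-level JSON objects contribute fields
    }
    var fields = Object.keys(doc);
    for (var j = 0; j < fields.length; j++) {
      var field = fields[j];
      var type = typeName(doc[field]);
      if (!Object.prototype.hasOwnProperty.call(schema, field)) {
        schema[field] = type; // first time this field is seen
      } else if (schema[field] !== type) {
        schema[field] = "mixed"; // samples disagree on the type
      }
    }
  }
  return schema;
}

// Made-up sample messages, including one that is not JSON.
var sample = [
  '{"sensor_id": 7, "temp": 21.5, "ok": true}',
  '{"sensor_id": 8, "temp": 19.25, "ok": false}',
  "not json"
];
var detected = inferSchema(sample);
console.log(JSON.stringify(detected));
```

A real detector would also need to handle nested objects and decide how to widen conflicting types. The sketch only flags such conflicts as `mixed`.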

- Input Transform

- If you do not know the structure of the incoming data, or if raw data is being collected (for example, from sensors), you can use an Input Transform to clean up and organize the incoming data before querying it. Input Transforms also allow access to Kafka header metadata directly in the query itself. Input Transforms are written in JavaScript and compiled to Java bytecode that is deployed with the Flink jar.
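
As a rough illustration of the kind of clean-up an Input Transform might perform, the following sketch turns a raw delimited sensor payload into a JSON string and copies one header value into the result. The shape of the `record` object (`value` and `headers` properties), the payload format, the `source` header, and the function name `transformRecord` are all assumptions made for this example, not the documented SSB interface.

```javascript
// Hypothetical transform: parse "key=value;key=value" payloads into JSON.
// Assumes record.value is a string and record.headers is a plain object.
function transformRecord(record) {
  var out = {};
  var pairs = String(record.value).split(";");
  for (var i = 0; i < pairs.length; i++) {
    var idx = pairs[i].indexOf("=");
    if (idx === -1) continue; // drop fragments without a key=value form
    var key = pairs[i].slice(0, idx).trim();
    var val = pairs[i].slice(idx + 1).trim();
    if (key === "") continue;
    var num = Number(val);
    // Keep numeric-looking values as numbers, everything else as strings.
    out[key] = val !== "" && !isNaN(num) ? num : val;
  }
  // Expose one (hypothetical) Kafka header as a regular field.
  var headers = record.headers || {};
  if (Object.prototype.hasOwnProperty.call(headers, "source")) {
    out.source = headers.source;
  }
  return JSON.stringify(out);
}

// Made-up record for demonstration.
var transformed = transformRecord({
  value: "id=7; temp=21.5; unit=C; garbage",
  headers: { source: "plant-a" }
});
console.log(transformed);
```

The sketch is written in ES5-style JavaScript on the assumption that an embedded script engine may not support newer syntax.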

- User Defined Functions

- You can create customized and complex SQL queries by using User Defined Functions to enrich your data or apply computations and business logic to it. User Defined Functions are written in JavaScript and compiled to Java bytecode that is deployed with the Flink jar.
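
A User Defined Function can be as small as a single JavaScript function. The sketch below shows a hypothetical unit-conversion function. The name `CELSIUS_TO_FAHRENHEIT` is invented for this example, and how SSB registers the function and binds SQL arguments to it is not shown here.

```javascript
// Hypothetical UDF body: convert Celsius to Fahrenheit, rounded to one decimal.
// Returns null for missing or non-numeric input so that bad rows do not fail the query.
function CELSIUS_TO_FAHRENHEIT(celsius) {
  if (celsius === null || celsius === undefined) return null;
  var c = Number(celsius);
  if (isNaN(c)) return null;
  return Math.round((c * 9 / 5 + 32) * 10) / 10;
}

console.log(CELSIUS_TO_FAHRENHEIT(100));
console.log(CELSIUS_TO_FAHRENHEIT("abc"));
```

Once registered, such a function would presumably be called from a query like any built-in SQL function, with a column passed as the argument.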