Ingest data into Apache Druid

When Apache Druid (incubating) ingests data, Druid indexes the data. You can use one of several methods to ingest and index the data.

Druid has multiple ways to ingest data:

- Through Hadoop. Data for ingestion is on HDFS.

- From a file.

- From a Kafka stream. Data must be in a topic.

For more information, see about ingestion methods, see documentation on the druid.io web site (see link below).

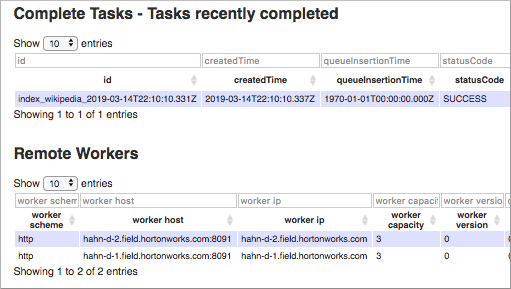

In this task, you ingest data from a file. You create an index task specification in JSON and use HTTP to ingest the data.