Upgrading Cloudera Data Services on premises using Cloudera Embedded Container Service

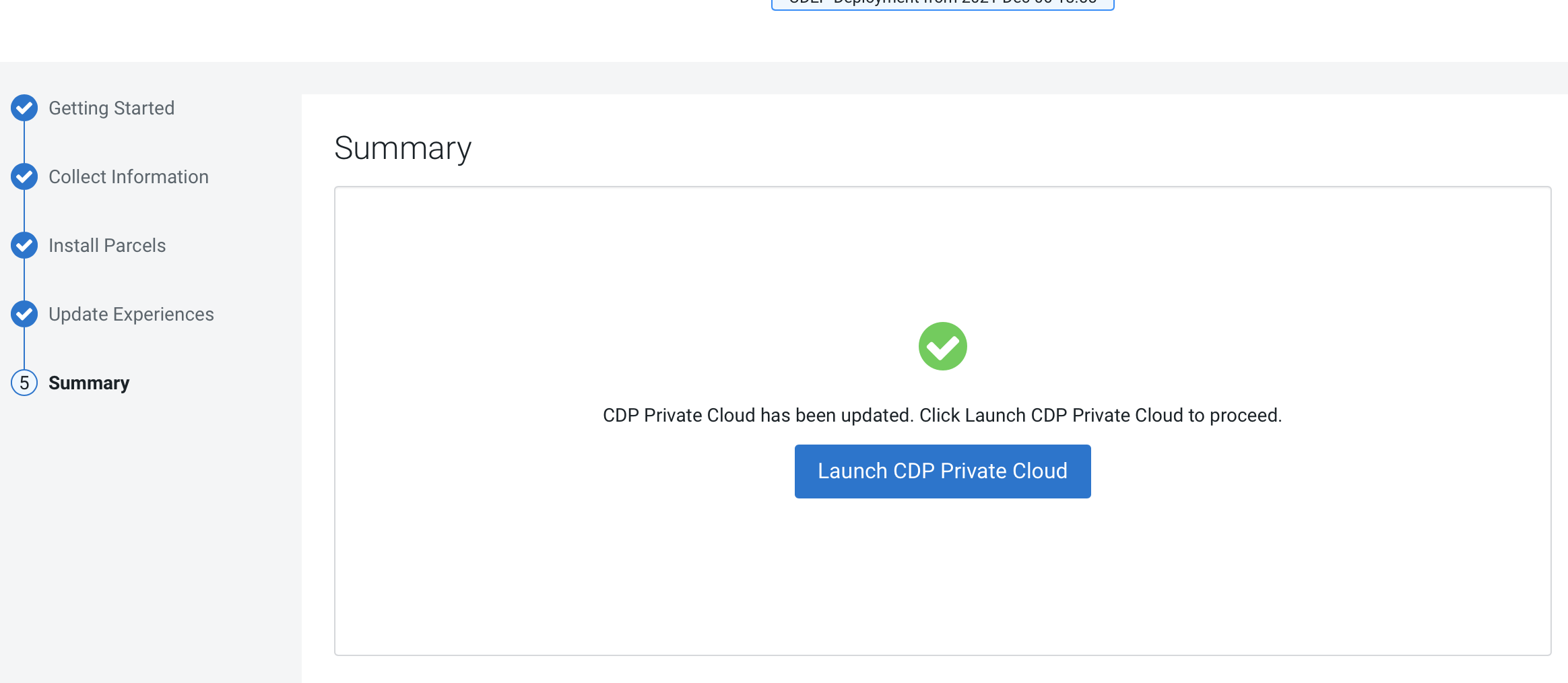

You can upgrade Cloudera Data Services on premises from version 1.5.3 to version 1.5.4 without uninstalling the previous installation.

- Review the Software Support Matrix for ECS.

- The Docker registry that is configured with the cluster must remain the same during the upgrade process. If Cloudera Data Services on premises 1.5.2 or 1.5.3 was installed using the public Docker registry, Cloudera Data Services on premises 1.5.4 should also use the public Docker registry, and not be configured to use the embedded Docker registry. To use a different configuration for the Docker registry, you must perform a new installation of Cloudera Data Services on premises.

- If the upgrade stalls, do the following:

- Check the status of all pods by running the following commands on the server node:

`export PATH=$PATH:/opt/cloudera/parcels/ECS/installer/install/bin/linux/:/opt/cloudera/parcels/ECS/docker`

`export KUBECONFIG=~/kubeconfig`

`kubectl get pods --all-namespaces`

- If any pods are stuck in the "Terminating" state, force-terminate them using the following command:

`kubectl delete pods <NAME OF THE POD> -n <NAMESPACE> --grace-period=0 --force`

If the upgrade still does not resume, continue with the remaining steps.

- If any pods are in the "Pending" state, you can try to reschedule them by restarting the yunikorn-scheduler. Run the following commands to restart yunikorn-scheduler:

`kubectl get pods -n yunikorn`

`kubectl get deploy -n yunikorn`

`kubectl scale --replicas=0 -n yunikorn deployment/yunikorn-scheduler`

`kubectl get deploy -n yunikorn`

`kubectl scale --replicas=1 -n yunikorn deployment/yunikorn-scheduler`

`kubectl get deploy -n yunikorn`

- In the Admin Console, go to the ECS service and click .

The Longhorn dashboard opens.

- Check the "In Progress" section of the dashboard for any volumes stuck in the attaching or detaching state. If a volume is in that state, reboot its host.

- In the Longhorn UI, go to the Volume tab and check whether any volumes are in the "Detached" state. If any are, restart the associated pods or manually reattach the volumes to the host.

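The pod checks and the yunikorn-scheduler restart above can also be scripted. The following is a minimal sketch, not a Cloudera-provided tool: the function names are hypothetical, it assumes kubectl and `~/kubeconfig` are available as set up in the first step, and it waits with `kubectl rollout status` (using an assumed 5-minute timeout) instead of checking `kubectl get deploy` by hand. The Longhorn checks remain manual.

```shell
#!/usr/bin/env bash
# Sketch only: helpers for the "upgrade stalls" checks. Run on the ECS Server node.
# PATH and KUBECONFIG come from the first step; function names are hypothetical.
export PATH="$PATH:/opt/cloudera/parcels/ECS/installer/install/bin/linux/:/opt/cloudera/parcels/ECS/docker"
export KUBECONFIG=~/kubeconfig

# Reads `kubectl get pods --all-namespaces` output on stdin and prints
# "<namespace> <pod>" for every pod whose STATUS column is Terminating.
# A header line (first field NAMESPACE) is skipped if present.
terminating_pods() {
  awk '$1 != "NAMESPACE" && $4 == "Terminating" { print $1, $2 }'
}

# Force-deletes every Terminating pod with one
# `kubectl delete pods <pod> -n <namespace> --grace-period=0 --force` call each.
force_delete_terminating_pods() {
  kubectl get pods --all-namespaces --no-headers | terminating_pods |
    while read -r ns pod; do
      echo "Force deleting ${ns}/${pod}"
      kubectl delete pods "$pod" -n "$ns" --grace-period=0 --force
    done
}

# Restarts yunikorn-scheduler so that Pending pods can be rescheduled.
# Each `rollout status` call waits until the deployment controller reports
# the preceding scale step as complete (the 5m timeout is an assumption).
restart_yunikorn_scheduler() {
  kubectl scale --replicas=0 -n yunikorn deployment/yunikorn-scheduler
  kubectl rollout status deployment/yunikorn-scheduler -n yunikorn --timeout=5m
  kubectl scale --replicas=1 -n yunikorn deployment/yunikorn-scheduler
  kubectl rollout status deployment/yunikorn-scheduler -n yunikorn --timeout=5m
  kubectl get pods -n yunikorn
}

# Example: force_delete_terminating_pods; restart_yunikorn_scheduler
```

Review the output of `kubectl get pods --all-namespaces` before calling `force_delete_terminating_pods`, because `--grace-period=0 --force` skips graceful shutdown for every pod it matches.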

- You may see the following error message during the Upgrade Cluster > Reapplying all settings > kubectl-patch step:

`kubectl rollout status deployment/rke2-ingress-nginx-controller -n kube-system --timeout=5m`

`error: timed out waiting for the condition`

If you see this error, do the following:

- Check whether all the Kubernetes nodes are ready for scheduling. Run the following command from the ECS Server node:

`kubectl get nodes`

You will see output similar to the following:

| NAME | STATUS | ROLES | AGE | VERSION |
| --- | --- | --- | --- | --- |
| `<node1>` | Ready,SchedulingDisabled | control-plane,etcd,master | 103m | v1.21.11+rke2r1 |
| `<node2>` | Ready | `<none>` | 101m | v1.21.11+rke2r1 |
| `<node3>` | Ready | `<none>` | 101m | v1.21.11+rke2r1 |
| `<node4>` | Ready | `<none>` | 101m | v1.21.11+rke2r1 |

- For each node that shows a status of SchedulingDisabled, run the following command from the ECS Server node, adding the node name to the end of the command:

`kubectl uncordon <node1>`

You will see output similar to the following:

`node/<node1> uncordoned`

- Restart the rke2-ingress-nginx-controller pod by deleting it with the following command on the ECS Server node. The deployment then creates a replacement pod:

`kubectl delete pod rke2-ingress-nginx-controller-<pod number> -n kube-system`

- Resume the upgrade.

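The node and ingress recovery steps above can be combined into one helper. This is a sketch under stated assumptions: the function names are hypothetical, it selects ingress pods by the `rke2-ingress-nginx-controller-` name prefix used in the delete command, and it finishes by re-running the same `kubectl rollout status` check that timed out, so a zero exit status suggests (but does not guarantee) that the upgrade can be resumed.

```shell
#!/usr/bin/env bash
# Sketch only: recovery for the kubectl-patch rollout timeout.
# Run on the ECS Server node; function names are hypothetical.
export PATH="$PATH:/opt/cloudera/parcels/ECS/installer/install/bin/linux/:/opt/cloudera/parcels/ECS/docker"
export KUBECONFIG=~/kubeconfig

# Reads `kubectl get nodes` output on stdin and prints the NAME of every node
# whose STATUS column contains SchedulingDisabled (header line skipped).
cordoned_nodes() {
  awk '$1 != "NAME" && $2 ~ /SchedulingDisabled/ { print $1 }'
}

# Reads pod names (one per line) on stdin and keeps the ingress controller pods.
ingress_controller_pods() {
  awk '/^rke2-ingress-nginx-controller-/ { print $1 }'
}

recover_ingress_rollout() {
  # 1. Uncordon each node reported as SchedulingDisabled.
  kubectl get nodes --no-headers | cordoned_nodes |
    while read -r node; do
      kubectl uncordon "$node"
    done

  # 2. Delete the ingress controller pod(s); the deployment recreates them.
  kubectl get pods -n kube-system --no-headers -o custom-columns=NAME:.metadata.name |
    ingress_controller_pods |
    while read -r pod; do
      kubectl delete pod "$pod" -n kube-system
    done

  # 3. Re-run the check that timed out before resuming the upgrade.
  kubectl rollout status deployment/rke2-ingress-nginx-controller -n kube-system --timeout=5m
}

# Example: recover_ingress_rollout && echo "Now resume the upgrade in Cloudera Manager."
```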

- If a new release-dwx-server pod cannot start because an existing release-dwx-server pod is failing to start:

- Delete the existing pod manually by running the following command:

`kubectl delete -n cdp pod cdp-release-dwx-server-<pod_id>`

- Resume the upgrade wizard if it has timed out.

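Finding the right pod to delete can also be sketched in shell. This assumes the pod lives in the `cdp` namespace with the `cdp-release-dwx-server-` prefix, as in the command above; the helper names are hypothetical, and sorting by creation time is a heuristic rather than a documented rule, so confirm which pod is the existing failing one before deleting it.

```shell
#!/usr/bin/env bash
# Sketch only: find and delete a stuck cdp-release-dwx-server pod.
# Function names are hypothetical; namespace and name prefix come from the docs.
export PATH="$PATH:/opt/cloudera/parcels/ECS/installer/install/bin/linux/:/opt/cloudera/parcels/ECS/docker"
export KUBECONFIG=~/kubeconfig

# Reads `kubectl get pods -n cdp` output on stdin and prints "<pod> <status>"
# for each cdp-release-dwx-server pod whose STATUS is not Running.
dwx_server_candidates() {
  awk '$1 ~ /^cdp-release-dwx-server-/ && $3 != "Running" { print $1, $3 }'
}

# Lists the candidates oldest first, so the existing (failing) pod usually
# appears before the new pod that is waiting on it.
list_dwx_server_pods() {
  kubectl get pods -n cdp --no-headers --sort-by=.metadata.creationTimestamp |
    dwx_server_candidates
}

# Deletes the pod you picked from the list.
delete_dwx_server_pod() {
  if [ -z "${1:-}" ]; then
    echo "usage: delete_dwx_server_pod <pod-name>" >&2
    return 1
  fi
  kubectl delete -n cdp pod "$1"
}

# Example:
#   list_dwx_server_pods
#   delete_dwx_server_pod cdp-release-dwx-server-<pod_id>
```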

- After upgrading, the Cloudera Manager admin role may be missing the Host Administrators privilege. The cluster administrator should run the following command to add this privilege to the role manually:

`ipa role-add-privilege <cmadminrole> --privileges="Host Administrators"`

- If you specified a custom certificate, select the Cloudera Embedded Container Service cluster in Cloudera Manager, then select . This command copies the cert.pem and key.pem files from the Cloudera Manager server host to the ECS Cloudera Management Console host.

- After upgrading, you can enable the unified time zone feature to synchronize the ECS cluster time zone with the Cloudera Manager Base time zone. When you upgrade from earlier versions of Cloudera Private Cloud Data Services to 1.5.2 or higher, unified time zone is disabled by default so that timestamp-sensitive logic is not affected. For more information, see ECS unified time zone.
