Known Issues in Hue

Learn about the known issues in Hue, the impact or changes to the functionality, and the workaround.

- Unable to delete, move, or rename directories within the S3 bucket from Hue

- You may not be able to rename, move, or delete directories within your S3 bucket from the Hue web interface. This is because of an underlying issue, which will be fixed in a future release.

- Downloading Impala query results containing special characters in CSV format fails with ASCII codec error

- In CDP, Hue is compatible with Python 2.7.x, but the Tablib library for Hue has been upgraded from 0.10.x to 0.14.x, which is generally used with the Python 3 release. If you try to download Impala query results having special characters in the result set in a CSV format, then the download may fail with the ASCII unicode decode error.

- Impala SELECT table query fails with UTF-8 codec error

- Hue cannot handle columns containing non-UTF8 data. As a result, you may see the following error while querying tables from the Impala editor in Hue:

'utf8' codec can't decode byte 0x91 in position 6: invalid start byte.

- Hue Importer is not supported in the Data Engineering template

- When you create a Data Hub cluster using the Data Engineering template, the Importer application is not supported in Hue.

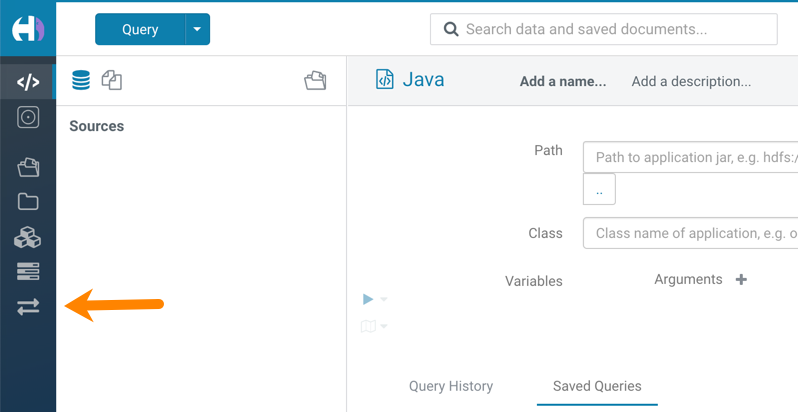

Figure 1. Hue web UI showing Importer icon on the left assist panel
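The 'utf8' codec error quoted earlier for Impala SELECT queries is the message Python raises when a byte sequence is not valid UTF-8. The following is a minimal sketch of how such a value fails to decode. The sample bytes and the helper name try_decode_utf8 are hypothetical, and the Windows-1252 fallback is only an assumption about where a 0x91 byte might come from, not a description of how Hue handles the data.

```python
# Hypothetical column value: six ASCII bytes followed by 0x91,
# which is not a valid UTF-8 start byte.
raw_value = b"Hello " + b"\x91" + b"quoted"


def try_decode_utf8(data):
    """Return (text, None) on success or (None, error) on failure."""
    try:
        return data.decode("utf-8"), None
    except UnicodeDecodeError as err:
        return None, err


text, error = try_decode_utf8(raw_value)
if error is not None:
    # error.start is the offset of the offending byte; error.reason explains why.
    print("decode failed at byte offset", error.start, "-", error.reason)

# Assumption: the data was written as Windows-1252, where 0x91 is a
# left single quotation mark. Other source encodings are equally possible.
recovered = raw_value.decode("cp1252")
print(recovered)
```

Checking a sample of the raw column data this way can help confirm whether a table contains non-UTF8 values before you query it from the Impala editor.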

- Hue Load Balancer role fails to start after upgrade to Cloudera Runtime 7 or you get the "BalancerMember worker hostname too long" error

- You may see the following error message while starting the Hue Load Balancer:

BalancerMember worker hostname (xxx-xxxxxxxx-xxxxxxxxxxx-xxxxxxx.xxxxxx-xxxxxx-xxxxxx.example.site) too long.

Or, the Hue load balancer role fails to start after the upgrade, which prevents the Hue service from starting. If this failure occurs during cluster creation, cluster creation fails with the following error:

com.sequenceiq.cloudbreak.cm.ClouderaManagerOperationFailedException: Cluster template install failed: [Command [Start], with id [1234567890] failed: Failed to start role., Command [Start], with id [1234567890] failed: Failed to start role., Command [Start], with id [1234567890] failed: Failed to start role.] Unable to generate configuration for HUE_SERVER Role failed to start due to error com.cloudera.cmf.service.config.ConfigGenException: Unable to generate config file hue.ini

Cloudera Manager displays this error when you create a Data Hub cluster using the Data Engineering template and the Hue Load Balancer worker node name has exceeded 64 characters. In a CDP Public Cloud deployment, the system automatically generates the Load Balancer worker node name through AWS or Azure.

For example, if you specify cdp-123456-scalecluster as the cluster name, CDP creates cdp-123456-scalecluster-master2.repro-aw.a123-4a5b.example.site as the worker node name.
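Because the generated worker node name grows with the cluster name, it can help to check the length of a node name against the 64-character limit mentioned above. The following is a minimal sketch that assumes a plain character count is the relevant measure. The function and constant names are hypothetical and are not part of Hue or Cloudera Manager.

```python
# Limit taken from the description above; the check itself is an assumption.
MAX_WORKER_HOSTNAME_LENGTH = 64


def hostname_too_long(hostname, limit=MAX_WORKER_HOSTNAME_LENGTH):
    """Return True if the hostname has more characters than the limit."""
    return len(hostname) > limit


def describe(hostname):
    """Build a one-line summary of the hostname length check."""
    status = "exceeds" if hostname_too_long(hostname) else "is within"
    return "{} ({} characters) {} the {}-character limit".format(
        hostname, len(hostname), status, MAX_WORKER_HOSTNAME_LENGTH
    )


# Sample worker node name from the text above.
sample = "cdp-123456-scalecluster-master2.repro-aw.a123-4a5b.example.site"
print(describe(sample))
```

Running the check against the node names that CDP generates for a planned cluster name shows how much room is left before the limit is reached.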

Unsupported features

- Importing and exporting Oozie workflows across clusters and between different CDH versions is not supported

- You can export Oozie workflows, schedules, and bundles from Hue and import them only within the same cluster if the cluster is unchanged. You can migrate bundle and coordinator jobs with their workflows only if their arguments have not changed between the old and the new cluster. These arguments include, for example, hostnames, NameNode, Resource Manager names, YARN queue names, and all the other parameters defined in the workflow.xml and job.properties files.

Using the import-export feature to migrate data between clusters is not recommended. To migrate data between different versions of CDH, for example, from CDH 5 to CDP 7, you must take a dump of the Hue database on the old cluster, restore it on the new cluster, and set up the database in the new environment. Also, the authentication method on the old and the new cluster should be the same because the Oozie workflows are tied to a user ID, and the exact user ID needs to be present in the new environment so that when a user logs into Hue, they can access their respective workflows.

- INSIGHT-3707: Query history displays "Result Expired" message

- You see the "Result Expired" message under the Query History column on the Queries tab for queries which were run back to back. This is a known behaviour.

Technical Service Bulletins

- TSB 2024-723: Hue RAZ is using logger role to Read and Upload/Delete (write) files

- When using Cloudera Data Hub for Public Cloud (Data Hub) on Amazon Web Services (AWS), users can use the Hue File Browser feature to access the filesystem, and if permitted, read and write directly to the related S3 buckets. As AWS does not provide fine-grained access control, Cloudera Data Platform administrators can use the Ranger Authorization Service (RAZ) capability to take the S3 filesystem, and overlay it with user and group specific permissions, making it easier to allow certain users to have limited permissions, without having to grant those users permissions to the entire S3 bucket.

This bulletin describes an issue when using RAZ with Data Hub, and attempting to use fine-grained access control to allow certain users write permissions.

Through RAZ, an administrator may, for a particular user, specify permissions more limited than what AWS provides for an S3 bucket, allowing the user to have read/write (or other similar fine-grained access) permissions on only a subset of the files and directories within that bucket. However, under specific conditions, it is possible for such a user to read and write to the entire S3 bucket through Hue, because Hue uses the logger role (which has full read/write access to the S3 bucket) when using Data Hub with a RAZ-enabled cluster. This problem can also affect the Hue service itself by preventing proper access to home directories, which causes the service role to not start.

The root cause of this issue is that, when accessing Amazon cloud resources, Hue uses the AWS Boto SDK library. This AWS Boto library has a bug that restricts permissions in certain AWS regions in such a way that it provides access to users who should not have it, regardless of RAZ settings. This issue only affects users in specific AWS regions, listed below, and it does not affect all AWS customers.

- Knowledge article

- For the latest update on this issue see the corresponding Knowledge Article: TSB 2024-723: Hue Raz is using logger role to Read and Upload/Delete (write) files.