Spark metadata collection

Atlas can collect metadata from Spark, including queries on Hive tables. The Spark Atlas Connector (SAC) is available for Spark 2.4 and Atlas 2.1.

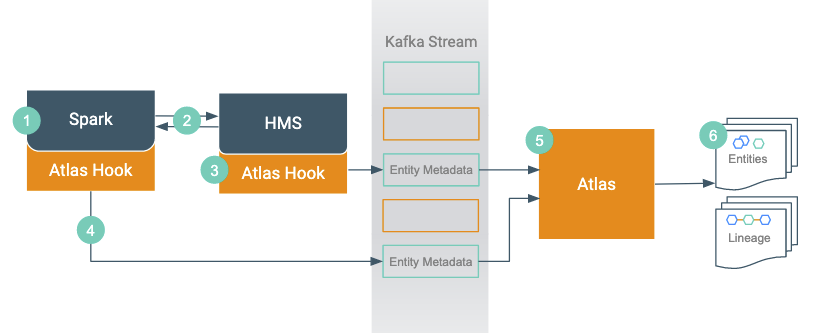

An Atlas hook runs in each Spark instance and sends metadata to Atlas for Spark operations. Each operation is represented by a process entity in Atlas. Hive databases, tables, views, and columns that are referenced in the Spark operations are also represented in Atlas, but the metadata for these entities is collected from HMS. When a Spark operation involves files, the metadata for the file system and files is represented in Atlas as file system paths.
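To make the entity model above more concrete, the following sketch builds a process entity that references a Hive table as input and a file system path as output. It is only an illustration: the type names (`spark_process`, `hive_table`, `hdfs_path`), the qualified names, the cluster name `cm`, and the `object_id` helper are assumptions chosen for the example, not values taken from a real cluster.

```python
import json


def object_id(type_name, qualified_name):
    """Build a reference to another entity by its type and unique qualified name.

    Hypothetical helper for this example only.
    """
    return {
        "typeName": type_name,
        "uniqueAttributes": {"qualifiedName": qualified_name},
    }


# A Spark operation modeled as a process entity (all values are assumed).
spark_process = {
    "typeName": "spark_process",
    "attributes": {
        "qualifiedName": "application_0001.query_1",
        "name": "write sales summary",
        # The Hive table is only referenced here; its own metadata comes from HMS.
        "inputs": [object_id("hive_table", "default.sales@cm")],
        # Files touched by the operation appear as file system paths.
        "outputs": [object_id("hdfs_path", "hdfs://ns1/tmp/sales_summary")],
    },
}

print(json.dumps(spark_process, indent=2))
```

The process entity carries only references to the table and the path, which mirrors the split described above: the Spark hook describes the operation, while the details of Hive assets arrive separately from HMS.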

When an action occurs in the Spark instance, the following sequence takes place:

- The Spark instance updates HMS with information about the assets affected by the action.

- The Atlas hook corresponding to HMS collects information for the changed and new assets and forms it into metadata entities. It publishes the metadata to a Kafka topic.

- The Atlas hook corresponding to the Spark instance collects information for the action and forms it into metadata entities. It publishes the metadata to a Kafka topic.

- Atlas reads the messages from the topic and determines which information creates new entities and which information updates existing entities.

- Atlas creates the appropriate entities and determines lineage from existing entities to the new entities.
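The sequence above can be sketched as a small in-memory simulation. This is not how Atlas or its hooks are implemented: the `deque` stands in for the Kafka topic, the dictionary `store` stands in for the Atlas entity store, and every function name, message field, and qualified name below is hypothetical. The sketch assumes entities are matched by qualified name to decide between create and update.

```python
from collections import deque

topic = deque()  # stand-in for the Kafka topic (assumption)


def hms_hook_publish(table_qn, columns):
    """HMS-side hook: publish metadata for a new or changed Hive table."""
    topic.append({"type": "hive_table", "qualifiedName": table_qn,
                  "attributes": {"columns": columns}})


def spark_hook_publish(process_qn, inputs, outputs):
    """Spark-side hook: publish metadata for the action itself."""
    topic.append({"type": "spark_process", "qualifiedName": process_qn,
                  "attributes": {"inputs": inputs, "outputs": outputs}})


def atlas_consume(store):
    """Drain the topic, create or update entities, then derive lineage edges."""
    created, updated = [], []
    while topic:
        msg = topic.popleft()
        qn = msg["qualifiedName"]
        if qn in store:
            # Known qualified name: update the existing entity.
            store[qn]["attributes"].update(msg["attributes"])
            updated.append(qn)
        else:
            # Unknown qualified name: create a new entity.
            store[qn] = {"type": msg["type"],
                         "attributes": dict(msg["attributes"])}
            created.append(qn)
    # Lineage runs from each input to the process, and from the process to each output.
    edges = []
    for qn, entity in store.items():
        if entity["type"] == "spark_process":
            edges += [(src, qn) for src in entity["attributes"]["inputs"]]
            edges += [(qn, dst) for dst in entity["attributes"]["outputs"]]
    return created, updated, edges


# An existing table is already known to the store; the action reads it and writes a new table.
store = {"default.sales@cm": {"type": "hive_table",
                              "attributes": {"columns": ["id"]}}}
hms_hook_publish("default.sales@cm", ["id", "amount"])
hms_hook_publish("default.sales_summary@cm", ["region", "total"])
spark_hook_publish("app-1.query-1",
                   ["default.sales@cm"], ["default.sales_summary@cm"])

created, updated, edges = atlas_consume(store)
print(created)  # ['default.sales_summary@cm', 'app-1.query-1']
print(updated)  # ['default.sales@cm']
print(edges)
```

In this run the existing table is updated, the new table and the process are created, and the two lineage edges connect the source table to the new table through the process entity.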