Creating Input Transforms

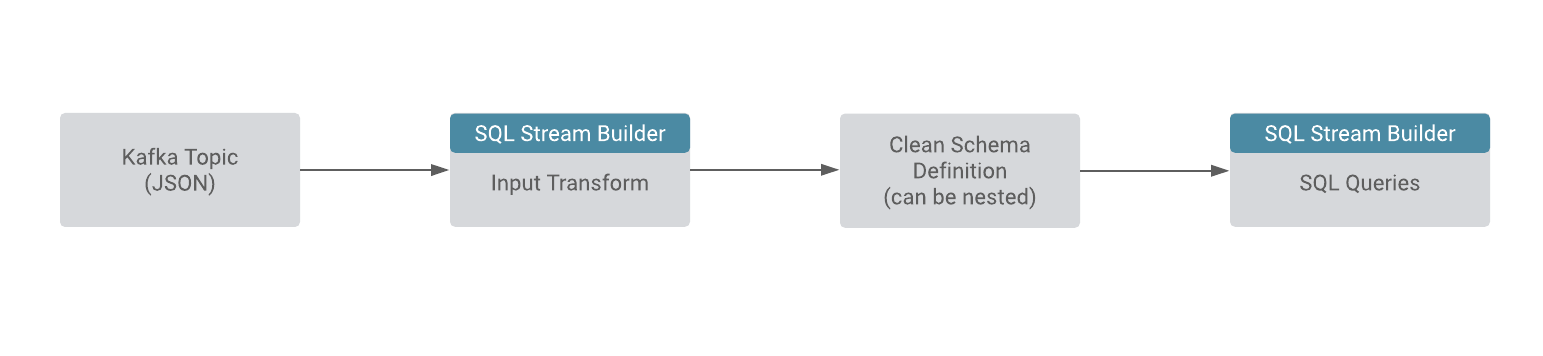

Input Transforms are a powerful way to clean, modify, and arrange data that is poorly organized, changes format, contains fields you do not need, or is otherwise hard to use. With the Input Transform feature of SQL Stream Builder, you can create a JavaScript function that transforms the data after it has been consumed from a Kafka topic and before you run SQL queries on it. Input Transforms are useful in the following situations:

- The source is not in your control, for example, a data feed from a third-party provider

- The format is hard to change, for example, a legacy feed or feeds owned by other teams within your organization

- The messages are inconsistent

- The data from the sources does not have uniform keys, or has no keys at all (like nested arrays), but is still valid JSON

- The schema you want does not match the incoming topic

Input Transforms on Kafka tables have the following characteristics:

- Allows one transformation per source.

- Takes record as the input variable, with the message payload available as a JSON-formatted string (record.value in the example that follows). The input is always named record.

- Emits the output of the last line to the calling JVM. The variable can have any name. In the following example, out holds the parsed object, and the last line emits it as a JSON-formatted string.

A basic input transformation looks like this:

```javascript
var out = JSON.parse(record.value); // record is the input; parse the JSON-formatted string into an object

// add more transformations if needed

JSON.stringify(out); // emit the object as a JSON-formatted string
```
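The same pattern extends to messages with unneeded fields or keyless nested arrays. The following is a minimal sketch, not a definitive implementation. The field names (`id`, `readings`, `debug`) and the output keys are assumptions made up for illustration, and the `transform` wrapper function is hypothetical: it exists only so the logic can be run outside SQL Stream Builder.

```javascript
// Hypothetical wrapper so the logic can be tested outside SQL Stream Builder.
// The input shape (id, readings, debug) is an assumed example, not a real feed.
function transform(record) {
  // record.value is the JSON-formatted string consumed from the topic
  var input = JSON.parse(record.value);

  // Guard against inconsistent messages where readings is missing or not an array
  var readings = Array.isArray(input.readings) ? input.readings : [];

  // Build a flat object with uniform keys; fields such as debug are dropped
  var out = {
    sensor_id: input.id != null ? String(input.id) : null,
    temperature: readings.length > 0 ? readings[0] : null,
    humidity: readings.length > 1 ? readings[1] : null
  };

  // Emit a JSON-formatted string
  return JSON.stringify(out);
}

// Usage with a sample message
var sample = { value: JSON.stringify({ id: 7, readings: [21.5, 48], debug: "ignore me" }) };
console.log(transform(sample)); // prints {"sensor_id":"7","temperature":21.5,"humidity":48}
```

In an actual Input Transform, the function body would be used directly, with `JSON.stringify(out);` as the last line instead of a `return` statement, because the output of the last line is what gets emitted.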