Creating Iceberg tables

Apache Iceberg is an open, high-performance table format for organizing datasets that can contain petabytes of data. Iceberg can be used to add tables to computing engines, such as Apache Hive and Apache Flink, from which the data can be queried using SQL.

As Iceberg is integrated as a connector to Flink, you can use the table format the same way for SQL Stream Builder (SSB). The following table summarizes the Iceberg features that are supported in SSB:

| Feature | SSB |

|---|---|

| Create catalog | Supported |

| Create database | Supported |

| Create table | Supported |

| Alter table | Supported |

| Drop table | Supported |

| Select | Supported |

| Insert into | Supported |

| Insert overwrite | Supported |

| Upsert | Technical preview1 |

| Equality delete | Technical preview |

| Metadata tables | Not supported |

| Rewrite files action | Supported |

Using Hive for Iceberg integration

When using the Hive service located on your cluster, you can add it as a catalog on Streaming SQL Console. Before creating the Iceberg table, ensure that you have added Hive as a catalog using the steps described in Adding catalogs documentation.

CREATE

TABLE statement as the example shows

below:CREATE TABLE iceberg_hive_table (

col_str STRING,

col_int INT

) WITH (

'connector' = 'iceberg',

'catalog-database' = 'test_db',

'catalog-type' = 'hive',

'catalog-name' = 'iceberg_hive_catalog',

'catalog-table' = 'iceberg_hive_table',

'ssb-hive-catalog' = 'ssb_hive_catalog',

'engine.hive.enabled' = 'true'

);| Property | Example | Description |

|---|---|---|

catalog-database |

test_db |

The Iceberg database name in the backend catalog, uses the current Flink database name by default. It will be created automatically if it does not exist when writing records into the Flink table |

catalog-type |

hive |

Type of the catalog |

catalog-name |

iceberg_hive_catalog |

User-specified catalog name. It is required as the connector does not have any default value. |

catalog-table |

iceberg_hive_table |

Name of the Iceberg table in the backend catalog. |

ssb-hive-catalog |

ssb_hive_catalog |

The name of the Hive catalog you have provided when adding Hive as a catalog. |

engine.hive.enabled |

true |

The engine.hive.enabled configuration is required to enable

Hive compatibility. The configuration is automatically set to

true if there is no custom value specified. |

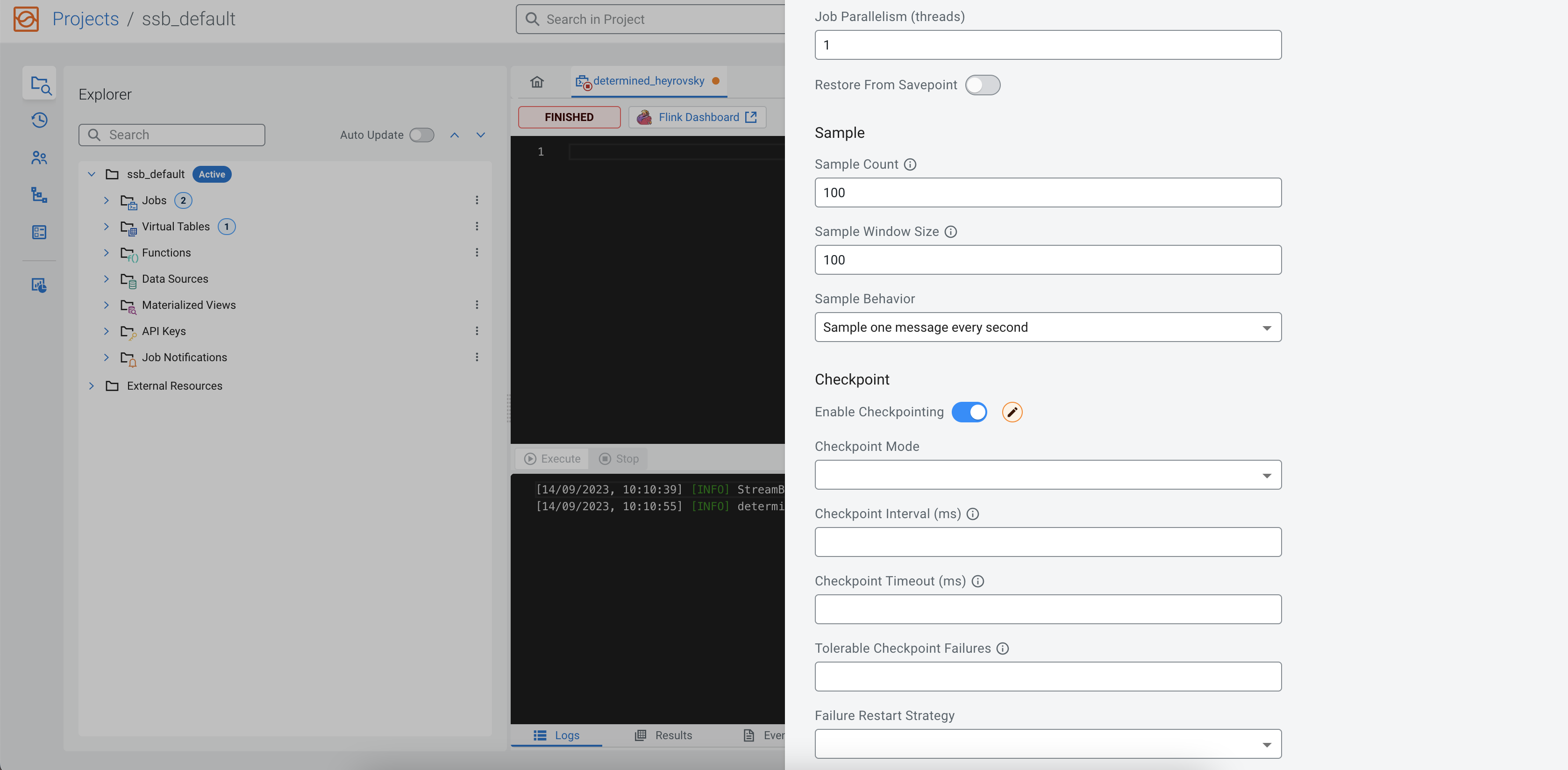

- Access the Job Settings page from the SQL Editor.

- Enable Checkpointing using the toggle, and configure the

checkpointing settings based on your requirement.

Using the Hive service from Cloudera Data Warehouse (CDW)

To access Iceberg tables stored in Cloudera Data Warehouse, follow the configuration steps below.

- Ensure that the

ssbuser has access to theall - database, tablepolicy under the Hadoop SQL service.For more informationn, see the Configuring Hive policies documentation.

- Create ID Broker mapping for the workload user.

For more informationn, see the Creating IDBroker mapping documentation.

- Access Cloudera Manager of the CDW environment.

- Click .

- Download the

hive-conftarball.The downloaded tarball needs to be uploaded to the manager node of the Flink cluster.

- Copy the

hive-confto the manager node of the Flink cluster.scp <location>/hive-conf <workload_username>@<manager_node_FQDN>:. Password:<your_workload_password> - Extract the file under the

/tmpdirectory that is accessible by thessbuser using the following commands:mkdir /opt/hive-conf cd /opt/hive-conf tar -xvf /path/to/hive-conf.tar.gz chmod a+x . chmod -R a+r . - Access the Streaming SQL Console and create the table using the following

example:

CREATE TABLE my_ssb_table ( id INT ) WITH ( 'connector' = 'iceberg', 'catalog-name' = 'internal-use', 'catalog-database' = 'default', 'catalog-table' = 'my_iceberg_table', 'hive-conf-dir' = '/opt/hive-conf', 'engine.hive.enabled' = 'true' );