Replication Manager Overview

Cloudera Manager provides an integrated, easy-to-use management solution for enabling data protection on the Hadoop platform.

Replication Manager enables you to replicate data across data centers for disaster recovery scenarios. Replications can include data stored in HDFS, data stored in Hive tables, Hive metastore data, and Impala metadata (catalog server metadata) associated with Impala tables registered in the Hive metastore. When critical data is stored on HDFS, Cloudera Manager helps to ensure that the data is available at all times, even in case of complete shutdown of a data center.

You can also use the HBase shell to replicate HBase data. (Cloudera Manager does not manage HBase replications.)

This feature requires a valid license. To understand more about Cloudera license requirements, see Managing Licenses.

You can also use Cloudera Manager to schedule, save, and restore snapshots of HDFS directories and HBase tables.

- Select - Choose datasets that are critical for your business operations.

- Schedule - Create an appropriate schedule for data replication and snapshots. Trigger replication and snapshots as required for your business needs.

- Monitor - Track progress of your snapshots and replication jobs through a central console and easily identify issues or files that failed to be transferred.

- Alert - Issue alerts when a snapshot or replication job fails or is aborted so that the problem can be diagnosed quickly.

- You can set it up on files or directories in HDFS and on external tables in Hive—without manual translation of Hive datasets to HDFS datasets, or vice versa. Hive Metastore information is also replicated.

- Applications that depend on external table definitions stored in Hive, operate on both replica and source as table definitions are updated.

- The hdfs user should have access to all Hive datasets, including all

operations. Otherwise, Hive import fails during the replication process. To provide

access, follow these steps:

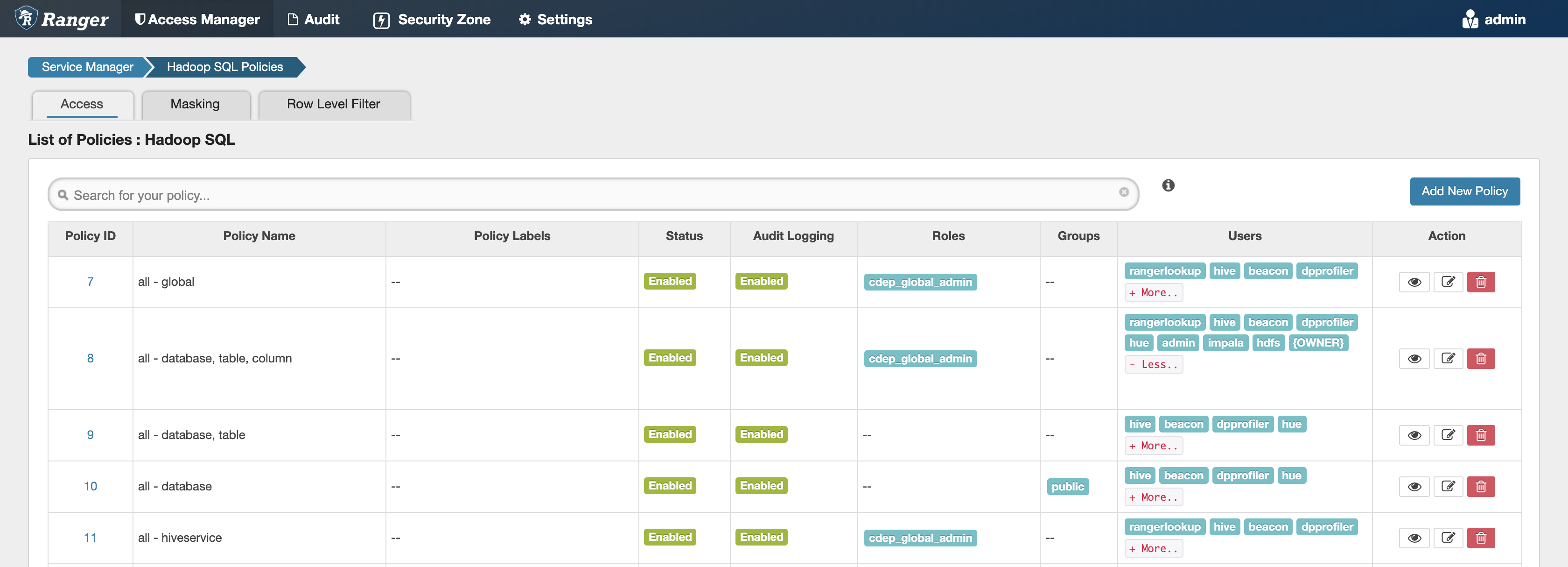

- Log in to Ranger Admin UI.

- Navigate to the section, and provide hdfs user permission to the all-database, table, column policy name.

You can also perform a “dry run” to verify configuration and understand the cost of the overall operation before actually copying the entire dataset.