Creating Kafka tables using the wizard

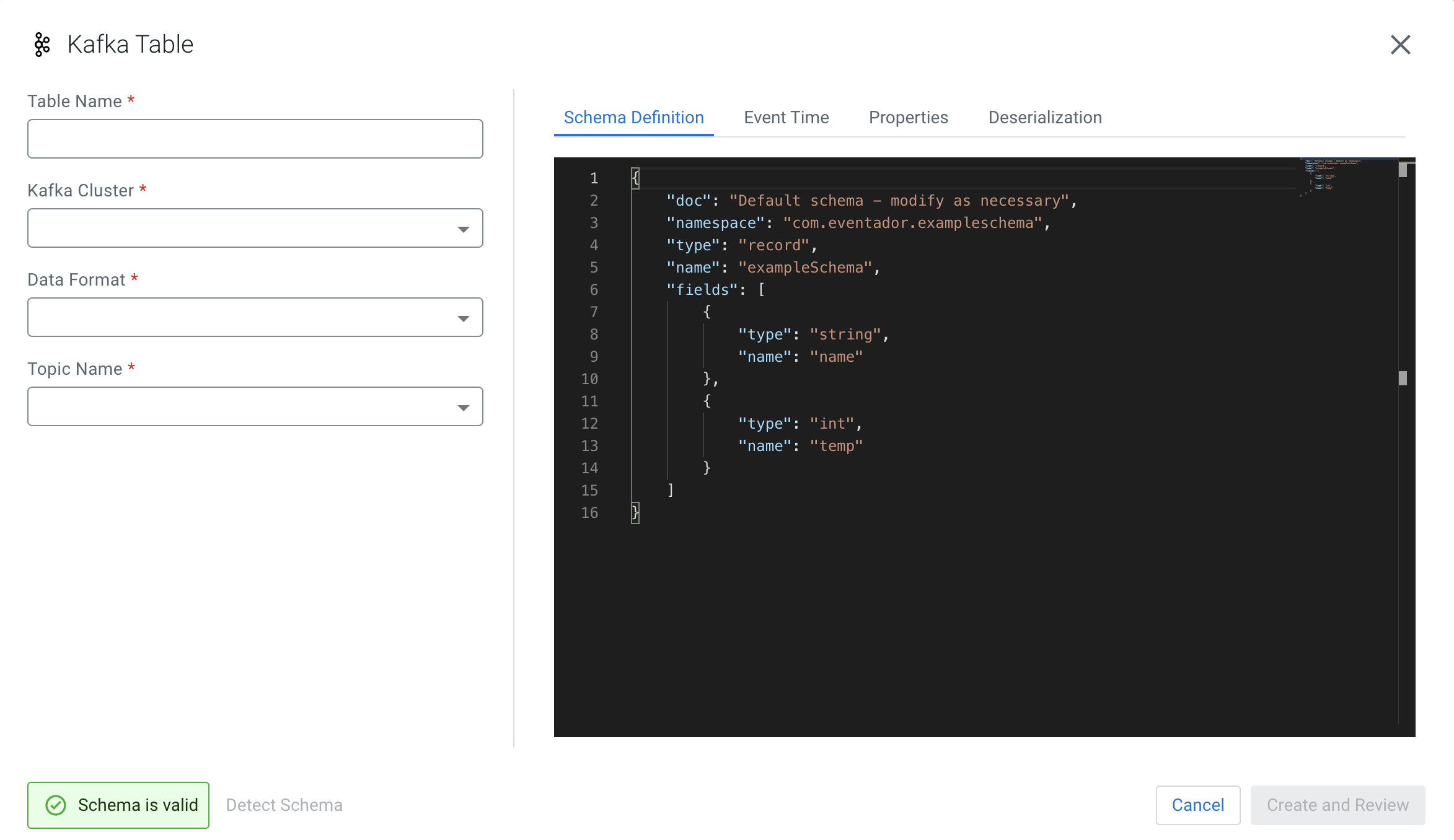

After registering a Kafka data source, you can use the Kafka table wizard in Streaming SQL Console to create a Kafka table.

You can also create Kafka tables using one of the Kafka templates. For more information, see the Kafka connectors and Using connectors with templates sections.

- Make sure that you have registered Kafka as a Data Source.

- Make sure that you have created topics in Kafka.

- Make sure that the Kafka topic contains generated data.

- Make sure that you have the right permissions set in Ranger.

Once created, the Kafka table can be used in a SQL job, either in the FROM clause or in the INSERT INTO statement.
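
As a rough illustration of the two usages, the sketch below reads from one Kafka table and writes to another. The table names `orders` and `large_orders` and the columns `order_id` and `amount` are hypothetical and do not come from this page. Substitute the names and schema of the tables you created with the wizard.

```sql
-- Hypothetical names: `orders` and `large_orders` stand in for Kafka tables
-- created with the wizard; the columns are assumptions as well.

-- Kafka table used as a source, referenced in the FROM clause.
SELECT order_id, amount
FROM orders
WHERE amount > 100;

-- Kafka table used as a sink, referenced in the INSERT INTO statement.
INSERT INTO large_orders
SELECT order_id, amount
FROM orders
WHERE amount > 100;
```

In the second statement, the columns returned by the SELECT need to match the schema of the sink table in order and type.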