Compaction prerequisites

To prevent data loss or an unsuccessful compaction, you must meet the prerequisites before compaction occurs.

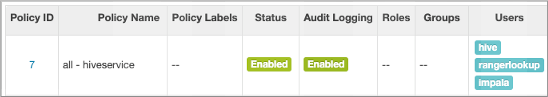

Exclude compaction users from Ranger policies

Compaction causes data loss if Apache Ranger policies for masking or row filtering are enabled and the user hive or any other compaction user is included in the Ranger policies.
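The condition above can be checked against exported policy definitions. The following is a minimal sketch, not a Ranger tool. It assumes each policy is a dict shaped like the JSON that the Ranger Admin REST API returns. The field names `policyType` (1 for masking, 2 for row filtering), `isEnabled`, `dataMaskPolicyItems`, `rowFilterPolicyItems`, `users`, and `groups` are assumptions to verify against your Ranger version. The policy names in the usage example are hypothetical. Only explicitly listed users and the special `public` group are checked. Membership in other groups is not resolved.

```python
from typing import Any, Dict, Iterable, List, Set

# Assumed Ranger policyType codes: 1 = data masking, 2 = row-level filtering.
MASKING_POLICY = 1
ROW_FILTER_POLICY = 2


def risky_policies(policies: Iterable[Dict[str, Any]],
                   compaction_users: Set[str]) -> List[str]:
    """Return names of enabled masking/row-filter policies that apply to a compaction user."""
    flagged = []
    for policy in policies:
        # Assumption: a policy without an isEnabled field is treated as enabled.
        if not policy.get("isEnabled", True):
            continue
        ptype = policy.get("policyType")
        if ptype == MASKING_POLICY:
            items = policy.get("dataMaskPolicyItems", [])
        elif ptype == ROW_FILTER_POLICY:
            items = policy.get("rowFilterPolicyItems", [])
        else:
            continue  # access policies neither mask values nor filter rows
        for item in items:
            users = set(item.get("users", []))
            groups = set(item.get("groups", []))
            # "public" is Ranger's group for all users, so it includes hive too.
            if users & compaction_users or "public" in groups:
                flagged.append(policy.get("name", "<unnamed>"))
                break  # one matching item is enough to flag the policy
    return flagged


# Hypothetical policies for illustration only.
policies = [
    {"name": "mask_ssn", "policyType": 1, "isEnabled": True,
     "dataMaskPolicyItems": [{"users": ["hive", "analyst"]}]},
    {"name": "filter_eu", "policyType": 2, "isEnabled": True,
     "rowFilterPolicyItems": [{"users": ["analyst"]}]},
]
print(risky_policies(policies, {"hive"}))  # ['mask_ssn']
```

Any policy this reports is one where the compaction user must be excluded before compaction runs.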

- Set up Ranger masking or row filtering policies to exclude the user hive. The hive user appears in the Users list in the Ranger Admin UI.

- Identify any other compaction users included in the masking or row filtering policies for tables, as follows:
  - If the hive.compaction.run.as.user property is configured, the user it specifies runs compaction.
  - If a user is configured as the owner of the directory on which the compaction will run, that user runs compaction.
  - If a user is configured as the table owner, that user runs compaction.

- Exclude compaction users from the masking or row filtering policies for tables.
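The identification rules above can be sketched as collecting every candidate compaction user for a table. This is a minimal sketch under stated assumptions. It does not query Hive or HDFS, and it does not decide which rule takes precedence. You supply the three looked-up values yourself: the value of the hive.compaction.run.as.user property, the directory owner, and the table owner. The function name `compaction_users` and the example user names are hypothetical. The user hive is always included, as described above.

```python
from typing import Optional, Set


def compaction_users(run_as_user: Optional[str],
                     directory_owner: Optional[str],
                     table_owner: Optional[str],
                     default_user: str = "hive") -> Set[str]:
    """Collect every user that may run compaction for one table.

    All three inputs are values you look up yourself; None or an empty
    string means the value is not configured.
    """
    candidates = {default_user, run_as_user, directory_owner, table_owner}
    # Drop unset values (None or empty strings).
    return {user for user in candidates if user}


# Hypothetical table: run-as property unset, directory owned by "etl", table owned by "alice".
print(sorted(compaction_users(None, "etl", "alice")))  # ['alice', 'etl', 'hive']
```

The sketch returns the union of all candidates rather than a single user. Excluding every candidate from the masking and row filtering policies is the safe choice when you are unsure which rule applies to a given table.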

Failure to perform these critical steps can cause data loss. For example, if a compaction user is included in an enabled Ranger masking policy, that user sees only the masked data, just like other users who are subject to the policy. Compaction then overwrites the unmasked data, so the underlying tables contain only masked data and the unmasked content is lost. Similarly, if Ranger row filtering is enabled and applies to the compaction user, that user cannot see or access the filtered rows, and those rows are lost from the underlying tables after compaction.
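The failure mode above can be shown with a toy model. This is an illustration, not Hive's compaction code. It assumes a query-based compactor that rewrites the table from exactly the rows that the compaction user can read. The table contents, the column names `region` and `ssn`, and the two policies are hypothetical.

```python
from typing import Dict, List, Set

Row = Dict[str, str]

# Hypothetical stored table contents.
STORED: List[Row] = [
    {"id": "1", "region": "EU", "ssn": "123-45-6789"},
    {"id": "2", "region": "US", "ssn": "987-65-4321"},
]


def read_as(user: str, rows: List[Row],
            masked_users: Set[str], filtered_users: Set[str]) -> List[Row]:
    """Return the rows a user sees under two toy policies.

    Row filtering hides rows whose region is not "US"; masking replaces
    the ssn value with "XXX". Only the effect on the reader is modeled.
    """
    visible = list(rows)
    if user in filtered_users:
        visible = [r for r in visible if r["region"] == "US"]
    if user in masked_users:
        visible = [{**r, "ssn": "XXX"} for r in visible]
    return visible


def query_based_compaction(user: str, rows: List[Row],
                           masked_users: Set[str],
                           filtered_users: Set[str]) -> List[Row]:
    """Toy model: compaction rewrites storage from what the compaction user reads."""
    return read_as(user, rows, masked_users, filtered_users)


# hive included in both policies: masked values and filtered rows are written back.
lossy = query_based_compaction("hive", STORED, {"hive", "analyst"}, {"hive", "analyst"})
# hive excluded from both policies: storage keeps every row and the original values.
safe = query_based_compaction("hive", STORED, {"analyst"}, {"analyst"})

print(lossy)           # [{'id': '2', 'region': 'US', 'ssn': 'XXX'}]
print(safe == STORED)  # True
```

In the lossy case, the EU row and the real SSN values no longer exist in storage. Excluding hive from the policies changes nothing for other users, who are still masked and filtered at read time.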

The compaction worker process executes queries to perform compaction. Those queries are subject to Ranger masking and row filtering like any other query, which exposes Hive data to this data loss (HIVE-27643). MapReduce-based compactions are not subject to the data loss described above because they use the MapReduce framework directly.