JDBC read mode introduction

JDBC read mode is a connection that Hive Warehouse Connector (HWC) makes to HiveServer (HS2) to get transaction information. JDBC read mode is secured through Ranger authorization and supports fine-grained access control, such as column masking. You need to understand how you read Apache Hive tables from Apache Spark through HWC using the JDBC mode. The location where your queries are executed affects configuration. Understanding execution locations and recommendations help you configure JDBC reads for your use case.

Component Interaction

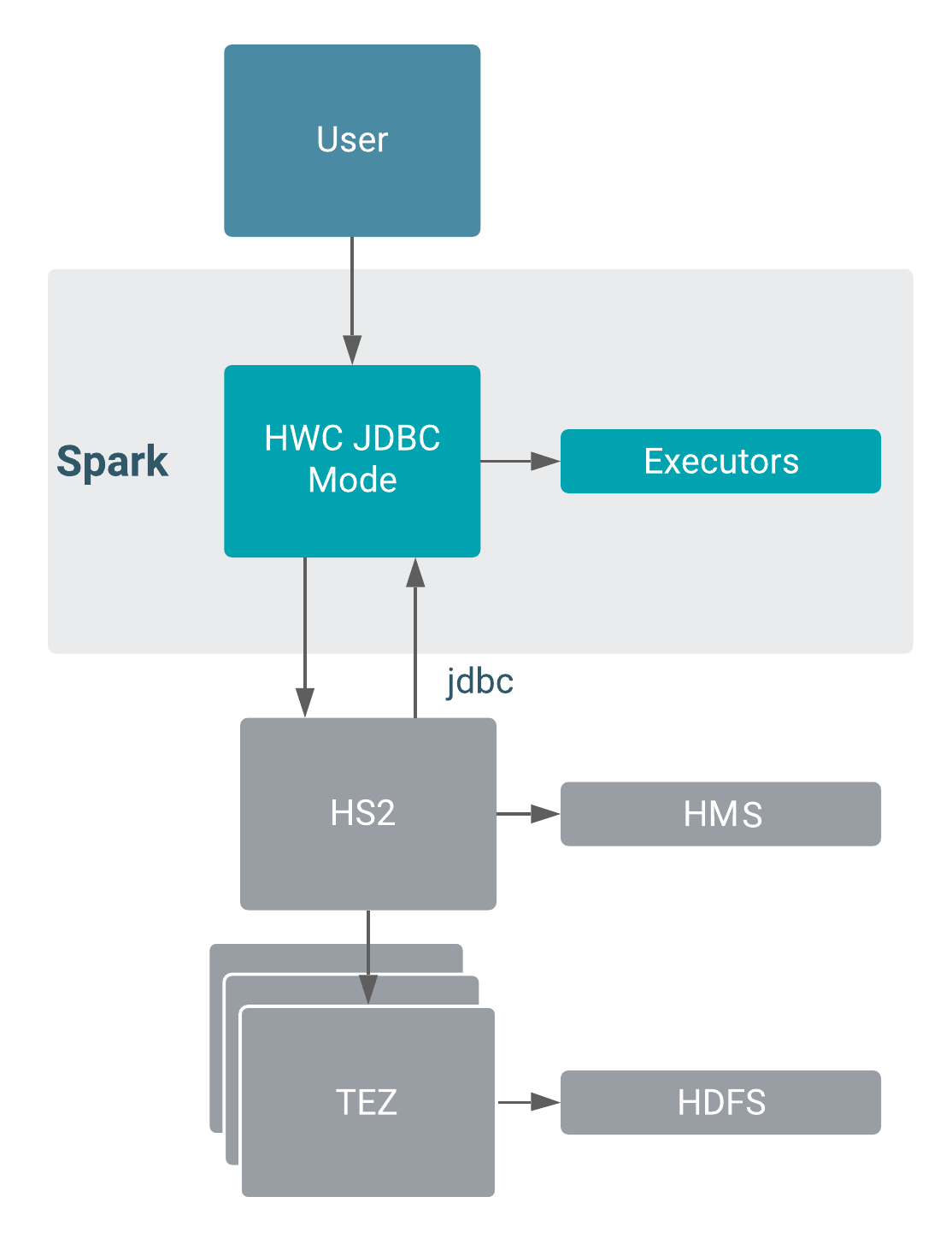

Only one JDBC connection to HiveServer (HS2) is a potential bottleneck in data transfer to Spark. The following diagram shows interaction when you configure HWC in JDBC mode with Hive metastore (HMS), TEZ, and HDFS.

HWC does not use JDBC to write. HWC writes to an intermediate location from Spark, and then executes a LOAD DATA query to write the data. Using HWC to write data is recommended for production.

Configuration

A JDBC read takes place in these locations:

- Driver: Using the Hive JDBC url, connects to Hive and executes the query on the driver side.

- Cluster: From Spark executors, connects to Hive through JDBC and executes the query.

Authorization occurs on the server.

- Client (Driver)

In the client, any failures to connect to HiveServer (HS2) will not be retried.

-

Cluster (Executor)--recommended

In the cluster, any failures to connect to HS2 will be retried automatically.

JDBC reads are recommended for production for workloads having a data size of 1GB or less. Larger workloads are not recommended for JDBC reads in production due to slow performance.

Where your queries are executed affects the Kerberos configurations for HWC.

Optimize reads using HWC session APIs

Using the HWC session API, you can use hive.execute to execute a fast read. This command processes queries through HWC to perform JDBC or Direct Reader reads.