Configuring multiple YARN queues for autoscaling

Manual steps are required to configure multiple YARN queues for load-based autoscaling.

- Set the ResourceManager plugin type to `fine` using Cloudera Manager:

  - In Cloudera Manager, select the YARN service.

  - Go to the Configuration tab.

  - Search for plugin-type and set the following property in the ResourceManager Advanced Configuration Snippet (Safety Valve) for yarn-site.xml field:

    Name: yarn.resourcemanager.autoscaling.plugin-type

    Value: fine

  - Save the changes.

  - Start or restart the YARN ResourceManager service for the changes to apply.

-

Ensure that the queue configuration uses absolute values instead of percentages:

- In the Cloudera Management Console, navigate to the specific Cloudera Data Hub cluster, and scroll to the Services section. Click Queue Manager.

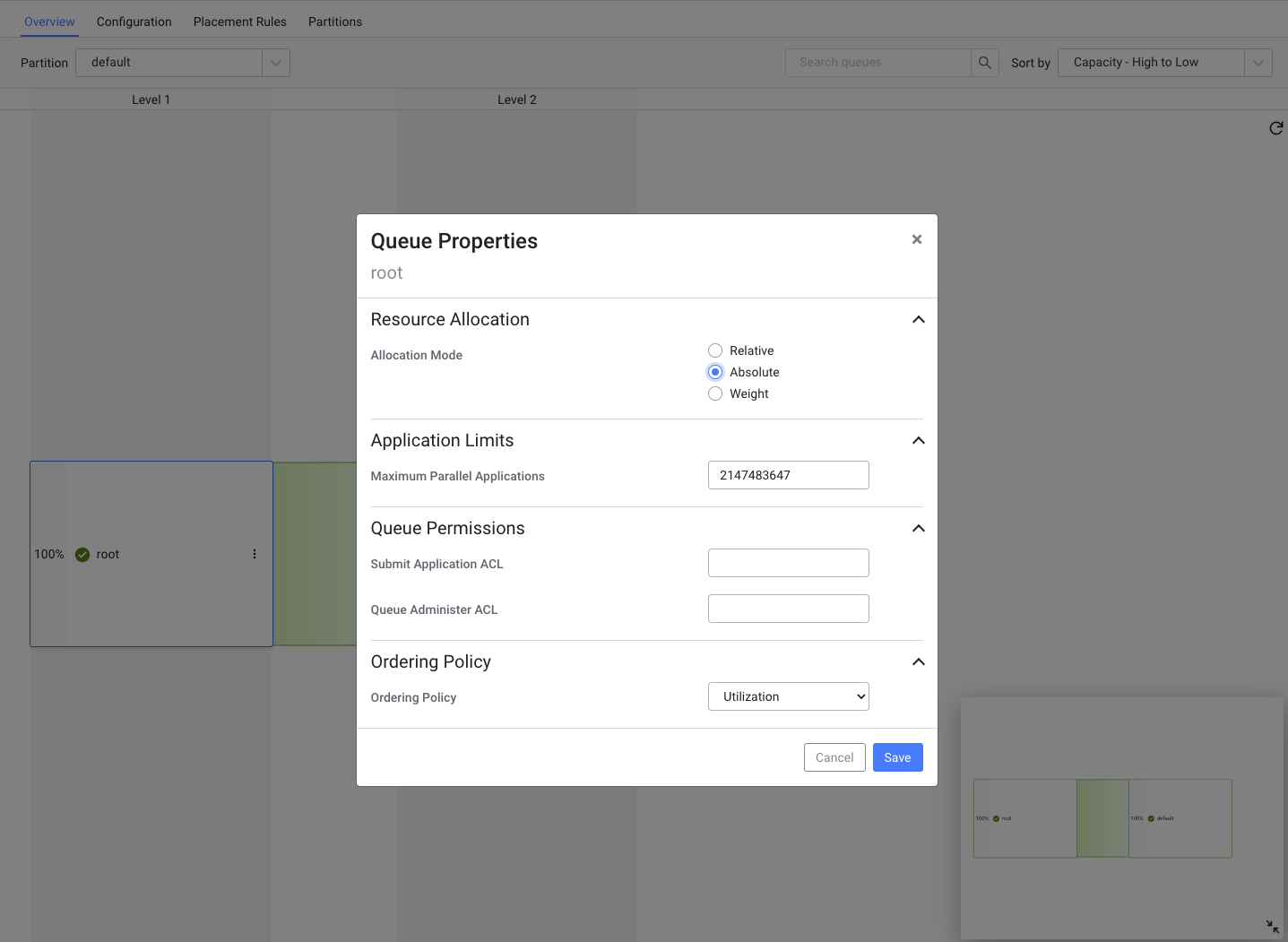

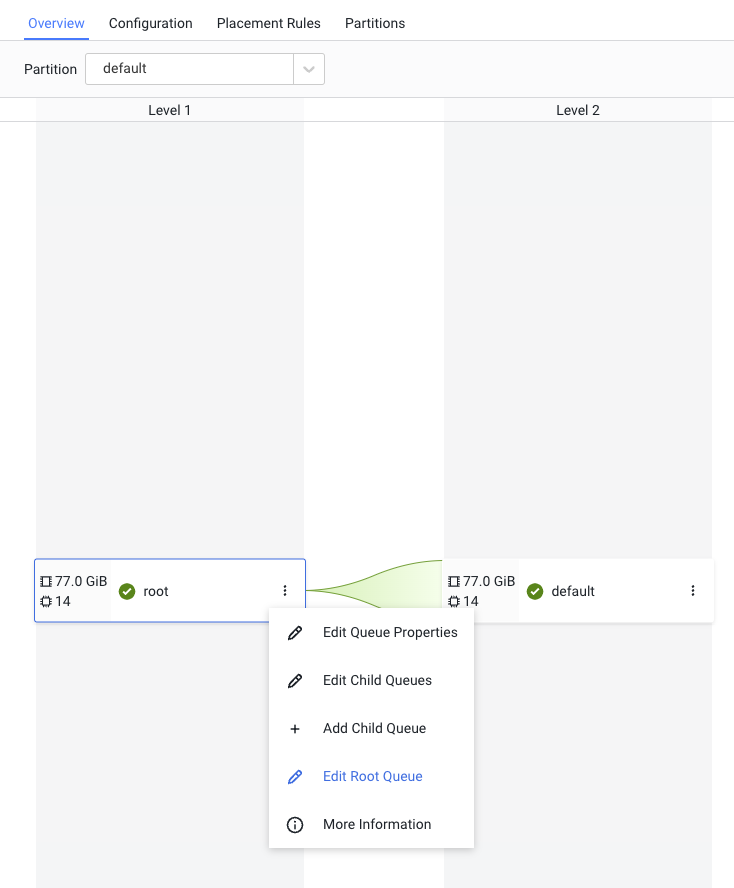

- Click on the three vertical dots on the root and select Edit Queue Properties option.

- In the Queue Properties dialog box, select

Absolute under Resource Allocation mode and click

Save.

- Disable autoscaling on the cluster. You can skip this step if you are on Cloudera Runtime version 7.2.17 or later.

- Manually scale up the autoscaling host group to the new ‘Target max’ number of nodes. You can skip this step if you are on Cloudera Runtime version 7.2.17 or later.

- Configure the queues:

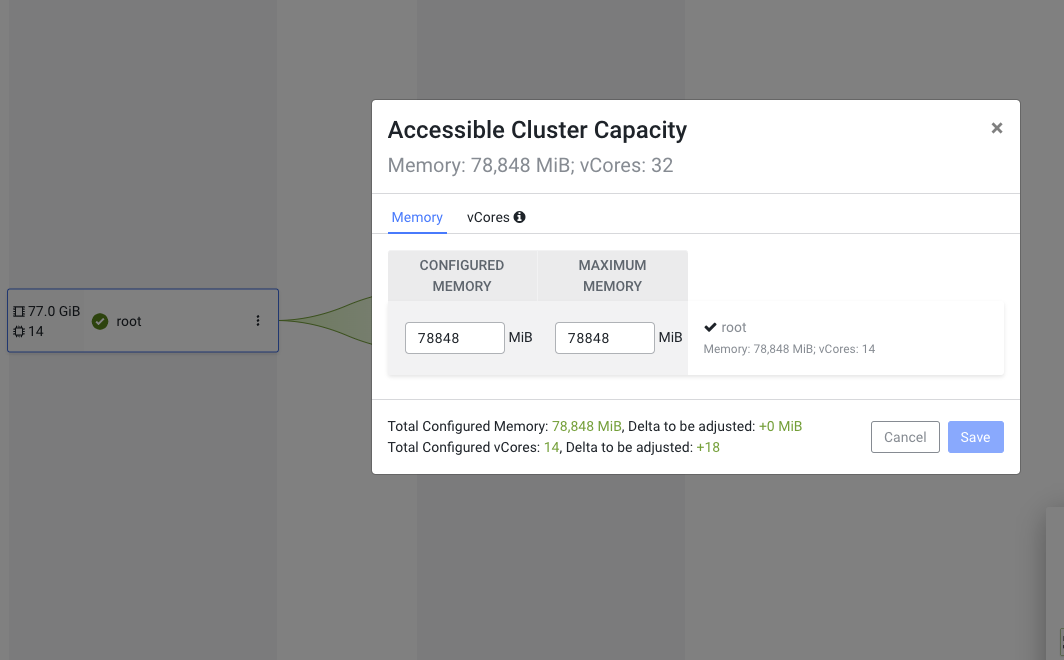

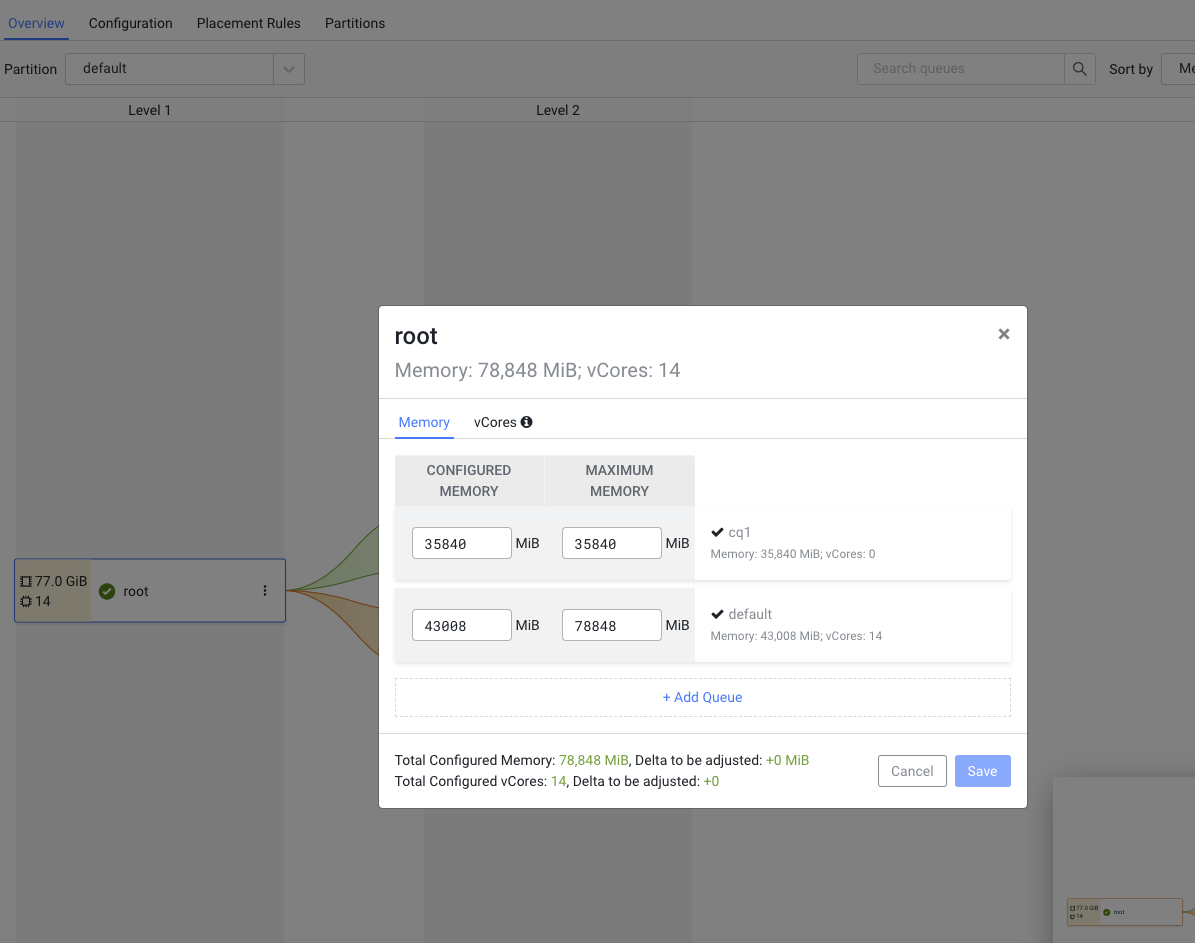

- In the Queue Manager UI, click on the three vertical dots on the root,

select Edit Root Queue and set the ‘Configured Memory’ and

‘Maximum Memory’ to the sum total memory of the maximum number of

nodes that was set in the target field of Autoscale

Policy parameters. Optionally, do the same for the CPUs.

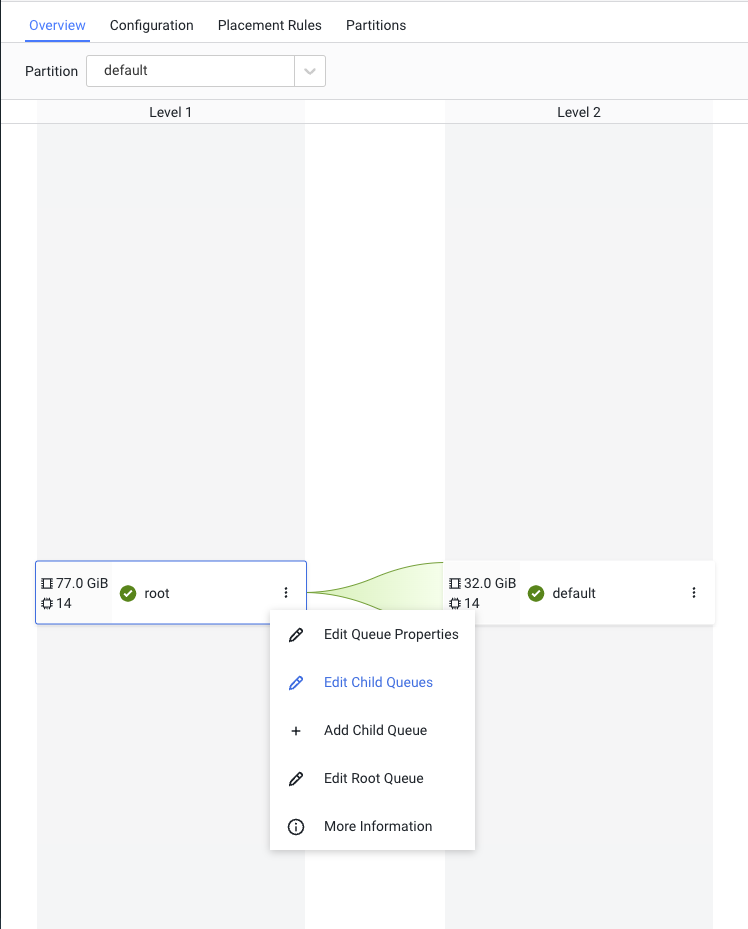

- Edit the Child Queues as needed, and configure the resource allocation. In

the example below, a new queue called `cq1` is added, which has a configured memory

allocation, and maximum memory allocation of 35GB. The `default` queue has a

configured memory allocation of 42GB, and a maximum memory allocation of 77GB.

- Jobs submitted to cq1 queue can cause the cluster to autoscale so that 35GB is available to the cq1 queue (within the cluster max of 77GB).

- Jobs submitted to the default queue can cause the cluster to autoscale so that 77GB is available to the default queue (which is the cluster max in this case).

- Re-enable autoscaling on the cluster.
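The root queue sizing from the "Configure the queues" step can be sanity-checked before the values are entered in Queue Manager. The following is a minimal sketch, not a Cloudera tool: the `Queue` class and both helper functions are hypothetical, and the 7-node maximum with 11 GB of YARN memory per node is an assumed cluster chosen only so that the total matches the 77GB example. The checks reflect the example's constraints (each child maximum fits within the root, and the configured allocations together fit within the root).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Queue:
    """Hypothetical record of one child queue's absolute memory settings."""
    name: str
    configured_gb: int  # 'Configured Memory' in Queue Manager
    maximum_gb: int     # 'Maximum Memory' in Queue Manager


def root_memory_gb(max_nodes: int, yarn_memory_per_node_gb: int) -> int:
    """Total memory at the autoscaling maximum; used for both root fields."""
    return max_nodes * yarn_memory_per_node_gb


def check_child_queues(root_gb: int, children: List[Queue]) -> List[str]:
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    for q in children:
        if q.configured_gb > q.maximum_gb:
            problems.append(f"{q.name}: configured memory exceeds its maximum")
        if q.maximum_gb > root_gb:
            problems.append(f"{q.name}: maximum memory exceeds the root queue")
    total_configured = sum(q.configured_gb for q in children)
    if total_configured > root_gb:
        problems.append("configured memory of all child queues exceeds the root queue")
    return problems


# Assumed cluster (not from the documentation): 7 nodes at most, 11 GB each.
root_gb = root_memory_gb(max_nodes=7, yarn_memory_per_node_gb=11)

# Child queues taken from the example in the text.
children = [Queue("cq1", 35, 35), Queue("default", 42, 77)]

print(root_gb)                                # 77
print(check_child_queues(root_gb, children))  # []
```

If the autoscale target maximum is changed later, rerunning the calculation with the new node count shows whether the root queue values and child allocations need to be updated.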