Creating a Model Endpoint using the UI

The Create Model Endpoint page allows you to select a specific Cloudera AI Inference service instance and a model version from Cloudera AI Registry to create a new model endpoint.

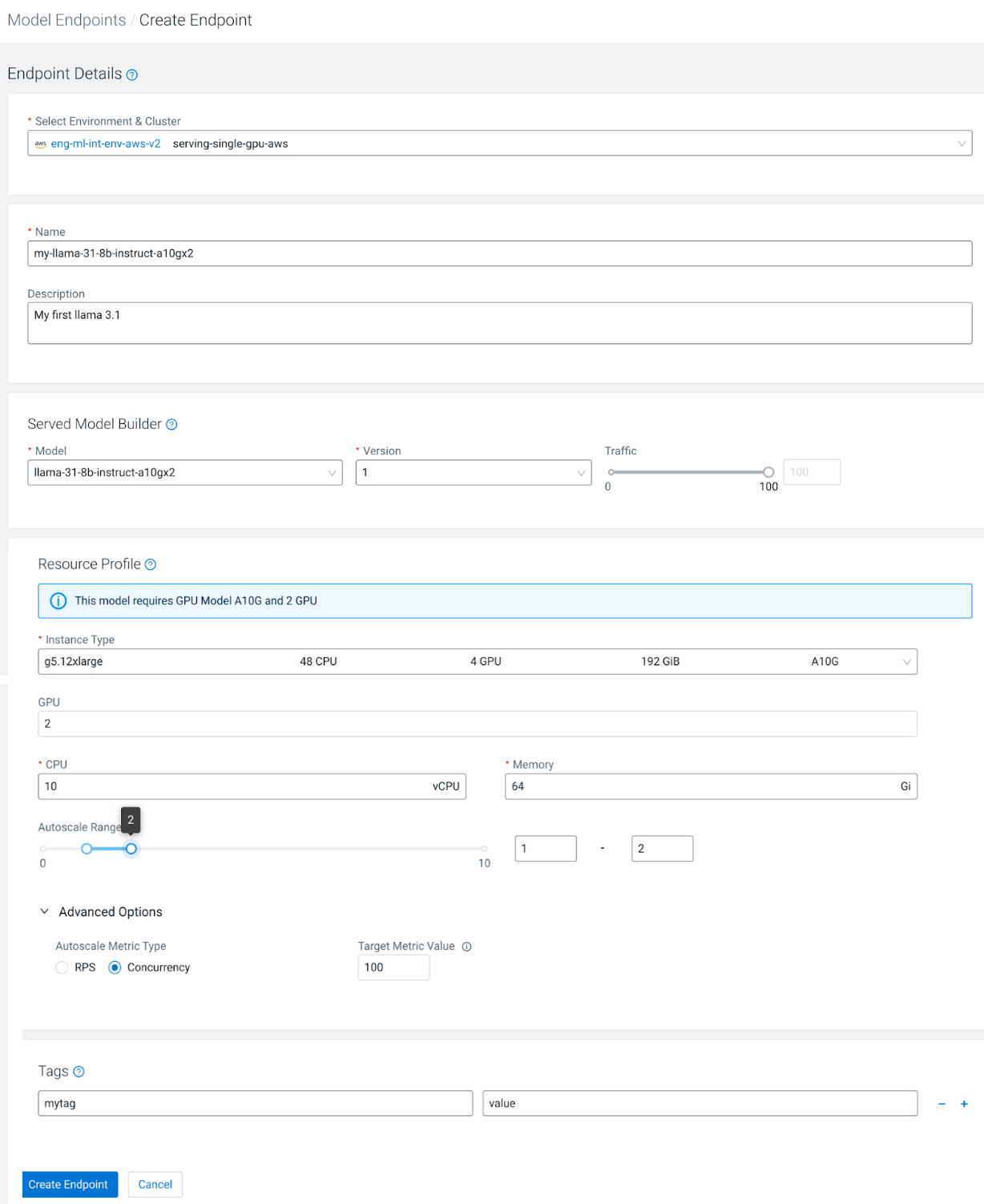

The following steps illustrate how to create a Llama 3.1 model endpoint.

- In the Cloudera console, click the Cloudera AI tile.

  The Cloudera AI Workbenches page displays.

- Click Model Endpoints under Deployments on the left navigation menu.

  The Model Endpoints landing page is displayed.

- Click the Create Endpoint button.

  The Create Endpoint page displays the details to create an endpoint.

- In the Select Environment & Cluster dropdown list, select your Cloudera environment and the Cloudera AI Inference service instance within which you want to create the model endpoint.

- In the Name textbox, enter a name for the model endpoint.

- In the Description textbox, add a short description of the model endpoint. The description must be fewer than 5000 characters.

- Under Served Model Builder, select the model (Model) and the model version (Version) that you want to deploy from the dropdown lists. Using the Traffic slider bar, specify the traffic split between the different model versions that you deploy. For the first model version, the traffic is always set to 100% and you cannot change it.

- Under Resource Profile, select the compute node instance type on which you want to run your model replicas from the Instance Type dropdown list. For NVIDIA NIM models, this field is mandatory, and the instance type you choose depends on the capabilities of the instance type and on what the NVIDIA NIM requires. The field is optional for normal predictive model endpoints.

- In the GPU field, specify the number of GPUs each model endpoint replica requires. This field gets populated automatically for NVIDIA NIM models.

- In the CPU field, specify the number of CPUs each model endpoint replica requires.

- In the Memory field, specify the amount of CPU memory each model endpoint replica requires.

- Using the Autoscale Range slider bar, specify the minimum and maximum number of replicas for the model endpoint. Based on which autoscaling parameter you choose, the system scales the number of replicas to meet the incoming load.

- Under Advanced Options, in Autoscale Metric Type, select Requests per second (RPS) or Concurrency per replica as the autoscale metric type of the model endpoint.

  If you choose to scale by RPS and set the Target Metric Value to 200, the system automatically adds a new replica when a single replica is handling 200 or more requests per second. If the RPS falls below 200, the system scales down the model endpoint by terminating a replica.

- If your administrator has enabled Fine-grained Access Control, you must define, during endpoint creation, access levels for the users or groups that are assigned the MLUser or MLAdmin resource roles. Three access levels can be specified for Model Endpoints:

  - View: The model endpoint appears in the Model Endpoints list and the listEndpoints API. Users can access model endpoint metadata.

  - Access: The user or group can run inference on the model endpoint.

  - Manage: The user or group can view the endpoint, run inference, and modify or delete the endpoint.

- In Tags, add any custom key and value pairs for your own use.

- Click Create Endpoint to create the model endpoint.

  It can take tens of minutes for the model endpoint to be ready. The time taken depends on the following factors:

  - Whether a new node has to be added to the cluster.

  - The time taken to pull the necessary container images.

  - The time taken to download model artifacts to the cluster nodes from the Cloudera AI Registry.
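The RPS-based scaling behavior described in the Autoscale Metric Type step can be sketched in a few lines of Python. This is only a simplified threshold rule under stated assumptions, not the actual autoscaler of the Cloudera AI Inference service: the function name `next_replica_count` and all of its parameters are hypothetical, scale-to-zero is not modeled, and the scale-down condition (remove a replica only if the remaining replicas would still stay below the target) is an assumption added to avoid oscillation.

```python
def next_replica_count(current: int, total_rps: float, target_rps: float,
                       min_replicas: int, max_replicas: int) -> int:
    """Return the replica count after one hypothetical scaling decision.

    current      -- replicas running now
    total_rps    -- requests per second across the whole endpoint
    target_rps   -- Target Metric Value (per replica), e.g. 200
    min_replicas -- lower bound of the Autoscale Range (assumed >= 1 here)
    max_replicas -- upper bound of the Autoscale Range
    """
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("expected 1 <= min_replicas <= max_replicas")
    if target_rps <= 0:
        raise ValueError("target_rps must be positive")

    # Keep the starting point inside the configured range.
    current = max(min_replicas, min(current, max_replicas))
    per_replica = total_rps / current

    if per_replica >= target_rps:
        # A single replica handles the target or more: add one replica.
        desired = current + 1
    elif current > 1 and total_rps / (current - 1) < target_rps:
        # Assumption: only remove a replica if the rest stay under target.
        desired = current - 1
    else:
        desired = current

    # The Autoscale Range always bounds the result.
    return max(min_replicas, min(desired, max_replicas))


if __name__ == "__main__":
    # One replica at exactly 200 RPS with a target of 200: scale up.
    print(next_replica_count(1, 200, 200, min_replicas=1, max_replicas=4))
    # Two replicas sharing 150 RPS: one replica is enough, scale down.
    print(next_replica_count(2, 150, 200, min_replicas=1, max_replicas=4))
```

With a target of 200, the first call models the scale-up case from the step above, and the second models the scale-down case. The Autoscale Range acts as a hard clamp on both.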

In the Llama 3.1 example that these steps illustrate, we create a model endpoint for the instruct variant of Llama 3.1 8B, which has been optimized to run on two NVIDIA A10G GPUs per replica. The cluster we deploy into is in AWS, and it has a node group consisting of g5.12xlarge instance types. No other node groups in this example have instance types containing A10G GPUs, so the g5.12xlarge instance type is the only choice. For NVIDIA NIM models that specify GPU models and count, the UI fills in the GPU field of the resource configuration page automatically.
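The reasoning behind the instance type choice can be illustrated with a small filter over the node groups of a cluster. This is a hedged sketch only: the `NodeGroup` class, the `compatible_instance_types` function, and the CPU-only `m5.2xlarge` node group are hypothetical and are not part of any Cloudera API. The sketch assumes that an instance type qualifies when its GPU model matches the one the NVIDIA NIM requires and it carries at least as many GPUs as one replica needs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class NodeGroup:
    """Hypothetical description of one node group in the cluster."""
    instance_type: str
    gpu_model: Optional[str]  # None for CPU-only instance types
    gpu_count: int            # GPUs available on a single node


def compatible_instance_types(node_groups: List[NodeGroup],
                              gpu_model: str,
                              gpus_per_replica: int) -> List[str]:
    """Return instance types that can host one replica of the model."""
    return sorted({
        group.instance_type
        for group in node_groups
        if group.gpu_model == gpu_model
        and group.gpu_count >= gpus_per_replica
    })


# Illustrative cluster: one A10G node group plus an assumed CPU-only group.
cluster = [
    NodeGroup("g5.12xlarge", gpu_model="A10G", gpu_count=4),
    NodeGroup("m5.2xlarge", gpu_model=None, gpu_count=0),
]

# The Llama 3.1 8B instruct profile in this example needs two A10G GPUs.
print(compatible_instance_types(cluster, "A10G", 2))  # ['g5.12xlarge']
```

Because only one node group offers A10G GPUs, the filter returns a single candidate, which matches the situation described in the example.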