Configuring Cloudera Copilot

After setting up credentials, you must make configuration changes in the Cloudera AI UI before using Cloudera Copilot.

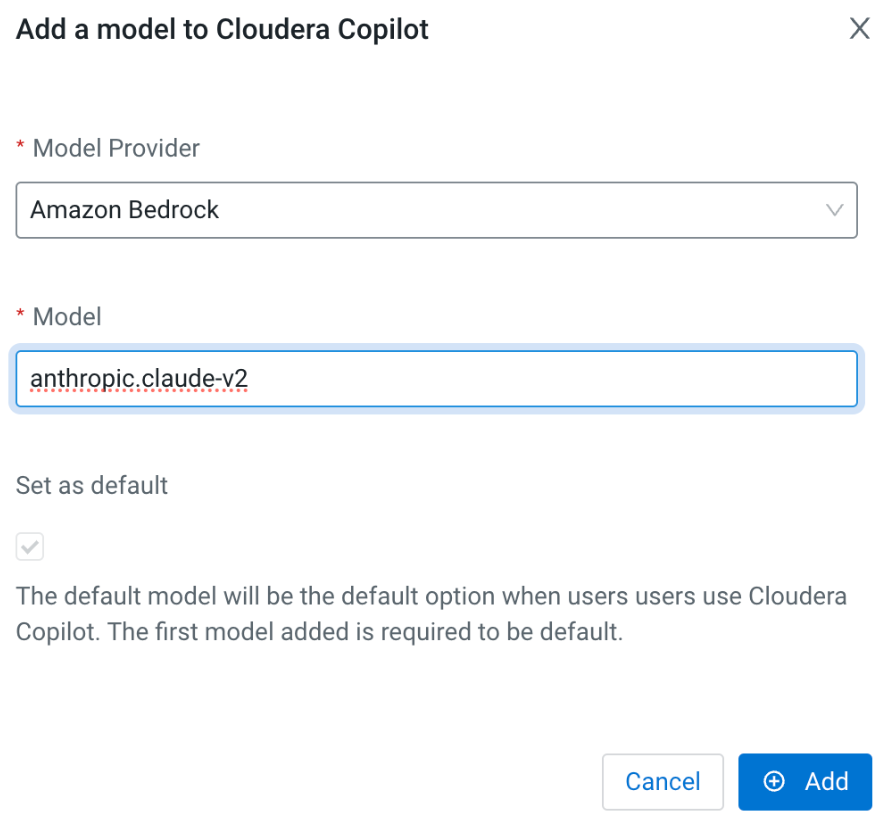

Choosing a model

Models vary in accuracy and cost. Larger models generally provide more accurate responses but cost more. For Cloudera AI Inference service models, larger models require more expensive GPU hardware to run, while in Amazon Bedrock, larger models cost more per prompt.

Language models vs. embedding models

Cloudera Copilot supports the following model types:

- Language models: These are used for code completion, debugging, and chat.

- Embedding models: These are used for Retrieval Augmented Generation (RAG) use cases. RAG lets you augment language model responses with specific information that the language model is not otherwise aware of. For example, you can provide internal company documents that map company acronyms to their definitions.
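The retrieval step behind RAG can be illustrated with a minimal sketch. This is not how Cloudera Copilot implements RAG: the `embed` function below is a toy bag-of-words stand-in for a real embedding model, and the documents, acronyms, and function names are all hypothetical. It only shows the general idea of finding the most relevant document and placing it in the prompt that is sent to the language model.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents (illustrative only).
DOCUMENTS = [
    "TPS stands for Testing Procedure Specification.",
    "QBR stands for Quarterly Business Review.",
    "The cafeteria is open from 8 am to 3 pm.",
]


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents):
    """Prepend the retrieved context to the question for the language model."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What does QBR mean?", DOCUMENTS))
```

In a real deployment, the embedding model would produce dense vectors instead of word counts, which lets retrieval match on meaning and not only on shared words.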

Recommended models

- Language models:

  - Llama 3.1 Instruct 70b (AI Inference)

  - Claude v3.5 Sonnet (Amazon Bedrock)

- Embedding models:

  - E5 Embedding v5 (AI Inference)

  - Titan Embed Text v2 (Amazon Bedrock)