In certain scenarios, you might need to interact with data that resides outside of the

data lake S3 buckets. You can add a bucket to S3, enable access to the bucket, and then, define

external tables based on the data, such as a CSV file, you put into the bucket.

In this task, you see how to add access to an S3 bucket to create an external table based on CSV data using Hue.

You need to enable read/write access to the external S3 bucket before creating the table. From

the command line of your cluster, you can run HDFS CLI commands on the S3 bucket. You can also

use the S3 bucket for uploading a UDF jar for registration, and then include

UDFs in queries from your cluster.

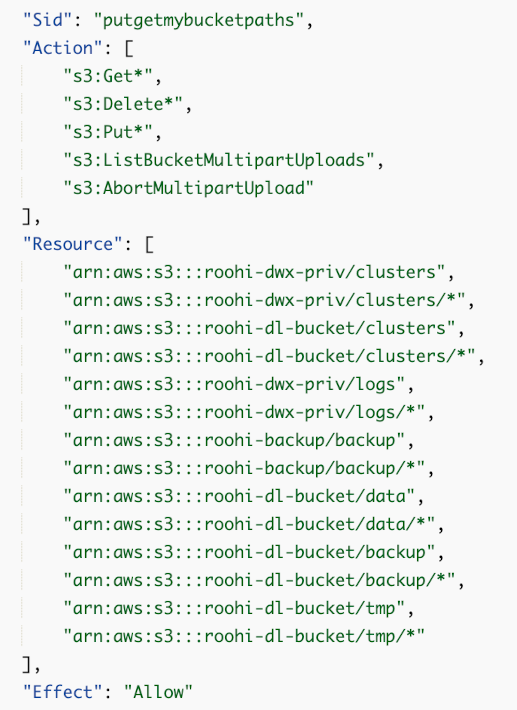

In this task, first you note the Managed Policy ARN attached to the Node Instance Role

used while activating the cluster. Next, you edit the managed policy in the JSON file, for

example noderole-inline-policy.json.

-

In your managed policy, locate the sid "putgetmybucketpaths" for editing.

-

Append resources to the resource section for the buckets you added.

For example, you added a bucket more-sales-data. To enable access to the

more-sales-data bucket, you append resources to the end of the "resource" section, as

shown in the last two resource names:

"Resource":[

...

"arn:aws:s3:::roohi-dl-bucket/backup/*",

"arn:aws:s3:::more-sales-data",

"arn:aws:s3:::more-sales-data/*"

],

-

Click Review policy in the lower right corner of the page,

and then click Save changes.

You can now access the more-sales-data bucket outside your data lake from Hue in

your Cloudera Data Warehouse service cluster. For example, you can create

external Hive tables that point to the bucket, and join those external tables with tables

already in your data lake. You can govern Cloudera Data Warehouse user access

to this external S3 bucket using Ranger Hadoop SQL Policies.