Convert Spark Submits to CDE API Requests

You can run each of the commands from the previous section from a terminal or from a third-party application such as GitLab or a Jupyter Notebook.

As mentioned in the introductory section, the API requires a CDE token, which is issued based on your user credentials and the CDE Virtual Cluster you want to connect to.

You need the following environment variables:

- CDP WorkloadUsername and WorkloadPassword. You can contact your CDP Administrator for the credentials.

- ACCESS_TOKEN. For information on how to get an access token, see Getting an Access Token.

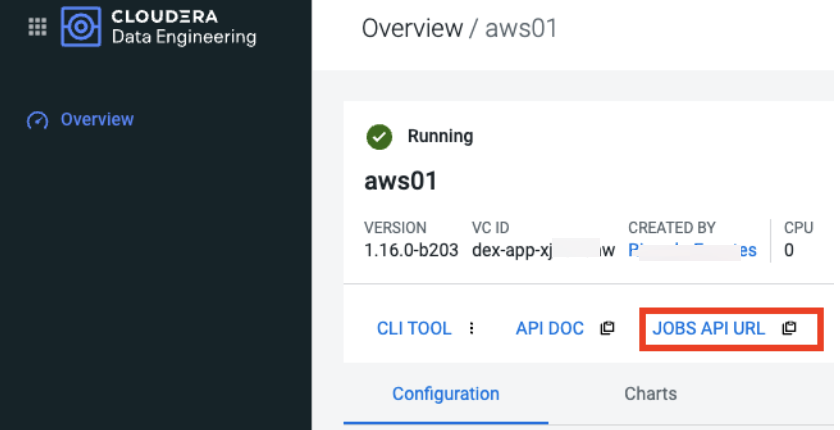

- JOBS_API_URL. You can copy the URL from the CDE Virtual Cluster Service Details page as shown below.

Before proceeding with the examples, save these values as environment variables in your local environment. For example:

export ACCESS_TOKEN=<access_token>
export JOBS_API_URL=<jobs_api_url>

Create a CDE Resource
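Each request in this walkthrough expands $ACCESS_TOKEN and $JOBS_API_URL, so an unset variable produces an empty bearer token or a malformed URL. Before sending the first request, a small guard can catch that early. The sketch below is a hypothetical helper and not part of CDE; the function name require_env is an assumption made for illustration.

```shell
# Hypothetical helper (not part of CDE): report unset or empty variables.
# Prints each missing name to stderr and returns non-zero if any is missing.
require_env() {
  missing=0
  for name in "$@"; do
    # Look up the value of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: stop a script early instead of sending a malformed request.
# require_env ACCESS_TOKEN JOBS_API_URL || exit 1
```

Calling the guard at the top of a CI script keeps a missing secret from surfacing later as an opaque authorization error.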

# Create a CDE Resource with the API
curl -H "Authorization: Bearer $ACCESS_TOKEN" -X POST \
  "$JOBS_API_URL/resources" -H "Content-Type: application/json" \
  -d "{ \"name\": \"cde_api_resource\"}"

List CDE Resources at Virtual Cluster Level

curl -H "Authorization: Bearer $ACCESS_TOKEN" -X GET \
  "$JOBS_API_URL/resources"

Create CDE job from Resource
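The job definition in this section points at a file named pyspark.py inside a mounted resource, so that file has to exist in the resource before the job can run. A minimal sketch of the upload step follows. It assumes the Jobs API accepts a multipart PUT on /resources/<resource_name>/<file_name>; check this against the API reference for your CDE version. The function names resource_file_url and upload_to_resource are hypothetical.

```shell
# Hypothetical helper: build the upload URL for a file inside a resource.
# Assumption: the Jobs API accepts PUT on /resources/<resource>/<file name>.
resource_file_url() {
  # Strip one trailing slash from JOBS_API_URL, then append the path.
  printf '%s/resources/%s/%s' "${JOBS_API_URL%/}" "$1" "$(basename "$2")"
}

# Hypothetical wrapper: upload a local file into an existing resource.
# $1 is the resource name, $2 is the local file path.
upload_to_resource() {
  curl -H "Authorization: Bearer $ACCESS_TOKEN" -X PUT \
    "$(resource_file_url "$1" "$2")" \
    -F "file=@$2"
}

# Example (resource name and local path are placeholders):
# upload_to_resource <resource_name> ./pyspark.py
```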

curl -H "Authorization: Bearer $ACCESS_TOKEN" -X POST "$JOBS_API_URL/jobs" \
  -H "accept: application/json" \
  -H "Content-Type: application/json" \
  -d "{ \"name\": \"cde_cicd_job\", \"type\": \"spark\", \"retentionPolicy\": \"keep_indefinitely\", \"mounts\": [ { \"dirPrefix\": \"/\", \"resourceName\": \"cde_api_resource\" } ], \"spark\": { \"file\": \"pyspark.py\"}, \"schedule\": { \"enabled\": false} }"

Run CDE Job

curl -H "Authorization: Bearer $ACCESS_TOKEN" \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -X POST "$JOBS_API_URL/jobs/cde_cicd_job/run" \
  -d '{"overrides": {"spark": {"driverCores": 2, "driverMemory": "4g", "executorCores": 4, "executorMemory": "4g", "numExecutors": 4}}}'
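The sizing values in the run request above are hard-coded in the JSON body. When the same job is triggered from a pipeline with different sizes, it can help to build the overrides object from arguments. The sketch below does that; the function names build_run_payload and run_cde_job are hypothetical, and the field names are copied from the request above.

```shell
# Hypothetical helper: build the "overrides" JSON body for a job run.
# Arguments: driver cores, driver memory, executor cores,
#            executor memory, number of executors.
build_run_payload() {
  printf '{"overrides":{"spark":{"driverCores":%d,"driverMemory":"%s","executorCores":%d,"executorMemory":"%s","numExecutors":%d}}}' \
    "$1" "$2" "$3" "$4" "$5"
}

# Hypothetical wrapper: trigger a run of the named job with the given sizing.
# $1 is the job name; the remaining arguments go to build_run_payload.
run_cde_job() {
  job_name="$1"
  shift
  curl -H "Authorization: Bearer $ACCESS_TOKEN" \
    -H "accept: application/json" \
    -H "Content-Type: application/json" \
    -X POST "$JOBS_API_URL/jobs/$job_name/run" \
    -d "$(build_run_payload "$@")"
}

# Example: same sizing as the request above.
# run_cde_job cde_cicd_job 2 4g 4 4g 4
```

Keeping payload construction in its own function makes it possible to inspect the JSON without contacting the cluster.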