HDFS HA

You must verify the HDFS HA configuration and set up the Failover Controller.

-

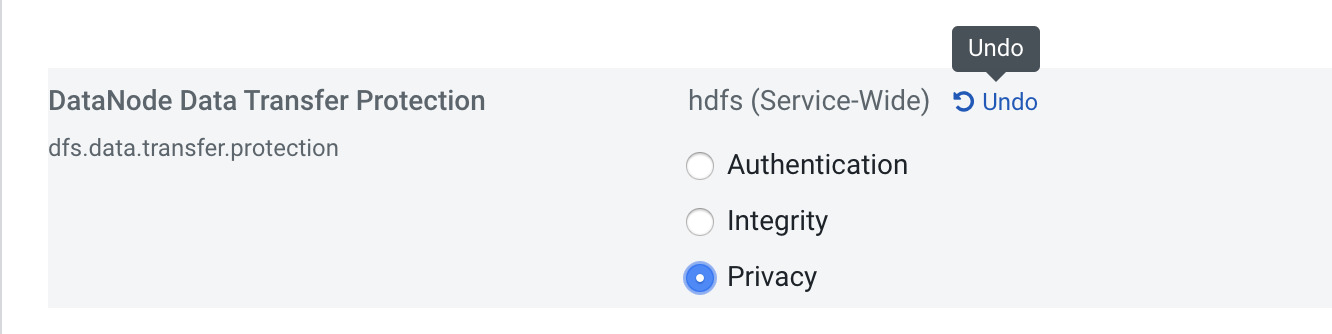

Cloudera Manager does not support the comma separated entries. Review

dfs.data.transfer.protection and

hadoop.rpc.protection parameters. If SSL is not enabled, then click

Undo. This will clear the selections.
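As a rough illustration of the check in this step, the sketch below flags a property value that holds more than one comma-separated entry. The helper name `is_comma_separated` and the sample values are assumptions made for this example; they are not part of Cloudera Manager.

```shell
#!/bin/sh
# Hypothetical helper (not a Cloudera tool): succeeds when a property
# value contains more than one comma-separated entry.
is_comma_separated() {
  case "$1" in
    *,*) return 0 ;;
    *)   return 1 ;;
  esac
}

# Sample values for illustration only; substitute the values configured
# for dfs.data.transfer.protection and hadoop.rpc.protection.
for value in "privacy" "authentication,privacy"; do
  if is_comma_separated "$value"; then
    echo "multiple entries: $value"
  else
    echo "single entry: $value"
  fi
done
```

On a cluster host, the value seen by the local client configuration can usually be read with `hdfs getconf -confKey hadoop.rpc.protection`, although that may differ from what is set in Cloudera Manager.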

- Start the HDFS service.

  The Failover Controller fails to start. Continue with step 3 or step 4.

- Format the ZooKeeper Failover Controller ZNode. SSH to the Failover Controller host and run the following commands, as in this example:

  `# cd /var/run/cloudera-scm-agent/process/<xx>-hdfs-FAILOVERCONTROLLER`

  `/opt/cloudera/parcels/CDH/bin/hdfs --config /var/run/cloudera-scm-agent/process/<xx>-hdfs-FAILOVERCONTROLLER/ zkfc -formatZK`

  - Check the output in the log for success. If the error is:

    `ERROR tools.DFSZKFailoverController: DFSZKFailOverController exiting due to earlier exception java.io.IOException: Running in secure mode, but config doesn't have a keytab`

    then the ZooKeeper nodes are not created. You must manually create the ZNodes so that the Failover Controllers can start and an Active NameNode can be elected.

  - With the zookeeper-client, open a ZooKeeper shell: `zookeeper-client -server <a_zk_server>`

  - Create the required ZNodes:

    `create /hadoop-ha`

    `create /hadoop-ha/<namenode_namespace>`
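The command-line part of this step can be sketched as a small shell script. This is a minimal sketch under stated assumptions: the helper names are made up for illustration, the rule of picking the most recently modified `*-hdfs-FAILOVERCONTROLLER` directory is a guess at how to resolve `<xx>`, and `nameservice1` is only a sample value for `<namenode_namespace>`. The script prints the commands rather than running them.

```shell
#!/bin/sh
# Sketch only: helper names, the "newest directory" rule, and the sample
# nameservice are illustrative assumptions, not Cloudera-provided tooling.

PROCESS_ROOT="${PROCESS_ROOT:-/var/run/cloudera-scm-agent/process}"

# Print the most recently modified *-hdfs-FAILOVERCONTROLLER directory
# under the directory given as $1 (prints nothing if none exists).
latest_zkfc_dir() {
  ls -1dt "$1"/*-hdfs-FAILOVERCONTROLLER 2>/dev/null | head -n 1
}

# Succeed when the given text contains the keytab error quoted in this step.
has_keytab_error() {
  printf '%s\n' "$1" |
    grep -q "Running in secure mode, but config doesn't have a keytab"
}

# Print the ZooKeeper shell commands that create the HA ZNodes
# for the nameservice given as $1.
znode_commands() {
  printf 'create /hadoop-ha\n'
  printf 'create /hadoop-ha/%s\n' "$1"
}

conf_dir=$(latest_zkfc_dir "$PROCESS_ROOT")
if [ -n "$conf_dir" ]; then
  # Printed, not executed, so the sketch is safe to run anywhere.
  echo "Would run: /opt/cloudera/parcels/CDH/bin/hdfs --config $conf_dir/ zkfc -formatZK"
fi

# Sample nameservice; replace with your <namenode_namespace>.
znode_commands "nameservice1"
```

If `has_keytab_error` matches the `zkfc -formatZK` output, the lines printed by `znode_commands` are the ones to enter in the shell opened with `zookeeper-client -server <a_zk_server>`.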

- This step is an alternative to step 3.

  - Log in to Cloudera Manager.

  - Navigate to Clusters.

  - Click HDFS.

  - Go to the Configuration tab.

  - Under Filters > Scope, click Failover Controller.

  - Navigate to the Failover Controller instance.

  - Initialize Automatic Failover Znode.

  - Start the HDFS service again.